![[NASA Logo]](../Images/nasaball.gif)

NASA Procedures and Guidelines

This Document is Obsolete and Is No Longer Used.

P.1 Purpose

P.2 Applicability

P.3 Authority

P.4 Applicable Documents and Forms

P.5 Measurement/Verification

P.6 Cancellation

1.1 Background

1.2 Framework for Systems Engineering Procedural Requirements

1.3 Guiding Principles of Technical Excellence

1.4 Framework for Systems Engineering Capability

1.5 Document Organization

2.1 Roles and Responsibilities Relative to System Engineering Practices

2.2 Tailoring and Customizing

3.1 Introduction

3.2 Common Technical Processes Requirements

4.1 Introduction

4.2 Prior to Contract Award

4.3 During Contract Performance

4.4 Contract Completion

5.1 Life-Cycle

5.2 Life-Cycle and Technical Review Requirement

6.1 Systems Engineering Management Plan Function

6.2 Technical Team Responsibilities

Figure 1-1 - Hierarchy of Related Documents

Figure 1-2 - Documentation Relationships

Figure 1-3 - Technical Excellence - Pillars and Foundation

Figure 1-4 - SE Framework

Figure 3-1 - Systems Engineering (SE) Engine

Figure 3-2 - Application of SE Engine Common Technical Processes Within System Structure

Figure 3-3 - Sequencing of the Common Technical Processes

Figure 3-4 - SE Engine Implemented for a Simple Single-Pass Waterfall-Type Life Cycle

Figure 5-1 - NASA Uncoupled and Loosely Coupled Program Life Cycle

Figure 5-2 - NASA Tightly Coupled Program Life Cycle

Figure 5-3 - NASA Single-Project Program Life Cycle

Figure 5-4 - The NASA Project Life Cycle

Figure A-1 - Enabling Product Relationship to End Products

Table 5-1 - SE Work Product Maturity

Table G-1 - SRR Entrance and Success Criteria for Programs

Table G-2 - SDR Entrance and Success Criteria for Programs

Table G-3 - MCR Entrance and Success Criteria

Table G-4 - SRR Entrance and Success Criteria

Table G-5 - MDR/SDR Entrance and Success Criteria (Projects and Single-Project Program)

Table G-6 - PDR Entrance and Success Criteria

Table G-7 - CDR Entrance and Success Criteria

Table G-8 - PRR Entrance and Success Criteria

Table G-9 - SIR Entrance and Success Criteria

Table G-10 - TRR Entrance and Success Criteria

Table G-11 - SAR Entrance and Success Criteria

Table G-12 - ORR Entrance and Success Criteria

Table G-13 - MRR/FRR Entrance and Success Criteria

Table G-14 - PLAR Entrance and Success Criteria

Table G-15 - CERR Entrance and Success Criteria

Table G-16 - PFAR Entrance and Success Criteria

Table G-17 - DR Entrance and Success Criteria

Table G-18 - Disposal Readiness Review Entrance and Success Criteria

Table G-19 - Peer Review Entrance and Success Criteria

Table G-20 - PIR/PSR Entrance and Success Criteria

Table G-21 - DCR Entrance and Success Criteria

Table J-1 - Deleted Requirements and Justification

| Change | Date | Description |
|---|---|---|
| 1 | 01/19/2021 | Updated with admin changes: Section 3.1.5.9 editorial fix; Section 5.2.2.4 reference fix; Appendix A "Will" to "can" in Engineering Unit definition; Appendix G changes to Table G-9, Success Criteria 7 and 8; Table G-12, Entrance Criteria 9.d; and Table G-13, Entrance Criteria 7.e |
| 2 | 02/22/2022 | Updated with admin changes: Appendix E, TRL, delete example b. under TRL 2 |

P.1 Purpose

This document establishes the NASA processes and requirements for implementation of Systems Engineering (SE) by programs/projects. NASA SE is a logical systems approach performed by multidisciplinary teams to engineer and integrate NASA's systems to ensure NASA products meet the customer's needs. Implementation of this systems approach will enhance NASA's core engineering capabilities while improving safety, mission success, and affordability. This systems approach is applied to all elements of a system (i.e., hardware, software, and human) and all hierarchical levels of a system over the complete program/project life cycle.

P.2 Applicability

a. This NASA Procedural Requirement (NPR) applies to NASA Headquarters and NASA Centers, including component facilities and technical and service support centers. This NPR applies to NASA employees and NASA support contractors that use NASA processes to augment and support NASA technical work. This NPR applies to the Jet Propulsion Laboratory (JPL), a Federally Funded Research and Development Center, and to other contractors, grant recipients, and parties to agreements only to the extent specified or referenced in the appropriate contracts, grants, or agreements. (See Chapter 4.)

b. This NPR applies to air and space flight, research and technology, information technology (IT), and institutional programs and projects. Tailoring the requirements in this NPR and customizing practices, based on criteria such as system/product size, complexity, criticality, acceptable risk posture, and architectural level, is necessary and expected. See Section 2.2 for tailoring and customizing descriptions. For IT programs and projects, see NPR 7120.7 for applicable SE tailoring.

c. In this document, a project is viewed as a specific investment with defined goals, objectives, and requirements; most projects have a life-cycle cost, a beginning, and an end. Projects normally yield new or revised products or services that directly address NASA strategic needs. They are performed through a variety of means, such as wholly in-house; by Government, industry, international, or academic partnerships; or through contracts with private industry.

d. The requirements enumerated in this document are applicable to all new programs and projects, as well as to all programs and projects currently in the Formulation Phase, as of the effective date of this document. (See NPR 7120.5, NASA Space Flight Program and Project Management Requirements; NPR 7120.7, NASA Information Technology and Institutional Infrastructure Program and Project Management Requirements; or NPR 7120.8, NASA Research and Technology Program and Project Management Requirements; for definitions of program phases.) This NPR also applies to programs and projects in their Implementation Phase as of the effective date of this document. For existing programs/projects, regardless of their current phase, waivers or deviations allowing continuation of current practices that do not comply with one or more requirements of this NPR may be granted using the Center's Engineering Technical Authority (ETA) process.

e. Many other discipline areas perform functions during the program/project life cycle that influence, or are influenced by, the engineering functions performed and, therefore, need to be fully integrated into the SE processes. These discipline areas include, but are not limited to, health and medical, safety, reliability, maintainability, quality assurance, IT, cybersecurity, logistics, operations, training, human system integration, planetary protection, and environmental protection. The descriptions of these disciplines and their relationship to the overall program/project management life cycle are defined in other NASA directives; for example, the safety, reliability, maintainability, and quality assurance requirements and standards are defined in the Office of Safety and Mission Assurance (OSMA) directives and standards, and health and medical requirements are defined in the Office of the Chief Health and Medical Officer (OCHMO) directives and standards. See, for example, NASA-STD-3001, NASA Space Flight Human System Standard, Volume 1 and Volume 2, and NPR 8705.2, Human-Rating Requirements for Space Systems.

f. In this NPR, all mandatory actions (i.e., requirements) are denoted by statements containing the term "shall." The requirements are explicitly shown as [SE-XX] for clarity and tracking purposes as indicated in Appendix H. The terms "may" or "can" denote discretionary privilege or permission, "should" denotes a good practice and is recommended but not required, "will" denotes expected outcome, and "are/is" denotes descriptive material.

g. In this NPR, all document citations are assumed to be the latest version, unless otherwise noted.
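Item f above defines a keyword convention and an [SE-XX] tagging scheme that lend themselves to simple automated checks when building a requirements-tracking list. The following Python sketch is a hypothetical illustration, not a NASA tool or the Appendix H compliance matrix format: it assumes one statement per string and whole-word keyword matching, and the names `classify_statement`, `KEYWORDS`, and `TAG_PATTERN` are introduced here for illustration only.

```python
import re
from typing import List, Tuple

# Keyword-to-meaning mapping from the convention in item f. Checking
# "shall" first is an assumption of this sketch: a statement containing
# both "shall" and "should" is classified as a requirement.
KEYWORDS = [
    ("shall", "requirement"),
    ("should", "recommended practice"),
    ("may", "permission"),
    ("can", "permission"),
    ("will", "expected outcome"),
]

# Requirement identifiers written in the [SE-XX] form, e.g. "[SE-06]".
TAG_PATTERN = re.compile(r"\[SE-(\d+)\]")


def classify_statement(text: str) -> Tuple[str, List[str]]:
    """Return (category, requirement tags) for one NPR statement.

    Hypothetical helper: keywords are matched as whole words,
    case-insensitively; statements with no keyword fall back to
    "descriptive", covering the "is/are" case.
    """
    words = set(re.findall(r"[a-z]+", text.lower()))
    tags = ["SE-" + num for num in TAG_PATTERN.findall(text)]
    for keyword, category in KEYWORDS:
        if keyword in words:
            return category, tags
    return "descriptive", tags
```

Negated forms such as "shall not" or "may not" express prohibitions; this keyword-only sketch does not distinguish them, so a real tracking tool would need additional handling.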

a. National Aeronautics and Space Act, 51 U.S.C. § 20113(a).

b. NPD 1000.0, NASA Governance and Strategic Management Handbook.

c. NPD 1000.3, The NASA Organization.

d. NPD 1001.0, NASA Strategic Plan.

e. Government Contract Quality Assurance, 48 CFR, subpart 1846.4.

f. NPD 2570.5, NASA Electromagnetic Spectrum Management.

g. NPD 7120.4, NASA Engineering and Program/Project Management Policy.

h. NPR 1441.1, NASA Records Management Program Requirements.

i. NPR 2570.1, NASA Radio Frequency (RF) Spectrum Management Manual.

j. NPR 7120.5, NASA Space Flight Program and Project Management Requirements.

k. NPR 7120.7, NASA Information Technology and Institutional Infrastructure Program and Project Management Requirements.

l. NPR 7120.8, NASA Research and Technology Program and Project Management Requirements.

m. NPR 7150.2, NASA Software Engineering Requirements.

n. NPR 8000.4, Agency Risk Management Procedural Requirements.

o. NPR 8590.1, Environmental Compliance and Restoration Program.

p. NPR 8705.2, Human-Rating Requirements for Space Systems.

q. NPR 8705.5, Technical Probabilistic Risk Assessment (PRA) Procedures for Safety and Mission Success for NASA Programs and Projects.

r. NPR 8820.2, Facility Project Requirements (FPR).

s. NASA-HDBK-2203, NASA Software Engineering Handbook.

t. NASA-STD-3001, NASA Space Flight Human System Standard.

u. NASA/SP-2010-576, NASA Risk-Informed Decision Making Handbook.

v. NASA/SP-2011-3422, NASA Risk Management Handbook.

w. NASA/SP-2015-3709, Human Systems Integration (HSI) Practitioner's Guide.

x. NASA/SP-2016-6105, NASA Systems Engineering Handbook.

y. NASA/SP-2016-6105-SUPPL, Expanded Guidance for NASA Systems Engineering.

P.5 Measurement/Verification

a. Compliance with this document is verified by the Office of the Chief Engineer by surveys, audits, reviews, and/or reporting requirements.

b. Compliance, including tailoring, for programs and projects is documented by appending a completed Compliance Matrix for Programs/Projects (see Appendix H) to the Systems Engineering Management Plan (SEMP) or other equivalent program/project documentation and by submitting the review products and plans identified in this document to the responsible NASA officials at the life-cycle and technical reviews. Programs and projects may substitute a matrix that documents compliance with their particular Center implementation of this NPR, if applicable.

P.6 Cancellation

NPR 7123.1B, NASA Systems Engineering Processes and Requirements, dated April 18, 2013.

1.1 Background

1.1.1 Systems engineering at NASA requires the application of a systematic, disciplined engineering approach that is quantifiable, recursive, iterative, and repeatable for the development, operation, maintenance, and disposal of systems integrated into a whole throughout the life cycle of a project or program. The emphasis of SE is on safely achieving stakeholder functional, physical, operational, and performance (including human performance) requirements in the intended use environments over the system's planned life within cost and schedule constraints.

1.1.2 This NPR complements the NASA policy requirements for the administration, management, and review of all programs and projects, as specified in:

a. NPR 7120.5.

b. NPR 7120.7.

c. NPR 7120.8.

d. NPR 7150.2, NASA Software Engineering Requirements.

e. NPR 8590.1, Environmental Compliance and Restoration Program.

f. NPR 8820.2, Facility Project Requirements (FPR).

1.1.3 The processes described in this document build upon and apply best practices and lessons learned from NASA, other governmental agencies, and industry. They clearly delineate a successful model for completing comprehensive technical work, reducing program and technical risk, and increasing the likelihood of mission success. The requirements established in this NPR should be tailored and customized for criteria such as system/product size, complexity, criticality, acceptable risk posture, architectural level, development plans, and schedule following the guidance of Section 2.2.

1.1.4 Precedence

The order of precedence in case of conflict between requirements is 51 U.S.C. § 20113(a)(1), National Aeronautics and Space Act; NPD 1000.0, NASA Governance and Strategic Management Handbook; NPD 1000.3, The NASA Organization; NPD 7120.4, NASA Engineering and Program/Project Management Policy; and NPR 7123.1, NASA Systems Engineering Processes and Requirements.

1.1.5 Figures

1.1.5.1 Figures within this NPR are informational.

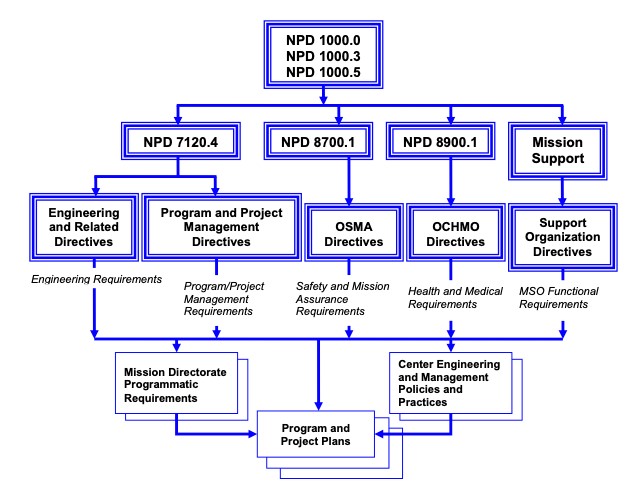

1.2.1 Institutional requirements are the responsibility of the institutional authorities. They focus on how NASA does business and are independent of any particular program or project. These requirements are issued by NASA Headquarters and by Center organizations and are normally documented in NASA Policy Directives (NPDs), NASA Procedural Requirements (NPRs), NASA Standards, Center Policy Directives (CPDs), Center Procedural Requirements (CPRs), and Mission Directorate (MD) requirements. Figure 1-1 shows the flow down from NPD 1000.0 through Program and Project Plans.

Figure 1-1 - Hierarchy of Related Documents

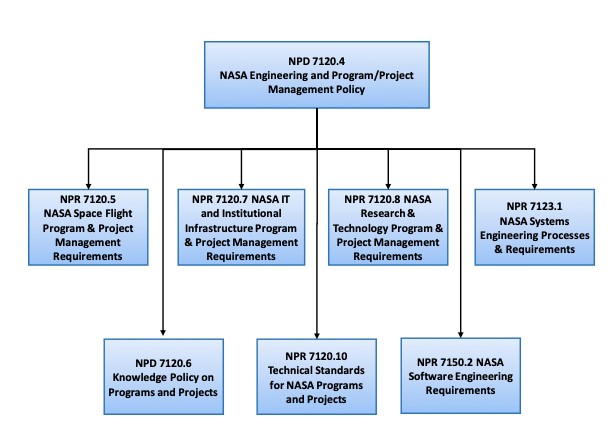

1.2.2 This NPR focuses on SE processes and requirements. It is one of several related Engineering and Program/Project NPRs that flow down from NPD 7120.4, as shown in Figure 1-2.

Figure 1-2 - Documentation Relationships

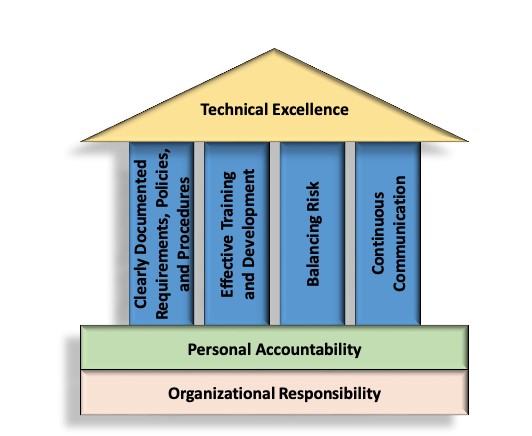

1.3.1 The Office of the Chief Engineer (OCE) provides leadership for technical excellence at NASA. As depicted in Figure 1-3, there are four pillars to achieving technical excellence and strengthening the SE capability. These pillars are intended to ensure that every NASA program and project achieves the highest possible level of technical excellence.

Figure 1-3 - Technical Excellence - Pillars and Foundation

a. Clearly Documented Requirements, Policies, and Procedures. Given the complexity and uniqueness of the systems that NASA develops and deploys, clear policies and procedures are essential to mission success. All NASA technical policies and procedures flow directly from NPD 1000.0. Policies and procedures are only as effective as their implementation, facilitated by personal and organizational accountability and effective training. OCE ensures policies and procedures are consistent with and reinforce NASA's organizational beliefs and values. OCE puts in place effective, clearly documented policies and procedures, supplemented by guidance in handbooks and standards to facilitate optimal performance, rigor, and efficiency among NASA's technical workforce.

b. Effective Training and Development. NASA is fortunate that the importance of its mission allows it to attract and retain the most capable technical workforce in the world. OCE bears responsibility for providing this workforce with the technical training and development necessary to carry out the Agency's missions. At the Agency level, NASA's Academy of Program/Project and Engineering Leadership (APPEL) provides for the development of engineering leaders and teams within NASA. APPEL is augmented by technical leadership development at many Centers. Training consists of more than just transferring a set of skills. In addition to ensuring that NASA's technical workforce is knowledgeable about standards, specifications, processes, and procedures, the training available through APPEL and other curriculums is rooted in an engineering philosophy that grounds NASA's approach to technical work and decision making. These offerings give historical and philosophical perspectives that teach and reinforce NASA's organizational values and beliefs. OCE provides full support for training and development activities that will allow NASA to maximize the abilities of its technical workforce.

c. Balancing Risk. Risk is an inherent factor in any spacecraft, aircraft, or technology development. Proper risk management entails striking a balance between the tensions of program/project management and engineering independence. Engineering rigor cannot be sacrificed for schedules and budgets, and likewise programmatic concerns cannot be overlooked in the development of the technical approach to a given program or project; technical risk will be consciously and deliberately traded against budget and schedule. The Engineering Technical Authority (ETA) is responsible for ensuring risks are considered and good engineering practices are followed in technical development and implementation. OCE oversees all activities related to the exercise of ETA across the Agency. Section 2.1.6 of this document contains additional information on the ETA responsibilities.

d. Continuous Communications. Communication lies at the heart of all leadership and management challenges. Most major failures in NASA's history have stemmed in part from poor communication. Among the Agency's technical workforce, communication takes a myriad of forms: continuous risk management (CRM)/risk-informed decision making (RIDM), data sharing, knowledge management, knowledge sharing, dissemination of best practices and lessons learned, and continuous learning to name but a few. The complexity of NASA's programs and projects demands a rigorous culture of continuous and open communication that flourishes within the context of policies and procedures and knowledge transfer, while empowering individuals at all levels to raise concerns without fear of adverse consequences. OCE promotes a culture of continuous communications.

1.3.2 Personal and organizational accountability and responsibility lay the foundation for technical excellence.

a. Personal Accountability. Personal accountability means that each individual understands that he or she is responsible for the success of the mission. Each person, regardless of position or area of responsibility, contributes to success. What NASA does is so complex and interdependent that every component needs to work for the Agency to be successful. All of those who constitute NASA's technical community need to possess the knowledge and confidence to speak up when something is amiss in their or anyone else's area of responsibility to ensure mission success.

b. Organizational Responsibility. NASA's technical organizations have a responsibility to provide the proper training, tools, and environment for technical excellence. Providing the proper environment for technical excellence means establishing regular and open communication so that individuals feel comfortable exercising their personal responsibility. It also requires ensuring that those who prefer to remain in the technical field (instead of management) have a satisfying and rewarding career track (e.g., NASA Technical Fellows, ST/SL or GS-15 technical leads).

1.3.3 A central component of the environment for technical excellence is strengthening the SE capability.

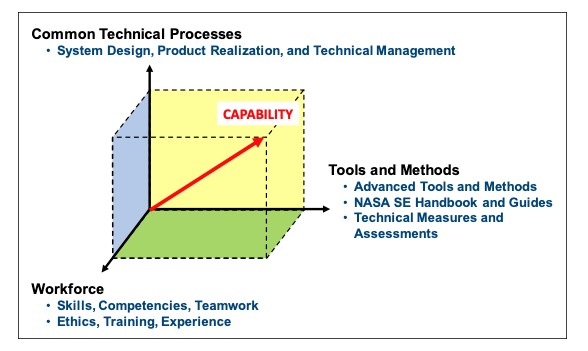

1.4.1 The framework for SE capability consists of three elements—the common technical processes, tools and methods, and training for a skilled workforce. The relationship of the three elements is illustrated in Figure 1-4. The integrated implementation of the three elements of the SE framework is intended to strengthen and improve the overall capability required for the efficient and effective engineering of NASA systems. Each element is described below.

Figure 1-4 - SE Framework

a. The common technical processes of this NPR provide what has to be done to engineer quality system products and achieve mission success. These processes are applied to the integration of hardware, software, and human systems as one integrated whole. This NPR describes the common SE processes as well as standard concepts and terminology for consistent application and communication of these processes across the Agency. This NPR, supplemented by NASA/SP-2016-6105, NASA Systems Engineering Handbook, and endorsed SE standards, also describes a structure for applying the common technical processes.

b. Tools and methods range from the facilities and resources necessary to perform the technical work to the clearly documented policies, processes, and procedures that allow personnel to work safely and efficiently. Tools and methods enable the efficient and effective completion of the activities and tasks of the common technical processes. The SE capability is strengthened through the infusion of advanced methods and tools into the common technical processes to achieve greater efficiency, collaboration, and communication among distributed teams. The NASA Systems Engineering Handbook is a resource for methods and tools to support the Centers' implementation of the required technical processes in their programs/projects.

c. A well-trained, knowledgeable, and experienced technical workforce is essential for improving SE capability. The workforce will be able to apply NASA and Center tools and methods for the completion of the required SE processes within the context of the program or project to which they are assigned. In addition, they will be able to effectively communicate requirements and solutions to customers, other engineers, and management to work efficiently and effectively on a team. Issues of recruitment, retention, and training are aspects included in this element. The OCE will facilitate training the NASA workforce on the application of this and associated NPRs.

1.4.2 Improvements to SE capability can be measured through assessing and updating the implementation of the common technical processes, use of adopted methods and tools, and workforce engineering training.

1.5 Document Organization

1.5.1 This SE NPR is organized into the following chapters:

a. The Preface describes items such as the purpose, applicability, authority, and applicable documents of this NPR.

b. Chapter 1 describes the SE framework and document organization.

c. Chapter 2 describes the institutional and programmatic requirements, including roles and responsibilities. Tailoring of SE requirements and customizing SE practices are also addressed.

d. Chapter 3 describes the core set of common Agency-level technical processes and requirements for engineering NASA system products throughout the product life cycle.

e. Chapter 4 describes the activities and requirements to be accomplished by assigned NASA technical teams or individuals (NASA employees and NASA support contractors) when performing technical oversight of a prime or other external contractor.

f. Chapter 5 describes the life-cycle and technical review requirements throughout the program and project life cycles. Appendix G contains entrance/success criteria guidance for each of the reviews.

g. Chapter 6 describes the Systems Engineering Management Plan (SEMP), including the SEMP role, functions, and content. Appendix J of NASA/SP-2016-6105 provides details of a generic SEMP annotated outline.

2.1 Roles and Responsibilities Relative to System Engineering Practices

2.1.1 General

The roles and responsibilities of senior management are defined in part in NPD 1000.0 and NPD 7120.4. The roles and responsibilities of program and project managers are defined in NPR 7120.5, NPR 7120.7, NPR 7120.8, NPR 8820.2, and other NASA directives. This NPR establishes SE processes and responsibilities.

2.1.1.1 For programs and projects involving more than one Center, the governing Mission Directorate or mission support office determines whether a Center executes a program/project in a lead role or in a supporting role. For Centers in supporting roles, compliance with this NPR should be jointly negotiated and documented in the lead Center's program/project SEMP or other equivalent program/project documentation along with approval through the lead Center's ETA process.

2.1.1.2 The roles and responsibilities associated with program and project management and Technical Authority (TA) are defined in the Program and Project Management NPRs (for example, NPR 7120.5 for space flight projects). Specific roles and responsibilities of the program/project manager and the ETA related to the SEMP are defined in Sections 2.1.6 and 6.2 of this NPR.

2.1.2 Office of the Chief Engineer (OCE)

2.1.2.1 The NASA Chief Engineer is responsible for policy, oversight, and assessment of the NASA engineering and program/project management process; implements the ETA process; and serves as principal advisor to the Administrator and other senior officials on matters pertaining to the Agency's technical capability and readiness to execute NASA programs and projects.

2.1.2.2 The NASA Chief Engineer provides overall leadership for the ETA process for programs and projects, including Agency engineering policy direction, requirements, and standards. The NASA Chief Engineer hears appeals of engineering decisions when they cannot be resolved at lower levels.

2.1.3 Mission Directorate or Headquarters Program Offices

2.1.3.1 The Mission Directorate Associate Administrator (MDAA) is responsible for establishing, developing, and maintaining the Programmatic Authority (i.e., policy and procedures, programs, projects, budgets, and schedules) in managing programs and projects within their Mission Directorate.

2.1.3.2 When programs and projects are managed at Headquarters or within Mission Directorates, that program office is responsible for the requirements in this NPR. Technical teams residing at Headquarters will follow the requirements of this NPR unless tailored by the governing organization and responsible ETA. The technical teams residing at Centers will follow Center-level process requirement documents.

2.1.3.3 The Office of the Chief Information Officer provides leadership, planning, policy direction, and oversight for the management of NASA information and NASA information technology (IT).

2.1.4 Center Directors

2.1.4.1 The Center Director is responsible for establishing, developing, and maintaining the Institutional Authority (e.g., processes and procedures, human capital, facilities, and infrastructure) required to execute programs and projects assigned to their Center. This includes:

a. Ensuring the Center is capable of accomplishing the programs, projects, and other activities assigned to it in accordance with Agency policy and the Center's best practices and institutional policies by establishing, developing, and maintaining institutional capabilities (processes and procedures, human capital—including trained/certified program/project personnel, facilities, and infrastructure) required for the execution of programs and projects.

b. Performing periodic program and project reviews to assess technical and programmatic progress to ensure performance in accordance with their Center's and the Agency's requirements, procedures, processes, and other documentation.

c. Working with the Mission Directorate and the program and project managers, once assigned, to assemble the program/project team(s) and to provide needed Center resources.

d. Providing support and guidance to programs and projects in resolving technical and programmatic issues and risks.

2.1.4.2 The Center Director is responsible for developing the Center's ETA policies and practices consistent with Agency policies and standards. The Center Director is the Center ETA responsible for Center engineering design processes, specifications, rules, best practices, and other activities necessary to fulfill mission performance requirements for programs, projects, and/or major systems implemented by the Center. The Center Director delegates the Center ETA implementation responsibility to an individual in the Center's engineering leadership. The Center ETA supports processing changes to, and waivers or deviations from, requirements that are the responsibility of the ETA. This includes all applicable Agency and Center engineering directives, requirements, procedures, and standards.

Note: Centers may employ and tailor relevant government or industry standards that meet the intent of the requirements established in this NPR to augment or serve as the basis for their processes. A listing of endorsed technical standards is maintained on the NASA Technical Standards System under "Endorsed Standards" https://standards.nasa.gov/endorsed_standards.

2.1.4.3 [SE-01] through [SE-05] deleted.

Note: Rather than resequence the remaining requirements, the original requirement numbering was left intact in case Centers or other organizations refer to these requirement numbers in their flow-down requirement documents. Appendix J is provided to account for the deleted requirements. For each requirement that was deleted, the justification for its deletion is noted.

2.1.5 Technical Teams

2.1.5.1 Systems engineering is implemented by the technical team in accordance with the program/project SEMP or other equivalent program/project documentation. The makeup and organization of each technical team is the responsibility of each Center or program and includes all the personnel required to implement the technical aspects of the program/project.

2.1.5.2 The technical team, in conjunction with the Center's ETA, is responsible for completing the compliance matrix in Appendix H, capturing any tailoring, and including it in the SEMP or other equivalent program/project documentation.

2.1.5.3 For systems that contain software, the technical team ensures that software developed within NASA, or acquired from other entities, complies with NPR 7150.2.

a. NPR 7150.2 elaborates on the requirements in NPR 7123.1 and determines the applicability of requirements based on the Agency's software classification.

b. NPD 7120.4 contains additional Agency principles for the acquisition, development, maintenance, and management of software.

2.1.5.4 The technical team ensures that human systems integration activities, products, planning, and execution align with NASA/SP-2015-3709, Human Systems Integration (HSI) Practitioner's Guide.

2.1.6 Engineering Technical Authority

2.1.6.1 The ETA establishes and is responsible for the engineering design processes, specifications, rules, best practices, and other activities necessary to fulfill programmatic mission performance requirements. Centers delegate ETA to the level appropriate for the scope and size of the program/project, which may be Center engineering leadership or individuals. When ETA is used in this document, it refers generically to different levels of ETA.

2.1.6.2 ETAs or their delegates at the program or project level:

a. Serve as members of program or project control boards, change boards, and internal review boards.

b. Work with the Center management and other TA personnel to ensure that the quality and integrity of program or project processes, products, and standards of performance related to engineering, SMA, and health and medical reflect the level of excellence expected by the Center and the TA community.

c. Ensure that requests for waivers or deviations from ETA requirements are submitted to, and acted on by, the appropriate level of ETA.

d. Assist the program or project in making risk-informed decisions that properly balance technical merit, cost, schedule, and safety across the system.

e. Provide the program or project with the ETA view of matters based on their knowledge and experience and raise needed dissenting opinions on decisions or actions. (See Dissenting Opinion Sections of NPR 7120.5, NPR 7120.8, and NPR 7120.7.)

f. Serve as an effective part of NASA's overall system of checks and balances.

2.1.6.3 The ETA for the program or project leads and manages the systems engineering activities. (Note that the ETA can delegate these responsibilities to a Chief Engineer or other personnel as needed.) A Center may have more than one engineering organization, in which case it delegates ETA to different areas as needed. The ETA may be delegated as appropriate to the size, complexity, and type of program/project. For example, for smaller projects ETA may be delegated to a line manager who is independent of the project, and for purely IT projects it may be delegated to the CIO.

2.1.6.4 To support the program/project and maintain ETA independence and an effective check and balance system, the ETA:

a. Will seek concurrence from the program/project manager when a program/project-level ETA is appointed.

b. Cannot approve a request for a waiver or deviation from a non-technical derived requirement established by a Programmatic Authority.

c. May approve a request for a waiver or deviation from a technical derived requirement if he/she ensures that the appropriate independent Institutional Authority subject matter expert, who is the steward for the involved technology, has concurred in the decision to approve the requirement waiver or deviation.

2.1.6.5 Although a limited number of individuals make up the ETA, their work is enabled by the contributions of the program's or project's working-level engineers and other supporting personnel (e.g., contracting officers). The working-level engineers do not have formally delegated Technical Authority and consequently may not serve in an ETA capacity. These engineers perform the detailed engineering and analysis for the program/project with guidance from their Center management and/or lead discipline engineers and support from the Center engineering infrastructure. They deliver the program/project products (e.g., hardware, software, designs, analysis, and technical alternatives) that conform to applicable programmatic, Agency, and Center requirements. They are responsible for raising issues to the program/project manager, Center engineering management, and/or the program/project ETA and are a key resource for resolving these issues.

2.1.6.6 Requirement [SE-06] concerning SEMP approval was moved to Section 6.1.8.

2.2 Tailoring and Customizing

Tailoring can be differentiated from customizing as described in NASA/SP-2016-6105. Tailoring is the removal of requirements through a waiver or deviation. Customizing is meeting the intent of a requirement through alternative approaches and does not require waivers or deviations.

2.2.1 Tailoring SE Requirements

2.2.1.1 SE requirements tailoring is the process used to seek relief from SE NPR requirements when that relief is consistent with program or project objectives, acceptable risk, and constraints.

2.2.1.2 The tailoring process (which can occur at any time in the program or project life cycle) results in deviations or waivers to requirements depending on the timing of the request (see Appendix A for definition of deviation and waiver).

2.2.1.3 The results of the program/project technical team's tailoring of SE requirements, whether from this NPR or from a particular Center's implementation of this NPR, will be documented in the SEMP or other equivalent project documentation, along with the supporting rationale (including the risk evaluation) and documented approvals through the Center's ETA process.

2.2.2 Customizing SE Practices

2.2.2.1 Customizing is the adaptation of SE practices that are used to accomplish the SE requirements as appropriate to the size, complexity, and acceptable risk of the program/project.

2.2.2.2 Technical teams under the guidance of the project ETA are encouraged to customize these recommended SE practices so that the intent of each SE practice is met in the most effective and efficient manner. The results of this customization do not require waivers or deviations but should be documented in the program/project SEMP or other equivalent program/project documentation.

2.2.3 Considerations for Tailoring or Customizing

Refer to NASA/SP-2016-6105 for examples of tailoring and customizing.

2.2.3.1 Considerations for tailoring or customizing should include but are not limited to:

a. Scope and visibility (e.g., organizations and partnerships involved, international agreements, amount of effort required).

b. Risk tolerance and failure consequences.

c. System size, functionality, and complexity (e.g., human space flight/flagship science vs. subscale technology demonstration).

d. Human involvement (e.g., human interfaces, critical crew (flight, ground) functions, interaction with, and control/oversight of (semi-) autonomous systems).

e. Impact on Agency IT security and national security.

f. Impact on other systems.

g. Longevity.

h. Serviceability (both ground and in-flight).

i. Constraints (including cost, schedule, degree of insight/oversight permitted with partnerships or international agreements).

j. Safety, quality, and mission assurance.

k. Current level of technology available.

l. Availability of industrial capacity.

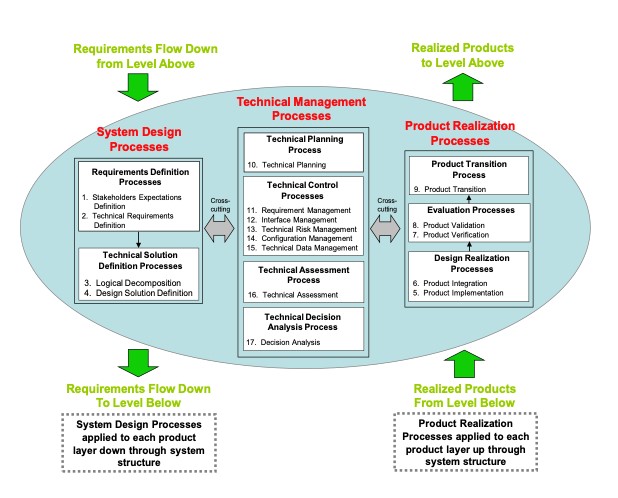

3.1 Introduction

3.1.1 This chapter establishes the core set of common technical processes and requirements to be used by NASA programs or projects in engineering system products during all life-cycle phases to meet phase success criteria and program/project objectives. The 17 common technical processes are described in this chapter, and their interactions are shown in Figure 3-1. This SE common technical processes model illustrates the use of:

a. System design processes for "top-down" design of each product in the system structure.

b. Product realization processes for "bottom-up" realization of each product in the system structure.

c. Cross-cutting technical management processes for planning, assessing, and controlling the implementation of the system design and product realization processes, and for guiding technical decision making (decision analysis).

3.1.2 The SE common technical processes model is referred to as an "SE engine" in this NPR to stress that these common technical processes are used to drive the development of the system products and associated work products required by management to satisfy the applicable product life-cycle phase success criteria while meeting stakeholder expectations within cost, schedule, and risk constraints.

3.1.3 This chapter identifies the following for each of the 17 common technical processes:

a. The specific requirement for Program/Project Managers to identify and implement (as defined in Section 3.2.1) the ETA-approved process.

b. A brief description of how the process is used as an element of the Systems Engineering Engine.

3.1.4 Typical practices for each process are identified in NASA/SP-2016-6105, where each process is described in terms of purpose, inputs, outputs, and activities. It should be emphasized that the practices documented in the handbook do not represent additional requirements that need to be executed by the technical team but provide best practices associated with the 17 common technical processes. As the technical team develops a tailored and customized approach for the application of these processes, sources of SE guidance and technical standards, such as NASA/SP-2016-6105 and endorsed industry standards, should be considered. Appendix I provides a list of NASA and endorsed military and industry standards applicable to Systems Engineering and available on the NASA Technical Standards System, found at https://standards.nasa.gov/endorsed_standards, and should be applied as appropriate for each program or project. For additional guidance on mapping HSI into the SE Engine, refer to NASA/SP-2015-3709, Section 3.0.

Figure 3-1 - Systems Engineering (SE) Engine

3.1.5 The context in which the common technical processes are used is described below. (Refer to "The Common Technical Processes and the SE Engine" in NASA/SP-2016-6105 for further information.)

3.1.5.1 The common technical processes are applied to each product layer to concurrently develop the products that will satisfy the operational or mission functions of the system (end products) and that will satisfy the life-cycle support functions of the system (enabling products). In this document, a product layer is a horizontal slice of the product breakdown hierarchy and includes both the end product and its associated enabling products. The enabling products facilitate the activities of system design, product realization, operations and mission support, sustainment, and end-of-product-life disposal or recycling by having the products and services available when needed.

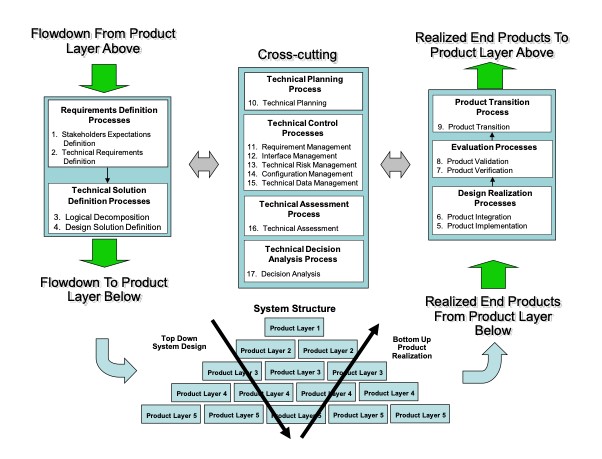

3.1.5.2 The common technical processes are applied to design a system solution definition for each product layer down and across each level of the system structure and to realize the product layer end products up and across the system structure. Figure 3-2 illustrates how the three major sets of processes of the Systems Engineering (SE) Engine (system design processes, product realization processes, and technical management processes) are applied to each product layer within a system structure.

Figure 3-2 - Application of SE Engine Common Technical Processes Within System Structure

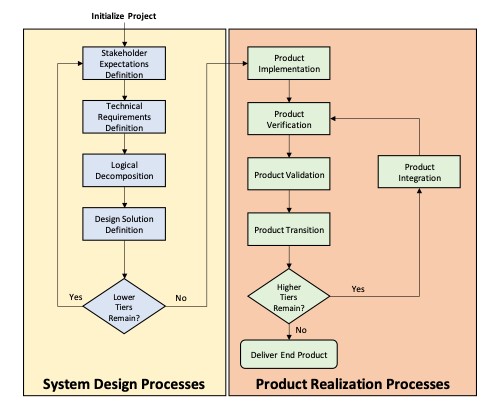

3.1.5.3 The common technical processes are used to define the product layers of the system structure in each applicable phase of the relevant life-cycle to generate work products and system products needed to satisfy the success criteria of the applicable phase. Figure 3-3 depicts the sequencing of the processes.

Figure 3-3 - Sequencing of the Common Technical Processes

3.1.5.4 There are four system design processes applied to each product-based product layer from the top to the bottom of the system structure:

a. Stakeholder Expectations Definition.

b. Technical Requirements Definition.

c. Logical Decomposition.

d. Design Solution Definition. (See Figure 3-1 and Figure 3-2.)

3.1.5.5 During the application of these four processes to a product layer, it is expected that activities from other processes not yet completed will need to be applied, and that process activities already performed will need to be repeated, to arrive at an acceptable set of requirements and solutions. There will also be a need to interact with the technical management processes to help identify and resolve issues and to decide between alternatives. For software products, the technical team ensures that the process executions comply with the software requirements of NPR 7150.2, NASA Software Engineering Requirements. The technical team also ensures that human capabilities and limitations are understood, along with how they affect the design of the system's hardware and software. Refer to NASA/SP-2015-3709.

3.1.5.6 There are five product realization processes. Four of the product realization processes are applied to each end product of a product layer from the bottom to the top of the system structure:

a. Either Product Implementation for the lowest level or Product Integration for subsequent levels.

b. Product Verification.

c. Product Validation.

d. Product Transition. (See Figure 3-1 and Figure 3-2.)

3.1.5.7 The form of the end product realized will depend on the applicable product life-cycle phase, location within the system structure of the product layer containing the end product, and the success criteria of the phase. Typical early phase products are reports, models, simulations, mockups, prototypes, or demonstrators. Typical later phase products may take the form of qualification units, final mission products, and fully assembled payloads and instruments.

3.1.5.8 There are eight technical management processes: Technical Planning, Technical Requirements Management, Interface Management, Technical Risk Management, Configuration Management, Technical Data Management, Technical Assessment, and Decision Analysis. (See Figure 3-1 and Figure 3-2.) These technical management processes supplement the program and project management directives (e.g., NPR 7120.5), which specify the technical activities for which program and project managers are responsible.

3.1.5.9 Note that all 17 processes are used during the design and realization phases of a project. After the end product is developed and placed into operation, the technical management processes in the center chamber of the SE Engine continue to be employed. For more information on the use of the SE Engine during the operational phase, refer to NASA/SP-2016-6105.

3.1.5.10 The common technical processes are applied by assigned technical teams and individuals trained in the requirements of this NPR.

3.1.5.11 The assigned technical teams and individuals use the appropriate and available sets of tools and methods to accomplish required common technical process activities. This includes the use of modeling and simulation as applicable to the product phase, location of the product layer in the system structure, and the applicable phase success criteria.

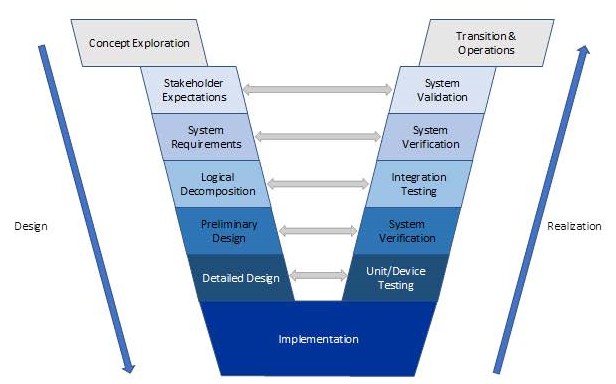

3.1.6 Relationship of the SE Engine to the SE Vee

The NASA SE Engine is a highly versatile representation of the core SE processes necessary to properly engineer a system. It can be used with any type of life cycle, including waterfall, spiral, and agile, and for systems ranging from very simple to highly complex. The NASA SE Engine has its heritage in the classic SE Vee; when it is used for a simple one-pass waterfall-type life cycle, the left and right chambers of the engine can be represented as shown in Figure 3-4. For a more detailed description of how the SE Engine evolved from the SE Vee, refer to NASA/SP-2016-6105, NASA Systems Engineering Handbook.

Figure 3-4 - SE Engine Implemented for a Simple Single-Pass Waterfall-Type Life Cycle

3.2 Common Technical Processes Requirements

3.2.1 For Section 3.2, "identify" means to use either an approved process or a customized process that is approved by the ETA or their delegate. "Implement" includes documenting and communicating the approved process, providing resources to execute the process, providing training on the process, and monitoring and controlling the process. The technical team is responsible for the execution of these 17 required processes per Section 2.1.5.

3.2.2 Stakeholder Expectations Definition Process

3.2.2.1 Program/Project Managers shall identify and implement an ETA-approved Stakeholder Expectations Definition process to include activities, requirements, guidelines, and documentation, as tailored and customized for the definition of stakeholder expectations for the applicable product layer [SE-07].

3.2.2.2 The Stakeholder Expectations Definition process is used to elicit and define use cases, scenarios, concept of operations, and stakeholder expectations for the applicable product life-cycle phases and product layer. This includes expectations such as:

a. Operational end products and life-cycle-enabling products of the product layer.

b. Affordability.

c. Operator or user interfaces.

d. Expected skills and capabilities of operators or users.

e. Expected number of simultaneous users.

f. System and human performance criteria.

g. Technical authority, standards, regulations, and laws.

h. Factors such as health and medical, safety, planetary protection, orbital debris, quality, cybersecurity, context of use by humans, reliability, availability, maintainability, electromagnetic compatibility, interoperability, testability, transportability, supportability, usability, and disposability.

i. For crewed missions, crew health and performance capabilities and limitations, risk posture, crew survivability, and system habitability.

j. Local management constraints on how work will be done (e.g., operating procedures).

3.2.2.3 The baselined stakeholder expectations are used for validation of the product layer end product during product realization. Measures of Effectiveness (MOEs) are also defined as part of this process. For more information on MOEs, refer to NASA/SP-2016-6105, NASA Systems Engineering Handbook.

3.2.3 Technical Requirements Definition Process

3.2.3.1 Program/Project Managers shall identify and implement an ETA-approved Technical Requirements Definition process to include activities, requirements, guidelines, and documentation, as tailored and customized for the definition of technical requirements from the set of agreed upon stakeholder expectations for the applicable product layer [SE-08].

3.2.3.2 The Technical Requirements Definition process is used to transform the baselined stakeholder expectations into unique, quantitative, and measurable technical requirements expressed as "shall" statements that can be used for defining a design solution for the product layer end product and related enabling products. This process also includes validation of the requirements to ensure that they are well-formed (clear and unambiguous), complete (in agreement with customer and stakeholder needs and expectations), consistent (conflict-free), individually verifiable, and traceable to a higher-level requirement or goal. As part of this process, Measures of Performance (MOPs) and Technical Performance Measures (TPMs) are defined. For more information on MOPs and TPMs, refer to NASA/SP-2016-6105, NASA Systems Engineering Handbook.
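Several of the well-formedness criteria above lend themselves to simple automated screening before engineering review. The sketch below is illustrative only and is not prescribed by this NPR: the `Requirement` record, the `check_requirement` function, and the list of vague terms are hypothetical, and heuristic checks like these supplement, rather than replace, review of each requirement by the technical team.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical list of terms that often signal an ambiguous requirement.
VAGUE_TERMS = ("adequate", "as appropriate", "user-friendly", "sufficient", "tbd", "etc.")


@dataclass
class Requirement:
    req_id: str
    text: str
    parent_id: Optional[str]            # None only for top-level requirements
    verification_method: Optional[str]  # e.g., "Test", "Analysis", "Inspection", "Demonstration"


def check_requirement(req: Requirement, is_top_level: bool = False) -> List[str]:
    """Return heuristic findings; an empty list means nothing was flagged."""
    findings: List[str] = []
    shall_count = len(re.findall(r"\bshall\b", req.text, flags=re.IGNORECASE))
    if shall_count == 0:
        findings.append("not expressed as a 'shall' statement")
    elif shall_count > 1:
        findings.append("multiple 'shall' clauses; split so each is individually verifiable")
    lowered = req.text.lower()
    for term in VAGUE_TERMS:
        if term in lowered:
            findings.append(f"ambiguous term: {term!r}")
    if not re.search(r"\d", req.text):
        findings.append("no numeric value; may not be quantitative or measurable")
    if req.verification_method is None:
        findings.append("no verification method assigned")
    if req.parent_id is None and not is_top_level:
        findings.append("not traceable to a higher-level requirement")
    return findings


ok = Requirement("SYS-12", "The spacecraft shall provide 450 W of power at end of life.", "MIS-3", "Analysis")
bad = Requirement("SYS-13", "The display shall be user-friendly and shall respond quickly.", None, None)
print(check_requirement(ok))        # []
print(len(check_requirement(bad)))  # 5
```

Checks such as "contains a number" are deliberately crude; they flag candidates for human review rather than judge a requirement acceptable or unacceptable.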

3.2.4 Logical Decomposition Process

3.2.4.1 Program/Project Managers shall identify and implement an ETA-approved Logical Decomposition process to include activities, requirements, guidelines, and documentation, as tailored and customized for logical decomposition of the validated technical requirements of the applicable product layer [SE-09].

3.2.4.2 The logical decomposition process is used to improve understanding of the defined technical requirements and the relationships among the requirements (e.g., functional, behavioral, performance, and temporal) and to transform the defined set of technical requirements into a set of logical decomposition models and their associated set of derived technical requirements for lower levels of the system and for input to the design solution definition process.

3.2.5 Design Solution Definition Process

3.2.5.1 Program/Project Managers shall identify and implement an ETA-approved Design Solution Definition process to include activities, requirements, guidelines, and documentation, as tailored and customized for designing product solution definitions within the applicable product layer that satisfy the derived technical requirements [SE-10].

3.2.5.2 The Design Solution Definition process is used to translate the outputs of the logical decomposition process into a design solution definition that is in a form consistent with the product life-cycle phase and the product layer location in the system structure, and that will satisfy phase success criteria. This includes transforming the defined logical decomposition models and their associated sets of derived technical requirements into alternative solutions, analyzing each alternative to select a preferred alternative, and fully defining the preferred alternative into a final design solution definition that will satisfy the requirements.

3.2.5.3 These design solution definitions will be used for generating end products, either by using the product implementation process or product integration process, as a function of the position of the product layer in the system structure and whether there are additional subsystems of the end product that need to be defined. The output definitions from the design solution (end product specifications) will be used for conducting product verification.

3.2.6 Product Implementation Process

3.2.6.1 Program/Project Managers shall identify and implement an ETA-approved Product Implementation process to include activities, requirements, guidelines, and documentation, as tailored and customized for implementation of a design solution definition by making, buying, or reusing an end product of the applicable product layer [SE-11].

3.2.6.2 The Product Implementation Process is used to generate a specified product of a product layer through buying, making, or reusing in a form consistent with the product life-cycle phase success criteria and that satisfies the design solution definition (e.g., drawings, specifications).

3.2.7 Product Integration Process

3.2.7.1 Program/Project Managers shall identify and implement an ETA-approved Product Integration process to include activities, requirements, guidelines, and documentation, as tailored and customized for the integration of lower level products into an end product of the applicable product layer in accordance with its design solution definition [SE-12].

3.2.7.2 The Product Integration Process is used to transform lower level, verified and validated end products into the desired end product of the higher level product layer through assembly and integration.

3.2.8 Product Verification Process

3.2.8.1 Program/Project Managers shall identify and implement an ETA-approved Product Verification process to include activities, requirements/specifications, guidelines, and documentation, as tailored and customized for verification of end products generated by the product implementation process or product integration process against their design solution definitions [SE-13].

3.2.8.2 The Product Verification process is used to demonstrate that an end product generated from product implementation or product integration conforms to its requirements as a function of the product life-cycle phase and the location of the product layer end product in the system structure. Special attention is given to demonstrating satisfaction of the MOPs defined for each MOE during performance of the technical requirements definition process.

3.2.9 Product Validation Process

3.2.9.1 Program/Project Managers shall identify and implement an ETA-approved Product Validation process to include activities, requirements, guidelines, and documentation, as tailored and customized for validation of end products generated by the product implementation process or product integration process against their stakeholder expectations [SE-14].

3.2.9.2 The Product Validation process is used to confirm that a verified end product generated by product implementation or product integration fulfills (satisfies) its intended use when placed in its intended environment and to ensure that any anomalies discovered during validation are appropriately resolved prior to delivery of the product (if validation is done by the supplier of the product) or prior to integration with other products into a higher level assembled product (if validation is done by the receiver of the product). The validation is done against the set of baselined stakeholder expectations. Special attention should be given to demonstrating satisfaction of the MOEs identified during performance of the stakeholder expectations definition process. The type of product validation is a function of the form of the product and product life-cycle phase and in accordance with an applicable customer agreement.

3.2.10 Product Transition Process

3.2.10.1 Program/Project Managers shall identify and implement an ETA-approved Product Transition process to include activities, requirements, guidelines, and documentation, as tailored and customized for transitioning end products to the next higher level product layer customer or user [SE-15].

3.2.10.2 The Product Transition process is used to transition a verified and validated end product that has been generated by product implementation or product integration to the customer at the next level in the system structure for integration into an end product or, for the top-level end product, to the intended end user. The form of the product transitioned will be a function of the product life-cycle phase and the location within the system structure of the product layer in which the end product exists.

3.2.11 Technical Planning Process

3.2.11.1 Program/Project Managers shall identify and implement an ETA-approved Technical Planning process to include activities, requirements, guidelines, and documentation, as tailored and customized for planning the technical effort [SE-16].

3.2.11.2 The Technical Planning process is used to plan for the application and management of each common technical process, including tailoring of organizational requirements and requirements specified in this NPR. It is also used to identify, define, and plan the technical effort applicable to the product life-cycle phase for product layer location within the system structure and to meet program/project objectives and product life-cycle phase success criteria. A key document generated by this process is the SEMP (See Chapter 6).

3.2.12 Requirements Management Process

3.2.12.1 Program/Project Managers shall identify and implement an ETA-approved Requirements Management process to include activities, requirements, guidelines, and documentation, as tailored and customized for management of requirements throughout the system life-cycle [SE-17].

3.2.12.2 The Requirements Management process is used to:

a. Manage the product requirements identified, baselined, and used in the definition of the product layer products during system design.

b. Provide bidirectional traceability back to the top product layer requirements.

c. Manage the changes to established requirement baselines over the life-cycle of the system products.
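Bidirectional traceability can be pictured as a set of parent links that is navigable in both directions: upward from any requirement to the top product layer, and downward from each top-level requirement to the requirements derived from it. The sketch below assumes, hypothetically, that each requirement carries a single parent ID (real requirements databases often allow several parents); `children_of`, `trace_up`, and `trace_gaps` are illustrative names, not part of any NASA tool.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set

# Each requirement ID maps to its parent ID (None for top product layer requirements).
ParentMap = Dict[str, Optional[str]]


def children_of(parent_of: ParentMap) -> Dict[str, List[str]]:
    """Invert child-to-parent links to obtain the downward (parent-to-child) trace."""
    down: Dict[str, List[str]] = defaultdict(list)
    for child, parent in parent_of.items():
        if parent is not None:
            down[parent].append(child)
    return dict(down)


def trace_up(req_id: str, parent_of: ParentMap) -> List[str]:
    """Follow parent links from req_id toward the top product layer."""
    path = [req_id]
    while parent_of.get(path[-1]) is not None:
        parent = parent_of[path[-1]]
        if parent in path:
            raise ValueError(f"circular trace detected at {parent}")
        path.append(parent)
    return path


def trace_gaps(parent_of: ParentMap, top_level: Set[str]) -> Dict[str, List[str]]:
    """Report trace gaps in both directions."""
    down = children_of(parent_of)
    return {
        # Upward gap: the parent ID is not a known requirement.
        "broken_links": sorted(c for c, p in parent_of.items() if p is not None and p not in parent_of),
        # Upward gap: no parent, yet not a top product layer requirement.
        "orphans": sorted(c for c, p in parent_of.items() if p is None and c not in top_level),
        # Downward gap: a top-level requirement that was never flowed down.
        "not_flowed_down": sorted(t for t in top_level if t not in down),
    }


parent_of = {"MIS-1": None, "MIS-2": None, "SYS-1": "MIS-1", "SYS-2": "MIS-1",
             "SUB-1": "SYS-1", "SUB-2": "SYS-9", "SUB-3": None}
print(trace_up("SUB-1", parent_of))  # ['SUB-1', 'SYS-1', 'MIS-1']
print(trace_gaps(parent_of, {"MIS-1", "MIS-2"}))
# {'broken_links': ['SUB-2'], 'orphans': ['SUB-3'], 'not_flowed_down': ['MIS-2']}
```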

3.2.13 Interface Management Process

3.2.13.1 Program/Project Managers shall identify and implement an ETA-approved Interface Management process to include activities, requirements, guidelines, and documentation, as tailored and customized for management of the interfaces defined and generated during the application of the system design processes [SE-18].

3.2.13.2 The Interface Management process is used to:

a. Establish and use formal interface management to assist in controlling system product development efforts when the efforts are divided between Government programs, contractors, and/or geographically diverse technical teams within the same program or project.

b. Maintain interface definition and compliance among the end products and enabling products that compose the system, as well as with other systems with which the end products and enabling products will interoperate.

3.2.14 Technical Risk Management Process

3.2.14.1 Program/Project Managers shall identify and implement an ETA-approved Technical Risk Management process to include activities, requirements, guidelines, and documentation, as tailored and customized for management of the risk identified during the technical effort [SE-19].

3.2.14.2 The Technical Risk Management process is used to make risk-informed decisions and examine, on a continuing basis, the potential for deviations from the program/project plan and the consequences that could result should they occur. This enables risk-handling activities to be planned and invoked as needed across the life of the program or project to mitigate impacts on achieving product life-cycle phase success criteria and meeting technical objectives. The technical team supports the development of potential health and medical, safety, cost, and schedule impacts for identified technical risks and any associated mitigation strategies. NPR 8000.4, Agency Risk Management Procedural Requirements, is to be used as a source document for defining this process, and NPR 8705.5, Technical Probabilistic Risk Assessment (PRA) Procedures for Safety and Mission Success for NASA Programs and Projects, provides one means of identifying and assessing technical risk. While the focus of this process is the management of technical risk, the highly interdependent nature of health and medical, safety, technical, cost, and schedule risks requires the broader program/project team to consistently address risk management with an integrated approach. NASA/SP-2011-3422, NASA Risk Management Handbook, provides guidance for managing risk in an integrated fashion.
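The likelihood-and-consequence reasoning described above is often summarized in a risk matrix. The sketch below assumes a 5x5 matrix with hypothetical 1-to-5 scales and hypothetical high/medium/low thresholds; actual scales, thresholds, and handling criteria come from NPR 8000.4 and the program/project risk management plan, not from this example. `TechnicalRisk`, `classify`, and `prioritize` are illustrative names only.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TechnicalRisk:
    risk_id: str
    likelihood: int   # 1 (very low) to 5 (very high); scale is an assumption
    consequence: int  # 1 (minimal) to 5 (severe); scale is an assumption

    def __post_init__(self) -> None:
        for name in ("likelihood", "consequence"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.consequence


def classify(risk: TechnicalRisk) -> str:
    """Hypothetical banding of a 5x5 likelihood-consequence matrix."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 6:
        return "medium"
    return "low"


def prioritize(risks: List[TechnicalRisk]) -> List[TechnicalRisk]:
    """Order risks for handling: highest score first, ties broken by consequence."""
    return sorted(risks, key=lambda r: (r.score, r.consequence), reverse=True)


risks = [TechnicalRisk("R-3", 1, 2), TechnicalRisk("R-1", 4, 4), TechnicalRisk("R-2", 2, 3)]
for r in prioritize(risks):
    print(r.risk_id, r.score, classify(r))
# R-1 16 high
# R-2 6 medium
# R-3 2 low
```

Breaking ties on consequence reflects one possible choice (favoring severe outcomes); a project could equally rank ties by likelihood or by handling cost.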

3.2.15 Configuration Management Process

3.2.15.1 Program/Project Managers shall identify and implement an ETA-approved Configuration Management process to include activities, requirements, guidelines, and documentation, as tailored and customized for configuration management [SE-20].

3.2.15.2 The Configuration Management process for end products, enabling products, and other work products placed under configuration control is used to:

a. Identify the items to be placed under configuration control.

b. Identify the configuration of the product or work product at various points in time.

c. Systematically control changes to the configuration of the product or work product.

d. Maintain the integrity and traceability of the configuration of the product or work product throughout its life.

e. Preserve the records of the product or work product configuration throughout its life-cycle, dispositioning them in accordance with NPR 1441.1, NASA Records Management Program Requirements.
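Activities (a) through (d) above can be illustrated with a small in-memory model. This is a hypothetical sketch only: `Baseline`, `ChangeRequest`, `UnapprovedChangeError`, and the boolean `approved` flag standing in for a configuration control board decision are all assumptions, and records disposition under NPR 1441.1 (activity e) is outside its scope.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


class UnapprovedChangeError(Exception):
    """Raised when a change request has not been approved."""


@dataclass(frozen=True)
class ChangeRequest:
    cr_id: str
    item_id: str
    new_content: str
    approved: bool  # hypothetical stand-in for a control board decision


@dataclass
class ConfigurationItem:
    item_id: str
    content: str
    version: int = 1
    # (version, content, authorizing change) for every configuration the item has had
    history: List[Tuple[int, str, str]] = field(default_factory=list)


class Baseline:
    def __init__(self) -> None:
        self.items: Dict[str, ConfigurationItem] = {}

    def identify(self, item_id: str, content: str) -> None:
        """(a) Place an item under configuration control at version 1."""
        if item_id in self.items:
            raise ValueError(f"{item_id} is already under configuration control")
        item = ConfigurationItem(item_id, content)
        item.history.append((item.version, content, "initial baseline"))
        self.items[item_id] = item

    def apply_change(self, cr: ChangeRequest) -> int:
        """(c) Only an approved change request may modify a controlled item."""
        if not cr.approved:
            raise UnapprovedChangeError(f"{cr.cr_id} has not been approved")
        item = self.items[cr.item_id]
        item.version += 1
        item.content = cr.new_content
        # (d) Record which change produced each configuration.
        item.history.append((item.version, cr.new_content, cr.cr_id))
        return item.version

    def configuration_at(self, item_id: str, version: int) -> str:
        """(b) Recover the configuration of an item at an earlier point."""
        for v, content, _ in self.items[item_id].history:
            if v == version:
                return content
        raise KeyError(f"{item_id} has no version {version}")


baseline = Baseline()
baseline.identify("ICD-001", "Rev A")
baseline.apply_change(ChangeRequest("CR-017", "ICD-001", "Rev B", approved=True))
print(baseline.items["ICD-001"].content)       # Rev B
print(baseline.configuration_at("ICD-001", 1))  # Rev A
```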

3.2.16 Technical Data Management Process

3.2.16.1 Program/Project Managers shall identify and implement an ETA-approved Technical Data Management process to include activities, requirements, guidelines, and documentation, as tailored and customized for management of the technical data generated and used in the technical effort [SE-21].

3.2.16.2 The Technical Data Management Process is used to plan for, acquire, access, manage, protect, and use data of a technical nature to support the total life-cycle of a system. This process is used to capture trade studies, cost estimates, technical analyses, reports, and other important information.

3.2.17 Technical Assessment Process

3.2.17.1 Program/Project Managers shall identify and implement an ETA-approved Technical Assessment process to include activities, requirements, guidelines, and documentation, as tailored and customized for making assessments of the progress of planned technical effort and progress toward requirements satisfaction [SE-22].

3.2.17.2 The Technical Assessment process is used to help monitor progress of the technical effort and provide status information for support of the system design, product realization, and technical management processes. A key aspect of the technical assessment process is the conduct of life-cycle and technical reviews throughout the system life-cycle in accordance with Chapter 5.

3.2.18 Decision Analysis Process

3.2.18.1 Program/Project Managers shall identify and implement an ETA-approved Decision Analysis process to include activities, requirements, guidelines, and documentation, as tailored and customized for making technical decisions [SE-23].

3.2.18.2 The Decision Analysis process, including processes for identification of decision criteria, identification of alternatives, analysis of alternatives, and alternative selection, is applied to technical issues to support their resolution. It considers relevant data (e.g., engineering performance, quality, and reliability) and associated uncertainties. Decision analysis is used throughout the system life-cycle to formulate candidate decision alternatives and evaluate their impacts on health and medical, safety, technical, cost, and schedule performance. NASA/SP-2010-576, NASA Risk-Informed Decision Making Handbook, provides guidance for analyzing decision alternatives in a risk-informed fashion.
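One simple way to make the identify-criteria, identify-alternatives, analyze, and select steps concrete is a weighted-sum comparison that carries a (low, high) score range per criterion as a crude stand-in for uncertainty. This is a swapped-in illustrative technique, not the method prescribed by this NPR or by NASA/SP-2010-576; the criteria, weights, scores, and the robustness test below are all hypothetical.

```python
from typing import Dict, List, Tuple

Range = Tuple[float, float]  # (low, high) score for one criterion; higher is better


def weighted_range(scores: Dict[str, Range], weights: Dict[str, float]) -> Range:
    """Combine per-criterion (low, high) scores into a weighted (low, high) total."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("criterion weights must sum to 1")
    low = sum(w * scores[c][0] for c, w in weights.items())
    high = sum(w * scores[c][1] for c, w in weights.items())
    return low, high


def rank(alternatives: Dict[str, Dict[str, Range]],
         weights: Dict[str, float]) -> Tuple[List[str], Dict[str, Range], bool]:
    """Rank alternatives by midpoint score and flag whether the leader is robust."""
    totals = {name: weighted_range(s, weights) for name, s in alternatives.items()}
    ordered = sorted(totals, key=lambda n: sum(totals[n]) / 2, reverse=True)
    # Robust only if the leader's worst case beats the runner-up's best case.
    robust = len(ordered) < 2 or totals[ordered[0]][0] > totals[ordered[1]][1]
    return ordered, totals, robust


# Hypothetical weights and 0-10 scores (a higher cost or schedule score means cheaper or faster).
weights = {"performance": 0.4, "safety": 0.3, "cost": 0.2, "schedule": 0.1}
alternatives = {
    "A": {"performance": (8, 9), "safety": (7, 8), "cost": (5, 6), "schedule": (6, 7)},
    "B": {"performance": (5, 6), "safety": (6, 7), "cost": (8, 9), "schedule": (8, 9)},
}
ordered, totals, robust = rank(alternatives, weights)
print(ordered, robust)  # ['A', 'B'] False
```

Here alternative A leads on midpoint score, but `robust` is `False` because A's low total (6.9) does not exceed B's high total (7.2). Overlapping bands like these suggest the ranking alone should not drive the selection and that further analysis or risk-informed deliberation is warranted.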

4.1 Introduction

4.1.1 Work contracted in support of programs and projects is critical to mission success. Inputs or requirements in support of a solicitation (such as a Request for Proposals (RFP)) typically include a Statement of Work, product requirements, an Independent Government Estimate, a Data Requirements List, a Deliverables List, and a Surveillance Plan. These should be developed with the risk posture of the program/project in mind and should fit within its cost and schedule constraints. In addition to developing the product requirements, a critical aspect of the solicitation is for the technical team to define the insight and oversight requirements. "Insight" is a monitoring activity, whereas "oversight" is an exercise of authority by the Government. The Federal Acquisition Regulation and the NASA Supplement to the Federal Acquisition Regulation govern the acquisition planning, contract formation, and contract administration process. Authority to interface with the contractor can be delegated only by the contracting officer. The activities listed in Section 4.2 will be coordinated with the cognizant contracting officer. Detailed definitions of insight and oversight are provided in 48 CFR Subpart 1846.4. As stated in Section 1.1.3, the requirements should be appropriately tailored and customized for system/product size, complexity, criticality, acceptable risk posture, and architectural level.

4.1.2 This chapter defines a minimum set of technical activities and requirements for a NASA program/project technical team to perform before contract award, during contract performance, and upon contract completion. These activities and requirements supplement the common technical process activities and requirements of Chapter 3. They are intended to enhance the outcome of the contracted effort and ensure the required integration between the work performed by the contractor and the program or project.

4.2.1 The NASA technical team shall define the engineering activities for the periods before contract award, during contract performance, and upon contract completion in the SEMP or other equivalent program/project documentation [SE-24].

4.2.2 The content of Appendix J of NASA/SP-2016-6105 should be used as a guide in the development of the SEMP or other equivalent program/project documentation.

4.2.3 The NASA technical team shall establish the technical inputs to the solicitation appropriate for the product(s) to be developed, including product requirements and Statement of Work tasks [SE-25].

4.2.3.1 The technical team uses knowledge of the 17 common technical processes to identify products and desired practices to include in the solicitation.

4.2.4 The NASA technical team shall determine the technical work products to be delivered by the offeror or contractor, to include contractor documentation that specifies the contractor's SE approach to the scope of activities described by the 17 common technical processes [SE-26].

4.2.5 The NASA technical team shall provide the requirements for technical insight and oversight activities planned in the NASA SEMP or other equivalent program/project documentation to the contracting officer for inclusion in the solicitation [SE-27].

4.2.6 Care should be taken that no requirements or solicitation information is divulged prior to the release of the solicitation.

4.2.7 The NASA technical team shall participate in the evaluation of offeror proposals in accordance with applicable NASA and Center source selection procedures [SE-28].

4.2.7.1 This requirement ensures that the proposal addresses the requirements, products, and processes specified in the solicitation.

4.3.1 The NASA technical team, under the authority of the contracting officer, shall perform the technical insight and oversight activities established in the contract, including modifications to the original contract [SE-29].

4.3.2 The requirements levied on the technical team in Section 4.2 for establishing the contract apply to any modifications or additions to the original contract.

4.4.1 The NASA technical team shall participate in the review(s) to finalize Government acceptance of the deliverables [SE-30].

4.4.2 The NASA technical team shall participate in product transition as defined in the NASA SEMP or other equivalent program/project documentation [SE-31].

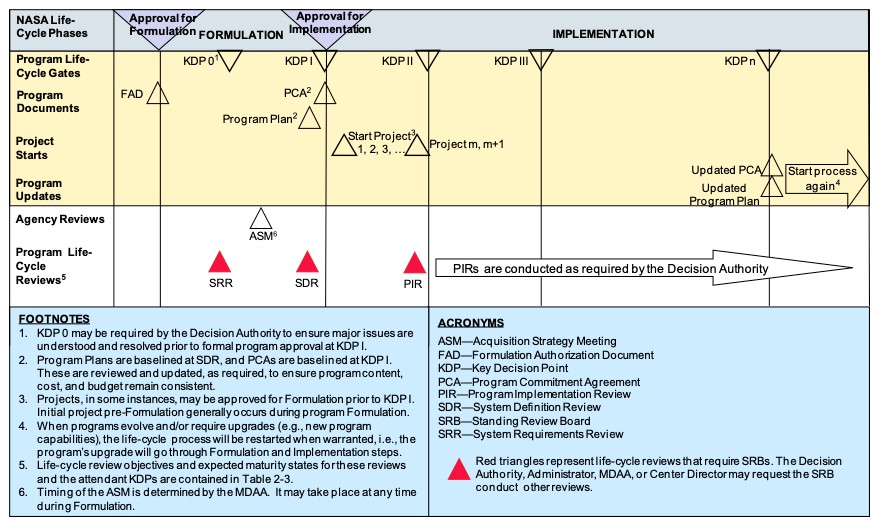

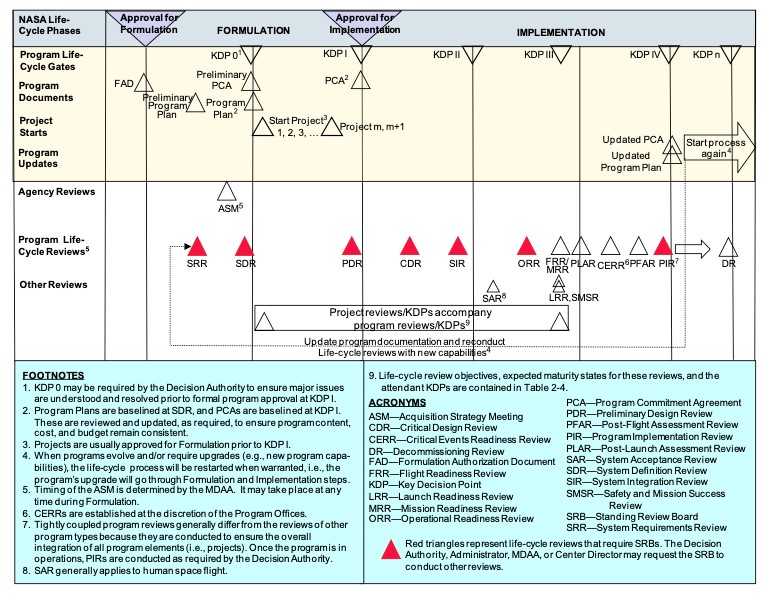

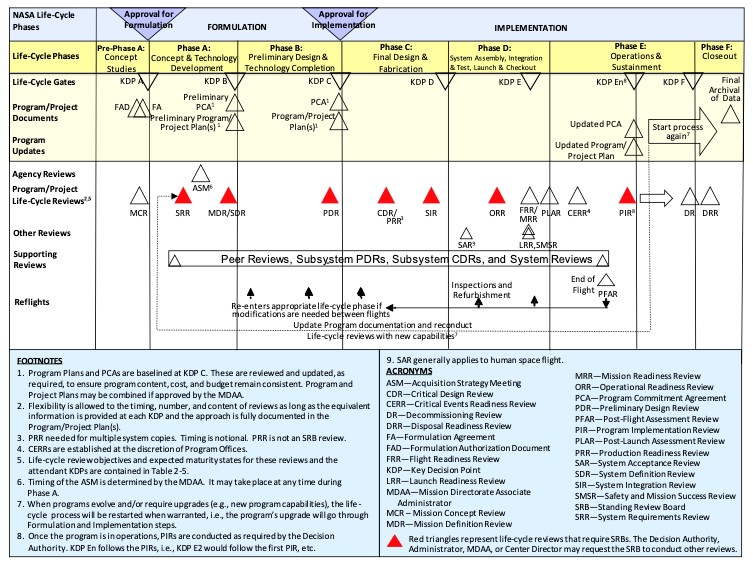

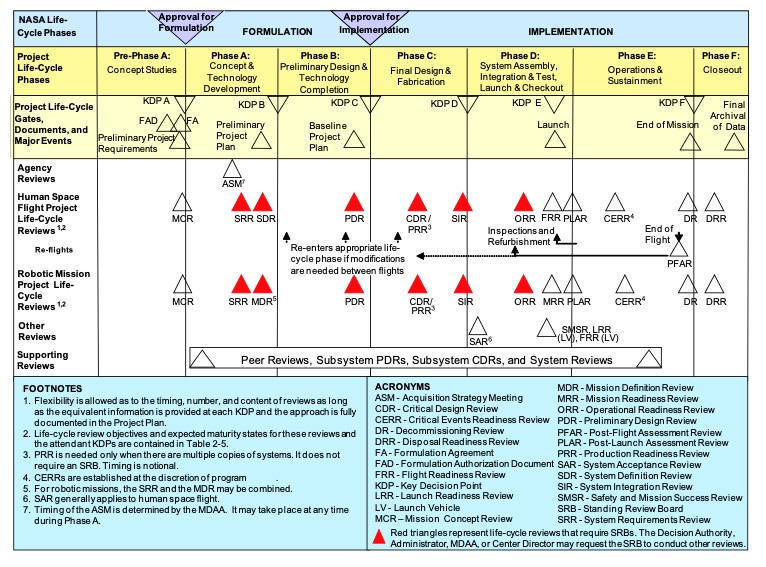

5.1.1 NPR 7120.5 defines four types of programs that may contain projects:

a. Uncoupled programs.

b. Loosely coupled programs.

c. Tightly coupled programs.

d. Single-project programs.

5.1.1.1 The life-cycle a program/project uses depends on the type of program/project and on whether it produces products for space flight, advanced technology development, information technology, infrastructure, or other applications.

5.1.1.2 A specific life-cycle may be required by associated project management NPRs. For example, NPR 7120.5 defines the life-cycles for space flight programs and projects, and NPR 7120.7 defines life-cycles for IT. For Announcement of Opportunity (AO) driven projects, refer to NPR 7120.5, Section 2.2.7.1. For purposes of illustration, life-cycles from NPR 7120.5 are repeated here in Figures 5-1 through 5-4.

5.1.2 The application of the common technical processes within each life-cycle phase produces technical results and work products that provide inputs to life-cycle and technical reviews and support informed management decisions for progressing to the next life-cycle phase.

5.1.3 Each program and project will perform the life-cycle reviews as required by or tailored in accordance with their governing program/project management NPR, applicable Center policies and procedures, and the requirements of this document. These reviews provide a periodic assessment of a program or project's technical and programmatic status and health at key points in the life-cycle. The technical team provides the technical inputs to be incorporated into the overall program/project review package. Appendix G provides guidelines for the entrance and success criteria for each of these reviews with a focus on the technical products. Additional programmatic work products may also be required by the governing program/project NPR. Programs/projects are expected to tailor the reviews and customize the entrance/success criteria as appropriate to the size/complexity and unique needs of their activities. Approved tailoring is captured in the SEMP or other equivalent program/project documents.

5.1.4 The progress between life-cycle phases is marked by key decision points (KDPs). At each KDP, management examines the maturity of the technical aspects of the program/project. For example, management evaluates the adequacy of the resources (staffing and funding) allocated to the planned technical effort, the technical maturity of the product, the management of technical and nontechnical internal issues and risks, and the responsiveness to any changes in stakeholder expectations. If the technical and management aspects of the program/project are satisfactory, including the implementation of corrective actions, then the program/project can be approved by the designated Decision Authority to proceed to the next phase. Program and project management NPRs (NPR 7120.5, NPR 7120.7, and NPR 7120.8) contain further details relating to life-cycle progress.

Note: For example only. Refer to Figure 2-2 in NPR 7120.5 for the official life cycle. The Table 2-3 referenced in Footnote 5 above is in NPR 7120.5.

Figure 5-1 - NASA Uncoupled and Loosely Coupled Program Life-Cycle

Note: For example only. Refer to Figure 2-3 in NPR 7120.5 for the official life cycle. The Table 2-4 referenced in Footnote 5 above is in NPR 7120.5.

Figure 5-2 - NASA Tightly Coupled Program Life-Cycle

Note: For example only. Refer to Figure 2-4 in NPR 7120.5 for the official life cycle. The Table 2-5 referenced in Footnote 5 above is in NPR 7120.5.

Figure 5-3 - NASA Single-Project Program Life-Cycle

Note: For example only. Refer to Figure 2-5 in NPR 7120.5 for the official life cycle. The Table 2-5 referenced in Footnote 2 above is in NPR 7120.5.

Figure 5-4 - The NASA Project Life-Cycle

5.1.5 Life-cycle reviews are event based and occur when the entrance criteria for the applicable review are satisfied. (Appendix G provides guidance.) They occur based on the maturity of the relevant technical baseline as opposed to calendar milestones (e.g., the quarterly progress review, the yearly summary).
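The event-based rule can be sketched as a gate that opens only when every entrance criterion is met, with no reference to a date. This is a hypothetical illustration. The `ReviewGate` class and any criterion names passed to it are assumptions, and the actual entrance criteria for each review come from Appendix G and the governing NPR, as tailored.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewGate:
    """Entrance-criteria status for one life-cycle review (criterion text -> met?)."""
    review: str
    entrance_criteria: dict[str, bool] = field(default_factory=dict)

    def unmet(self) -> list[str]:
        """Entrance criteria not yet satisfied, sorted for stable reporting."""
        return sorted(c for c, met in self.entrance_criteria.items() if not met)

    def ready(self) -> bool:
        # Event-based: readiness depends only on the criteria, never on a calendar date.
        # A gate with no recorded criteria is treated as not ready (a conservative choice).
        return bool(self.entrance_criteria) and not self.unmet()
```

Treating an empty criteria set as "not ready" is a design choice of this sketch. It prevents a review from being scheduled before its entrance criteria have been defined.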

5.1.6 Accurate assessment of technology maturity is critical to technology advancement and its subsequent incorporation into operational products. The program/project ensures that Technology Readiness Levels (TRLs) and/or other measures of technology maturity are used to assess maturity throughout the life-cycle of the program/project. When other measures of technology maturity are used, they should be mapped back to TRLs. TRLs for hardware and software are defined in Appendix E. Moving to higher levels of technology maturity requires an assessment of a range of capabilities for design, analysis, manufacture, and test. Measures for assessing technology maturity are described in NASA/SP-2016-6105. The initial technology maturity assessment is done in the Formulation phase and updated at program/project status reviews. The program/project approach for maturing and assessing technology is typically captured in a Technology Development Plan, the SEMP, or other equivalent program/project documentation.

5.2.1 Planning

5.2.1.1 The technical team shall develop and document plans for life-cycle and technical reviews for use in the program/project planning process [SE-32].

5.2.1.2 The life-cycle and technical review schedule, as documented in the SEMP or other equivalent program/project documentation, will be reflected in the overall program/project plan. The results of each life-cycle and technical review will be used to update the technical review plan as part of the SEMP (or other equivalent program/project documentation) update process. The review plans, data, and results should be maintained and dispositioned as Federal Records.

5.2.1.3 The technical team ensures that system aspects interfacing with crew or human operators (e.g., users, maintainers, assemblers, and ground support personnel) are included in all life-cycle and technical reviews and that HSI requirements are implemented. Additional HSI guidance is provided in NASA/SP-2015-3709 and NASA/SP-2016-6105/SUPPL Expanded Guidance for NASA Systems Engineering Volumes 1 and 2.

5.2.1.4 The technical team ensures that system aspects represented or implemented in software are included in all life-cycle and technical reviews and that all software review requirements are implemented. Software review requirements are provided in NPR 7150.2, with guidance provided in NASA-HDBK-2203, NASA Software Engineering Handbook.

5.2.1.5 The technical team shall participate in the life-cycle and technical reviews as indicated in the governing program/project management NPR [SE-33]. Additional description of technical reviews is provided in NASA/SP-2016-6105, NASA Systems Engineering Handbook and in NASA/SP-2014-3705, NASA Spaceflight Program & Project Management Handbook. (For requirements on program and project life cycles and management reviews, see the appropriate NPR, e.g., NPR 7120.5.)

5.2.2 Conduct

5.2.2.1 The technical team shall participate in the development of entrance and success criteria for each of the respective reviews [SE-34]. The technical team should utilize the guidance defined in Appendix G as well as Center best practices for defining entrance and success criteria.

5.2.2.2 The technical team shall provide the following minimum products at the associated life-cycle review, at the indicated maturity level. If the associated life-cycle review is not held, the technical team will need to seek a waiver or deviation to tailor these requirements. If the associated life-cycle review is held but combined with other life-cycle reviews or resequenced, this is considered customization and no waiver is required, but the approach should still be documented in the SEMP or Review Plan for clarity.

a. Mission Concept Review (MCR):

(1) Baselined stakeholder identification and expectation definitions [SE-35].

(2) Baselined concept definition [SE-36].

(3) Approved MOE definition [SE-37].

b. System Requirements Review (SRR):

(1) Baselined SEMP (or other equivalent program/project documentation) for projects, single-project programs, and one-step AO programs [SE-38].

(2) Baselined requirements [SE-39].

c. Mission Definition Review/System Definition Review (MDR/SDR):

(1) Approved TPM definitions [SE-40].

(2) Baselined architecture definition [SE-41].

(3) Baselined allocation of requirements to next lower level [SE-42].

(4) Initial trend of required leading indicators [SE-43].

(5) Baseline SEMP (or other equivalent program/project documentation) for uncoupled, loosely coupled, tightly coupled, and two-step AO programs [SE-44].

d. Preliminary Design Review (PDR):

(1) Preliminary design solution definition [SE-45].

e. Critical Design Review (CDR):

(1) Baseline detailed design [SE-46].

f. System Integration Review (SIR):

(1) Updated integration plan [SE-47].

(2) Preliminary Verification and Validation (V&V) results [SE-48].

g. Operational Readiness Review (ORR):

(1) [SE-49] deleted.

(2) [SE-50] deleted.

(3) Preliminary decommissioning plans [SE-51].

h. Flight Readiness Review (FRR):

(1) Baseline disposal plans [SE-52].

(2) Baseline V&V results [SE-53].

(3) Final certification for flight/use [SE-54].

i. Decommissioning Review (DR):

(1) Baseline decommissioning plans [SE-55].

j. Disposal Readiness Review (DRR):

(1) Updated disposal plans [SE-56].
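As an illustration, the minimum products listed above can be held in a lookup table keyed by review, so that a team can see which items are still outstanding. This is a hypothetical sketch. The table is transcribed from Section 5.2.2.2, with deleted items SE-49 and SE-50 omitted. The names `REQUIRED_PRODUCTS`, `missing_products`, `ReviewDisposition`, and `needs_waiver` are assumptions, and the sketch does not model the program-type applicability of SE-38 and SE-44. The waiver check encodes the rule stated above: a review that is not held requires a waiver or deviation, while a combined or resequenced review is customization.

```python
from enum import Enum

# Minimum technical products per life-cycle review, transcribed from Section 5.2.2.2.
# Deleted requirements SE-49 and SE-50 are omitted; the program/project-type
# applicability conditions on SE-38 and SE-44 are not modeled.
REQUIRED_PRODUCTS: dict[str, list[tuple[str, str]]] = {
    "MCR": [("SE-35", "Baselined stakeholder identification and expectation definitions"),
            ("SE-36", "Baselined concept definition"),
            ("SE-37", "Approved MOE definition")],
    "SRR": [("SE-38", "Baselined SEMP (projects, single-project programs, one-step AO programs)"),
            ("SE-39", "Baselined requirements")],
    "MDR/SDR": [("SE-40", "Approved TPM definitions"),
                ("SE-41", "Baselined architecture definition"),
                ("SE-42", "Baselined allocation of requirements to next lower level"),
                ("SE-43", "Initial trend of required leading indicators"),
                ("SE-44", "Baseline SEMP (uncoupled, loosely/tightly coupled, two-step AO programs)")],
    "PDR": [("SE-45", "Preliminary design solution definition")],
    "CDR": [("SE-46", "Baseline detailed design")],
    "SIR": [("SE-47", "Updated integration plan"),
            ("SE-48", "Preliminary V&V results")],
    "ORR": [("SE-51", "Preliminary decommissioning plans")],
    "FRR": [("SE-52", "Baseline disposal plans"),
            ("SE-53", "Baseline V&V results"),
            ("SE-54", "Final certification for flight/use")],
    "DR": [("SE-55", "Baseline decommissioning plans")],
    "DRR": [("SE-56", "Updated disposal plans")],
}


class ReviewDisposition(Enum):
    HELD = "held as planned"
    COMBINED_OR_RESEQUENCED = "customization; document in the SEMP or Review Plan"
    NOT_HELD = "tailoring; waiver or deviation required"


def missing_products(review: str, delivered: set[str]) -> list[str]:
    """SE requirement IDs required at `review` that are absent from `delivered`."""
    return [se_id for se_id, _ in REQUIRED_PRODUCTS[review] if se_id not in delivered]


def needs_waiver(disposition: ReviewDisposition) -> bool:
    """Only a review that is not held at all calls for a waiver or deviation."""
    return disposition is ReviewDisposition.NOT_HELD
```

For example, a team holding the SIR with only the updated integration plan (SE-47) in hand would see SE-48, the preliminary V&V results, reported as missing.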

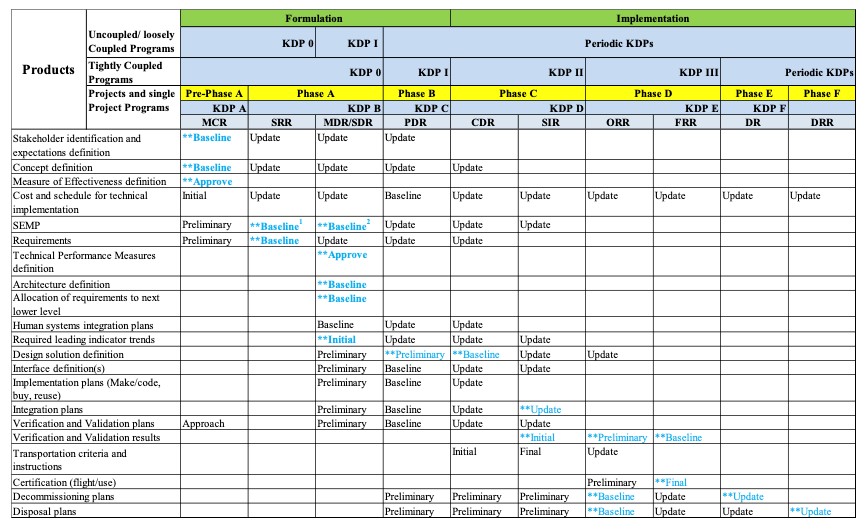

5.2.2.3 Table 5-1 shows the maturity of primary SE work products at the associated life-cycle reviews for all types and sizes of programs/projects. The required SE products identified above are marked with "**" in the table. For further description of the primary SE work products, refer to Appendix G. For additional guidance on software product maturity for program/project life-cycle reviews, refer to NASA-HDBK-2203. Additional programmatic work products are required by the governing program/project management NPRs but are not listed here.

5.2.2.4 The expectation for work products identified as "baselined" in Section 5.2.2.2 and Table 5-1 is that they will be at least final drafts going into the designated life-cycle review. Subsequent to the review, the final draft will be updated in accordance with approved review comments, Review Item Discrepancies (RID), or Requests for Action (RFA) and formally baselined.
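The path from final draft to formal baseline described above can be sketched as a small state model. The three maturity states, the `WorkProduct` class, and any RID/RFA identifiers used with it are hypothetical simplifications; Appendix F defines the actual maturity terms.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Maturity(IntEnum):
    # Simplified, hypothetical ordering; Appendix F defines the actual terms.
    DRAFT = 1
    FINAL_DRAFT = 2
    BASELINED = 3


@dataclass
class WorkProduct:
    name: str
    maturity: Maturity = Maturity.DRAFT
    # IDs of approved RIDs/RFAs that must be incorporated before baselining.
    open_actions: set[str] = field(default_factory=set)

    def ready_for_review(self) -> bool:
        """A work product to be baselined must be at least a final draft going into the review."""
        return self.maturity >= Maturity.FINAL_DRAFT

    def record_review_actions(self, action_ids: set[str]) -> None:
        self.open_actions |= action_ids

    def close_action(self, action_id: str) -> None:
        # Raises KeyError if the action was never recorded.
        self.open_actions.remove(action_id)

    def baseline(self) -> None:
        """Formally baseline after the review, once all approved actions are closed."""
        if self.maturity < Maturity.FINAL_DRAFT:
            raise ValueError(f"{self.name} is not at final-draft maturity")
        if self.open_actions:
            raise ValueError(f"{self.name} has open actions: {sorted(self.open_actions)}")
        self.maturity = Maturity.BASELINED
```

Refusing to baseline while any approved RID or RFA remains open mirrors the sequence in 5.2.2.4: update the final draft per the approved comments, then baseline.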

5.2.2.5 Terms for maturity levels of technical work products identified in this section are addressed in detail in Appendix F.