![[NASA Logo]](../Images/nasaball.gif)

NASA Procedures and Guidelines

This Document is Obsolete and Is No Longer Used.

P.1 Purpose

P.2 Applicability

P.3 Authority

P.4 Applicable Documents and Forms

P.5 Measurement/Verification

P.6 Cancellation

1.1 Background

1.2 Framework for Systems Engineering Procedural Requirements

1.3 Framework for Systems Engineering Capability

1.4 Document Organization

2.1 Roles and Responsibilities

2.2 Tailoring and Customization

3.1 Introduction

3.2 Requirements for the Common Technical Processes

4.1 Introduction

4.2 Activities Prior to Contract Award

4.3 During Contract Performance

4.4 Contract Completion

5.1 Life Cycle

5.2 Life-cycle and Technical Review Requirements

5.3 Completion of Life-cycle Reviews

6.1 Systems Engineering Management Plan Function

6.2 Roles and Responsibilities

H.1 Compliance Matrix for Centers

H.2 Compliance Matrix for Programs/Projects

Figure 1-1 - Hierarchy of Related Documents

Figure 1-2 - Documentation Relationships

Figure 1-3 - SE Framework

Figure 3-1 - Systems Engineering (SE) Engine

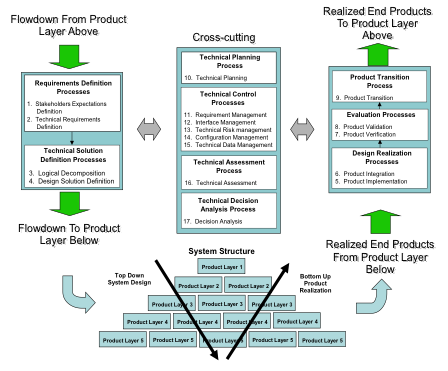

Figure 3-2 - Application of SE Engine Common Technical Processes Within System Structure

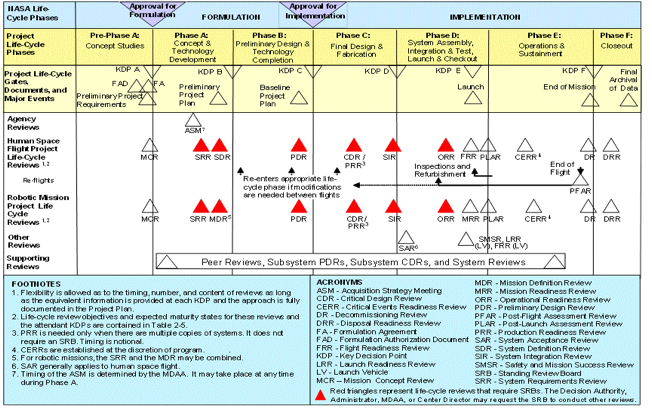

Figure 5-1 - NASA Uncoupled and Loosely Coupled Program Life Cycle

Figure 5-2 - NASA Tightly Coupled Program Life Cycle

Figure 5-3 - NASA Single-Project Program Life Cycle

Figure 5-4 - The NASA Project Life Cycle

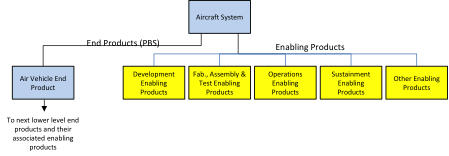

Figure A-1 - Enabling Product Relationship to End Products

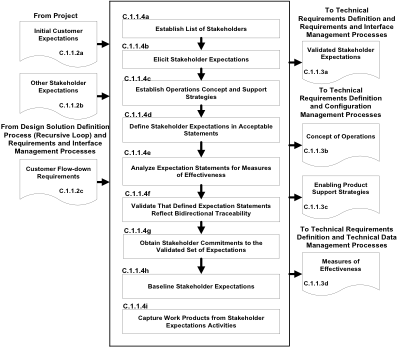

Figure C-1 - Stakeholder Expectation Definition Process

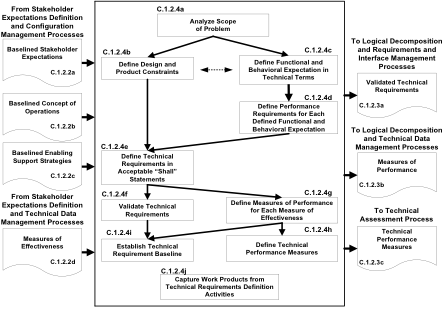

Figure C-2 - Technical Requirements Definition Process

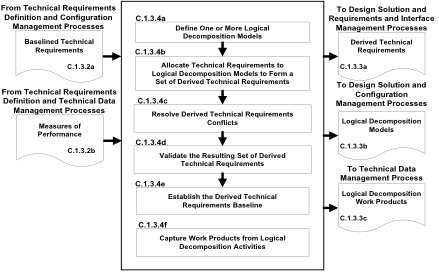

Figure C-3 - Logical Decomposition Process

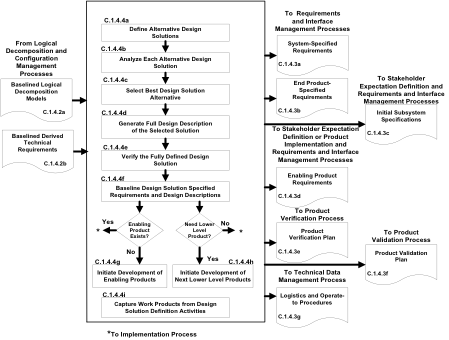

Figure C-4 - Design Solution Definition Process

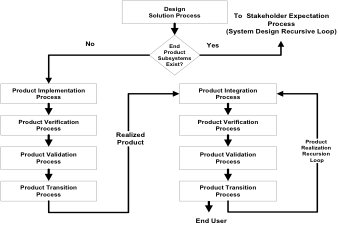

Figure C-5 - Sequencing of Product Realization Processes

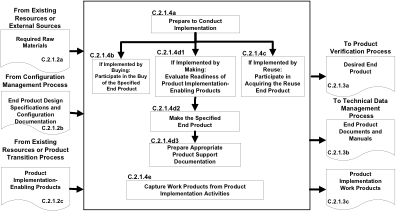

Figure C-6 - Product Implementation Process

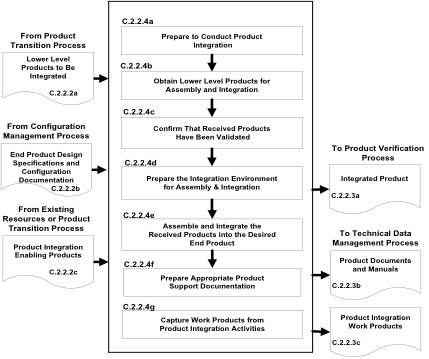

Figure C-7 - Product Integration Process

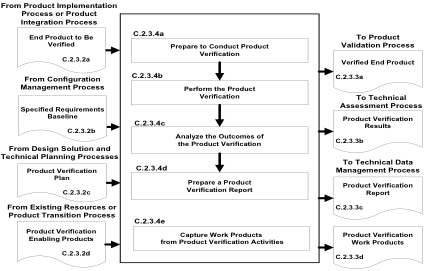

Figure C-8 - Product Verification Process

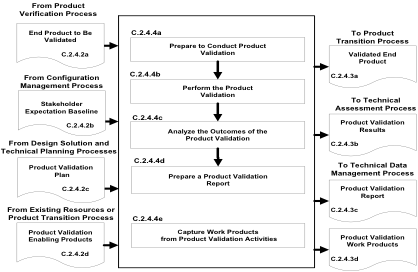

Figure C-9 - Product Validation Process

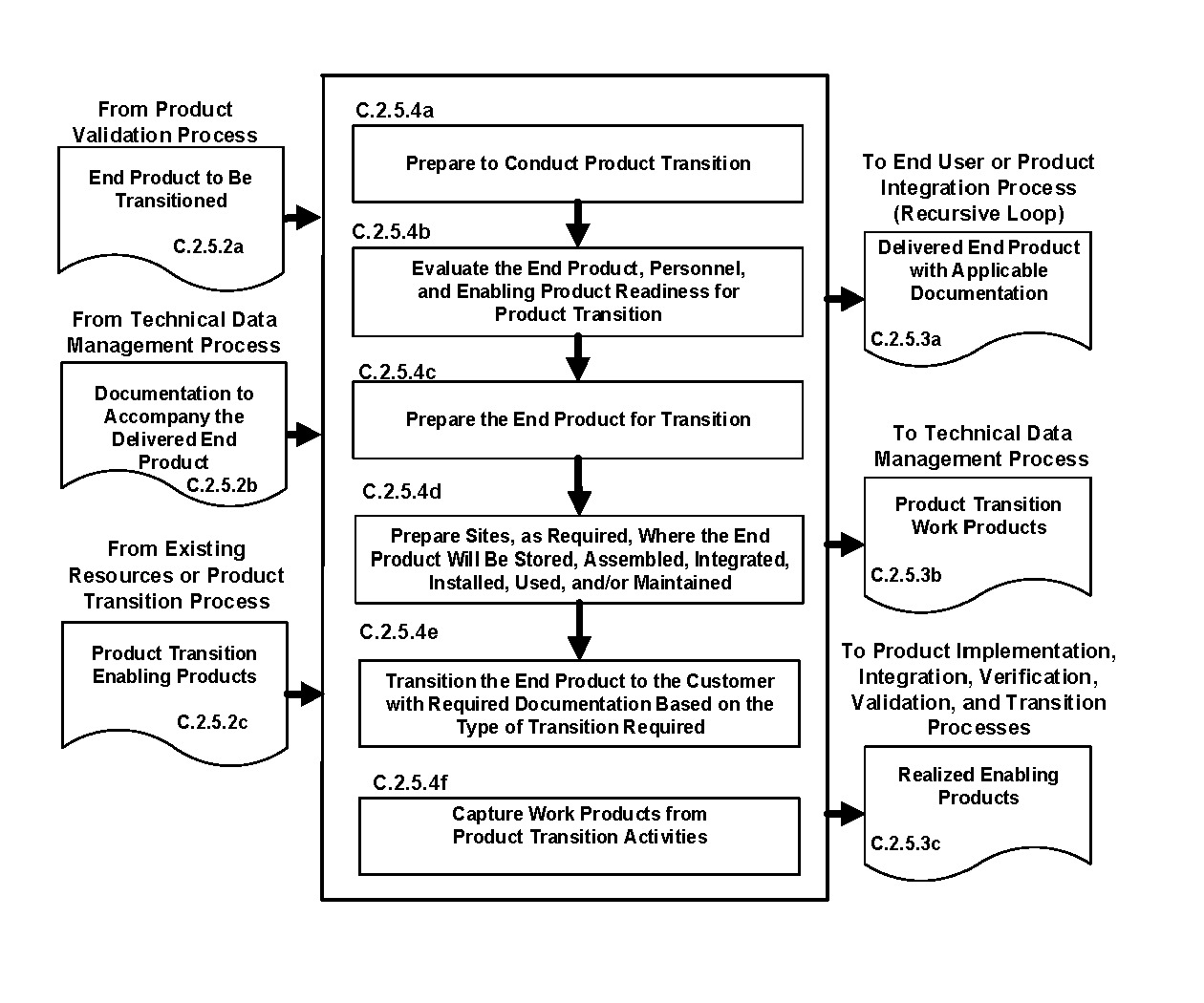

Figure C-10 - Product Transition Process

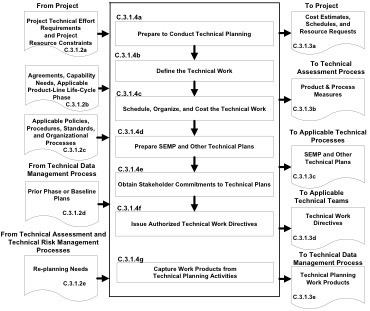

Figure C-11 - Technical Planning Process

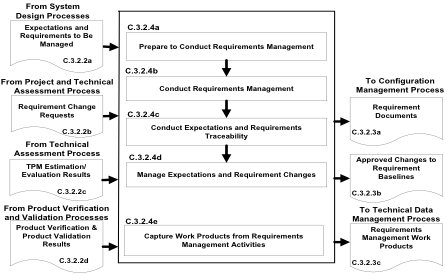

Figure C-12 - Requirements Management Process

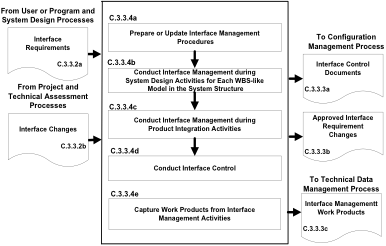

Figure C-13 - Interface Management Process

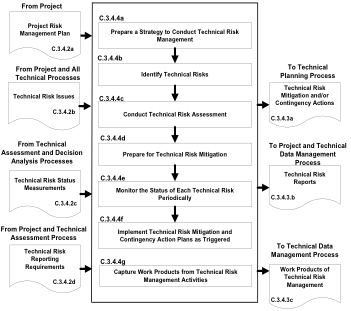

Figure C-14 - Technical Risk Management Process

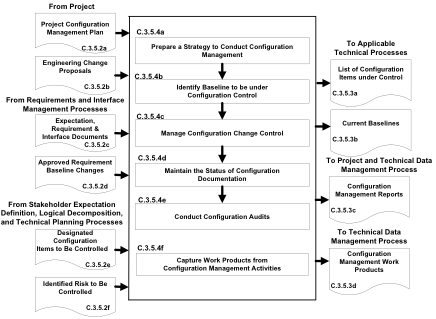

Figure C-15 - Configuration Management Process

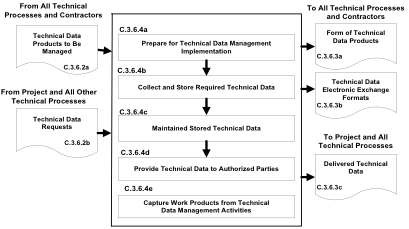

Figure C-16 - Technical Data Management Process

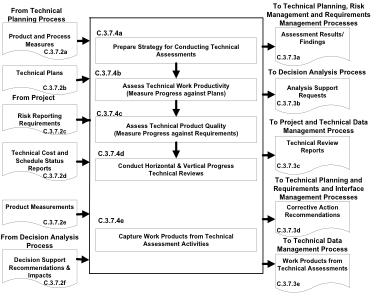

Figure C-17 - Technical Assessment Process

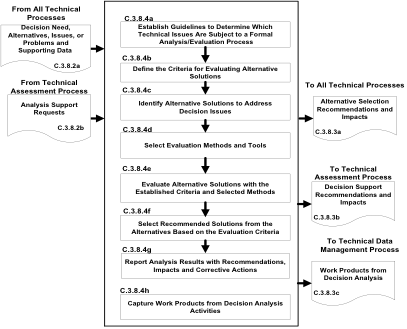

Figure C-18 - Decision Analysis Process

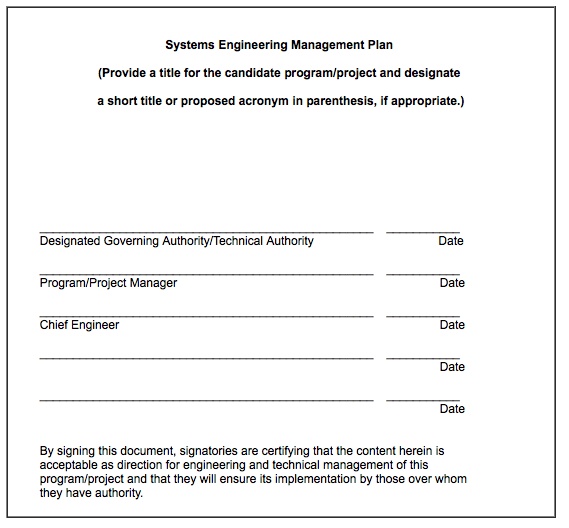

Figure D-1 - Systems Engineering Management Plan Title Page

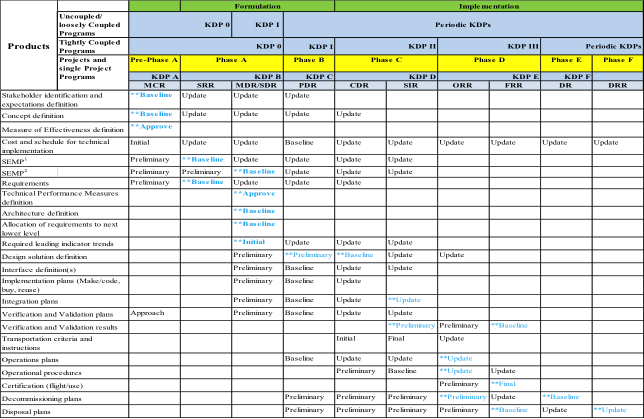

Table 5-1 - SE Product Maturity

Table G-1 - SRR Entrance and Success Criteria for a Program

Table G-2 - SDR Entrance and Success Criteria for a Program

Table G-3 - MCR Entrance and Success Criteria

Table G-4 - SRR Entrance and Success Criteria

Table G-5 - MDR/SDR Entrance and Success Criteria

Table G-6 - PDR Entrance and Success Criteria

Table G-7 - CDR Entrance and Success Criteria

Table G-8 - PRR Entrance and Success Criteria

Table G-9 - SIR Entrance and Success Criteria

Table G-10 - TRR Entrance and Success Criteria

Table G-11 - SAR Entrance and Success Criteria

Table G-12 - ORR Entrance and Success Criteria

Table G-13 - FRR Entrance and Success Criteria

Table G-14 - PLAR Entrance and Success Criteria

Table G-15 - CERR Entrance and Success Criteria

Table G-16 - PFAR Entrance and Success Criteria

Table G-17 - DR Entrance and Success Criteria

Table G-18 - Disposal Readiness Review Entrance and Success Criteria

Table G-19 - Peer Review Entrance and Success Criteria

Table G-20 - PIR/PSR Entrance and Success Criteria

| Chg # | Date | Description/Comments |
| --- | --- | --- |
| 1 | 03/07/14 | Appendix E - Replaced Technology Readiness Level (TRL) definition table with TRL definition table that was in NPR 7120.8, Appendix J. |
| 2 | 05/27/14 | Editorial changes to the definitions in Appendix A, Appendix E, TRL 6 Definition: System/sub-system model or prototype demonstration in an operational relevant environment, updates to figures and text in Appendix C and Table G-2. |
| 3 | 04/13/15 | Changes made to Chapter 5, Appendix G - updated Figure 5-2-NASA Tightly Coupled Program Life Cycle and Figure C-10-Product Transition Process to NPR 7120.5E version, Appendix I, added NPD 8081.1, NASA Chemical Rocket Propulsion Testing, Chapter 5, appendices - revised notes on life-cycle figures and made editorial and formatting corrections in appendices including the definition of Entrance Criteria. |
| 4 | 10/23/17 | Update with 1400 compliance, changes to Appendix G to add spectrum management guidance products. |

The purpose of this document is to clearly articulate and establish the requirements on the implementing organization for performing systems engineering. Systems Engineering (SE) is a logical systems approach performed by multidisciplinary teams to engineer and integrate NASA's systems to ensure NASA products meet customers' needs. Implementation of this systems approach will enhance NASA's core engineering capabilities while improving safety, mission success, and affordability. This systems approach is applied to all elements of a system (i.e., hardware, software, human system integration) and all hierarchical levels of a system over the complete project life cycle.

a. This NASA Procedural Requirement (NPR) applies to NASA Headquarters and NASA Centers, including component facilities and technical and service support centers. This NPR applies to NASA employees and NASA support contractors that use NASA processes to augment and support NASA technical work. This NPR applies to Jet Propulsion Laboratory, a Federally Funded Research and Development Center, other contractors, grant recipients, or parties to agreements only to the extent specified or referenced in the appropriate contracts, grants, or agreements. (See Chapter 4.)

b. This NPR applies to space flight, research and technology, and institutional programs and projects (including Information Technology (IT)), as appropriately tailored and customized for size and complexity. See Paragraph 2.2 for tailoring and customization descriptions.

c. In this document, a project is a specific investment having defined goals, objectives, requirements, life-cycle cost, a beginning, and an end. A project yields new or revised products or services that directly address NASA's strategic needs. Projects may be performed wholly in-house; by Government, industry, or academia partnerships; or through contracts with private industry.

d. The requirements enumerated in this document are applicable to all new programs and projects, as well as to all programs and projects currently in Formulation Phase as of the effective date of this document. (See NPR 7120.5, NPR 7120.7, and NPR 7120.8, as appropriate, for definitions of program phases.) This NPR also applies to programs and projects in their Implementation Phase as of the effective date of this document. For existing programs/projects regardless of their current phase, the Designated Governing Authority (DGA) may grant waivers/deviations allowing continuation of current practices that do not comply with all or sections of this NPR.

e. Many other discipline areas such as health and safety, medical, reliability, maintainability, quality assurance, IT, security, logistics, and environmental perform functions during project life-cycle phases that influence or are influenced by the engineering functions performed and need to be fully integrated with the engineering functions. The description of these disciplines and their relationship to the overall management life cycle are defined in other NASA directives; for example, the safety, reliability, maintainability, and quality assurance requirements are defined in the 8700 series of directives, and health and medical requirements are defined in the 8900 series. To that end, this document contains human systems integration language and requirements. (See NASA Standard 3001, NASA Space Flight Human System Standard, and NPR 8705.2, Human-Rating Requirements for Space Systems.)

f. In this NPR, all mandatory actions (i.e., requirements) are denoted by statements containing the term "shall." The requirements are explicitly shown as [SE-XX] for clarity and tracking purposes. The terms "may" or "can" denote discretionary privilege or permission, "should" denotes a good practice and is recommended but not required, "will" denotes expected outcome, and "are/is" denotes descriptive material.

g. In this NPR, all document citations are assumed to be the latest version, unless otherwise noted.
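The terminology convention in paragraph f lends itself to a simple mechanical check. The following is a minimal sketch, assuming a plain keyword match is adequate for illustration. The function name `classify`, the dictionary `TERM_MEANINGS`, and the sample sentence are hypothetical and are not part of this NPR; a keyword match cannot tell a directive "will" or "can" from other uses of the same word.

```python
import re

# Directive terms and their meanings as stated in paragraph f.
# The dictionary itself is an illustrative assumption, not an NPR artifact.
TERM_MEANINGS = {
    "shall": "mandatory action (requirement)",
    "may": "discretionary privilege or permission",
    "can": "discretionary privilege or permission",
    "should": "good practice, recommended but not required",
    "will": "expected outcome",
}

# Requirement identifiers appear in the text in the form [SE-XX], e.g. [SE-01].
REQ_TAG = re.compile(r"\[SE-(\d+)\]")


def classify(statement: str) -> dict:
    """Return the directive terms and [SE-XX] tags found in one statement."""
    words = set(re.findall(r"[a-z]+", statement.lower()))
    terms = [term for term in TERM_MEANINGS if term in words]
    tags = ["SE-" + number for number in REQ_TAG.findall(statement)]
    return {"terms": terms, "tags": tags, "is_requirement": "shall" in terms}


# Hypothetical sample sentence, paraphrasing the style used in this NPR.
result = classify("Center Directors shall establish policies [SE-01].")
print(result["is_requirement"], result["tags"])  # True ['SE-01']
```

A check of this kind could flag "shall" statements that lack an [SE-XX] identifier, but it is only a screening aid and does not replace review of the text.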

a. National Aeronautics and Space Act, as amended, 51 U.S.C. § 20113(a).

b. NPD 1000.0, NASA Governance and Strategic Management Handbook.

c. NPD 1000.3, The NASA Organization.

d. NPD 1001.0, NASA Strategic Plan.

e. NPD 7120.4, NASA Engineering and Program/Project Management Policy.

a. NPD 2570.5, NASA Electromagnetic Spectrum Management.

b. NPR 2570.1, NASA Radio Frequency (RF) Spectrum Management Manual.

c. NPR 7120.5, NASA Space Flight Program and Project Management Requirements.

d. NPR 7120.7, NASA Information Technology and Institutional Infrastructure Program and Project Management Requirements.

e. NPR 7120.8, NASA Research and Technology Program and Project Management Requirements.

f. NPR 7150.2, NASA Software Engineering Requirements.

g. NPR 8705.2, Human-Rating Requirements for Space Systems.

h. NASA-STD-3001, NASA Space Flight Human System Standard.

a. Compliance with this NPR will be documented by Center Directors in the SE NPR Compliance Matrix for Centers (Appendix H.1). Center self-assessment of compliance should be conducted approximately every two years or at the request of the Office of the Chief Engineer (OCE). A copy of the Compliance Matrix is forwarded to the OCE. In addition, the OCE conducts periodic assessments at the Centers to obtain feedback on the effectiveness of NPR 7123.1 to facilitate updating the NPR.

b. Compliance for programs and projects is documented by appending a completed Compliance Matrix for this NPR (see Appendix H.2) to the Systems Engineering Management Plan (SEMP).

NID 7123-69, NASA Interim Directive (NID) NASA Systems Engineering Processes and Requirements, dated March 13, 2012.

NPR 7123.1A, NASA Systems Engineering Processes and Requirements, w/Change 1 (11/04/09), dated March 26, 2007.

1.1.1 Systems engineering at NASA requires the application of a systematic, disciplined engineering approach that is quantifiable, recursive, iterative, and repeatable for the development, operation, maintenance, and disposal of systems integrated into a whole throughout the life cycle of a project or program. The emphasis of systems engineering is on safely achieving stakeholder functional, physical, and operational performance requirements in the intended use environments over the system's planned life within cost and schedule constraints.

1.1.2 This document establishes the common technical processes for implementing NASA products and systems, as directed by NPD 7120.4, NASA Engineering and Program/Project Management Policy. Additionally, this NPR establishes the common NASA systems engineering technical model. This document complements the administration, management, and review of all programs and projects, as specified in NPR 7120.5, NASA Space Flight Program and Project Management Requirements, NPR 7120.7, NASA Information Technology and Institutional Infrastructure Program and Project Management Requirements, and NPR 7120.8, NASA Research and Technology Program and Project Management Requirements.

1.1.3 The processes described in this document build upon and apply best practices and lessons learned from NASA, other governmental agencies, and industry to clearly delineate a successful model to complete comprehensive technical work, reduce program and technical risk, and improve mission success. The requirements and practices established in this NPR should be tailored and customized, respectively, per Paragraph 2.2.

1.1.4 Precedence

The order of precedence in case of conflict between requirements is 51 U.S.C. § 20113(a)(1), National Aeronautics and Space Act of 1958, as amended; NPD 1000.0, NASA Governance and Strategic Management Handbook; NPD 1000.3, The NASA Organization; NPD 7120.4, NASA Engineering and Program/Project Management Policy; and NPR 7123.1, NASA Systems Engineering Processes and Requirements.

1.1.5 Figures

1.1.5.1 Figures within this NPR are not intended to be prescriptive but notional.

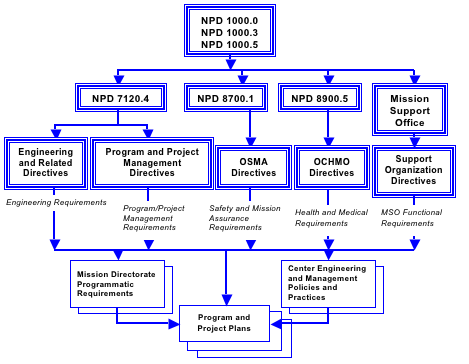

1.2.1 Institutional requirements are the responsibility of the institutional authorities. They focus on how NASA does business and are independent of any particular program or project. These requirements are issued by NASA Headquarters and by Center organizations, and are normally documented in NASA Policy Directives (NPDs), NASA Procedural Requirements (NPRs), NASA Standards, Center Policy Directives (CPDs), Center Procedural Requirements (CPRs), and Mission Directorate (MD) requirements. Figure 1-1 shows the flow down from NPD 1000.0, NASA Governance and Strategic Management Handbook, through Program and Project Plans.

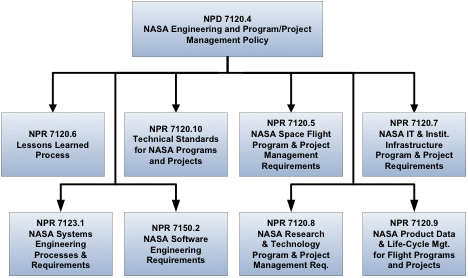

1.2.2 This NPR focuses on systems engineering processes and requirements. It is one of several related Engineering and Program/Project NPRs that flow down from NPD 7120.4, NASA Engineering and Program/Project Management Policy, as shown in Figure 1-2.

Figure 1-1 - Hierarchy of Related Documents

Figure 1-2 - Documentation Relationships

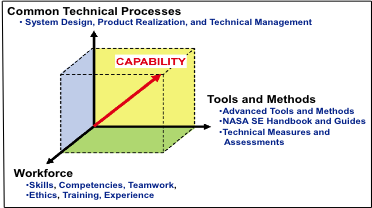

1.3.1. The common systems engineering framework consists of three elements that make up NASA systems engineering capability. The relationship of the three elements is illustrated in Figure 1-3. The integrated implementation of the three elements of the SE Framework is intended to improve the overall capability required for the efficient and effective engineering of NASA systems. The SE processes are one element of the larger context to produce quality products and achieve mission success. This NPR addresses the SE processes. The larger SE framework also includes the workforce and tools and methods. Additional initiatives to address these other elements include revision of the NASA handbook on systems engineering and development of tools and an assessment model. Together, these elements comprise the capability of an organization to perform successful SE. Each element is described below.

Figure 1-3 - SE Framework

1.3.2. Element 1: Common Technical Processes. The common technical processes of this NPR provide what has to be done to engineer system products within a project and why. These processes are applied to the hardware, software, and human systems integration of a system as one integrated whole. In addition to the common technical processes, contributions to improvements of SE capability also come from the inclusion of:

a. Concepts and terminology that are basic to consistent application and communication of the common technical processes Agency wide.

b. A structure for when the common technical processes are applied.

1.3.3. Element 2: Tools and Methods. Tools and methods enable the efficient and effective completion of the activities and tasks of the common technical processes. An essential contribution of this element to SE capability is the improvement of the engineering infrastructure through the three Agency-wide activities listed below:

a. Infusion of advanced methods and tools in the SE processes to achieve greater efficiency, collaboration, and communication among distributed teams.

b. Provision of a NASA handbook on SE methodologies (NASA/SP-2007-6105, NASA Systems Engineering Handbook) that is a source for various methods and procedures that Centers can draw upon to plan implementation of the required processes in their projects.

c. Measurement of the SE capability of projects within NASA and assessment of the improvements of capability resulting from implementation of the SE NPR, use of adopted methods and tools, and workforce engineering training.

1.3.4. Element 3: Workforce. A well-trained, knowledgeable, and experienced technical workforce is essential for improving SE capability. The workforce must be able to apply NASA and Center methods and tools for the completion of the required SE processes within the context of the program or project to which they are assigned. In addition, they must be able to effectively communicate requirements and solutions to customers, other engineers, and management to work efficiently and effectively on a team. Issues of recruitment, retention, and training are aspects included in this element. The OCE will facilitate the training of the NASA workforce on the application of this and associated NPRs.

1.4.1 This SE NPR is organized into the following chapters:

a. The Preface describes items such as the purpose, applicability, authority, and applicable documents of this SE NPR.

b. Chapter 1 (Introduction) describes the SE framework and document organization.

c. Chapter 2 describes the institutional and programmatic requirements, including roles and responsibilities. Tailoring of SE requirements and customization of SE practices are also addressed.

d. Chapter 3 describes the core set of common Agency-level technical processes and requirements for engineering NASA system products throughout the product life cycle. Appendix C contains supplemental amplifying material.

e. Chapter 4 describes the activities and requirements to be accomplished by assigned NASA technical teams or individuals (NASA employees and NASA support contractors) when performing technical oversight of a prime or other external contractor.

f. Chapter 5 describes the life-cycle and technical review requirements throughout the program and project life cycles. Appendix G contains entrance/success criteria guidance for each of the reviews.

g. Chapter 6 describes the Systems Engineering Management Plan, including the SEMP role, functions, and content. Appendix D provides details of a generic SEMP annotated outline.

2.1.1 General

The roles and responsibilities of senior management are defined in part in NPD 1000.0, NASA Governance and Strategic Management Handbook, and NPD 7120.4, NASA Engineering and Program/Project Management Policy. NPR 7120.5, NASA Space Flight Program and Project Management Requirements; NPR 7120.7, NASA Information Technology and Institutional Infrastructure Program and Project Management Requirements; NPR 7120.8, NASA Research and Technology Program and Project Management Requirements; and other NASA directives define the responsibilities of program and project managers. This NPR establishes systems engineering processes and responsibilities.

2.1.1.1 For programs and projects involving more than one Center, the lead organization will develop documentation for DGA approval to describe the hierarchy and reconciliation of Center plans implementing this NPR. The governing Mission Directorate or mission support office determines whether a Center executes a project in a lead role or in a supporting role. For Centers in supporting roles, compliance should be jointly negotiated and documented in the lead Center's project SEMP.

2.1.1.2 The roles and responsibilities associated with program and project management and Technical Authority (TA) are defined in the Program and Project Management NPRs (for example, NPR 7120.5 for space flight projects). Specific roles and responsibilities of the program/project manager and the engineering technical authority related to the SEMP are defined in Paragraphs 2.1.6 and 6.2.

2.1.2 Office of the Chief Engineer (OCE)

2.1.2.1 The OCE, under the authority of NPD 7120.4, will ensure compliance with this SE NPR.

2.1.2.2 The OCE will ensure that systems engineering policies are compatible across NASA.

2.1.3 Mission Directorate or Headquarters Program Offices

2.1.3.1 When programs and projects are managed at Headquarters or within Mission Directorates, that Program Office is responsible for the requirements in this NPR that are assigned to the Center Director. Technical teams residing at Headquarters will follow the requirements of this NPR unless specific process requirements have been established to implement this NPR by the governing organization. The technical teams residing at Centers will follow Center level process requirement documents.

2.1.4 Center Directors

2.1.4.1 In this document, the phrase "the Center Directors shall..." means the roles and responsibilities of the Center Directors may be further delegated within the organization as appropriate to the scope and scale of the system.

2.1.4.2 The Center Director is responsible and accountable for both Institutional Authority responsibilities and the proper planning and execution of programs and projects assigned to the Center.

2.1.4.3 Center Directors shall perform the following activities:

a. Establish policies, procedures, and processes to execute the requirements of this SE NPR [SE-01].

b. Assess and take corrective actions to improve the execution of the requirements of this SE NPR [SE-02].

c. Select appropriate standards applicable to projects under their control [SE-03].

d. Complete the compliance matrix, as tailored, in Appendix H.1 for those requirements owned by the Office of the Chief Engineer, and provide it to the OCE upon request [SE-04].

2.1.5 Technical Teams

2.1.5.1 Each technical team executes the Center processes to implement this SE NPR under the oversight of the Center Directors in accordance with the SEMP. The makeup and organization of each technical team is the responsibility of each Center or program and includes the personnel required to implement the project.

2.1.5.2 For those requirements owned by Center Directors, the technical team shall complete the compliance matrix in Appendix H.2 and include it in the SEMP [SE-05].

2.1.5.3 For systems that contain software, the technical team ensures that software developed within NASA or acquired complies with NPR 7150.2, NASA Software Engineering Requirements.

Note 1: NPR 7150.2 elaborates on the requirements in this document and determines the applicability of requirements based on the Agency's software classification.

Note 2: NPD 7120.4 contains additional Agency requirements for the acquisition, development, maintenance, and management of software.

2.1.6 Designated Governing Authority

The Designated Governing Authority (DGA) for the technical effort in this SE NPR is the Center Director or the person that has been designated by the Center Director to ensure the appropriate level of technical management oversight. Such designation is made from the technical line so that independence between programmatic and technical authority is maintained. The DGA works with the Program/Project Manager to manage the technical effort. The DGA is assigned primary responsibility for evaluating the technical content of a particular program or project to ensure that it is meeting the commitments specified in the key technical management documents. The DGA shall approve the SEMP, waiver authorizations, and other key technical documents to ensure independent assessment of technical content [SE-06]. The DGA and the program/project manager approve the SEMP.

Note 1: For large programs/projects, the DGA will typically be the associated independently funded Engineering Technical Authority (ETA). In the case of very small projects, DGA responsibilities are occasionally delegated to line managers or other technical experts who are not independently funded and do not serve in an official ETA capacity. If the DGA is not a recognized ETA, an ETA at the appropriate level will be required to approve the SEMP to ensure compliance with the Agency's technical authority process.

Note 2: For NPR 7120.7 projects affecting more than one Center, the NASA Chief Information Officer (CIO) or the person the NASA CIO designates is the DGA.

2.2.1 Tailoring SE Requirements

2.2.1.1 SE requirements tailoring is the process used to seek relief from SE NPR requirements consistent with program or project objectives, acceptable risk, and constraints.

2.2.1.2 The tailoring process (which can occur at any time in the program or project's life cycle) results in deviations or waivers to requirements depending on the timing of the request. Deviations and waivers of the requirements in this NPR can be submitted separately to the requirements owner or via the appropriate compliance matrix in Appendix H.

2.2.1.3 The results of a Center's tailoring of NPR 7123.1 SE requirements will be documented in the Compliance Matrix for Centers (Appendix H.1) and submitted to OCE upon request or as changes to the Center processes occur.

2.2.1.4 The results of the program/project Technical Team tailoring of SE requirements from either NPR 7123.1 or a particular Center's implementation of NPR 7123.1, whichever is applicable, will be documented in the next revision of the SEMP, along with supporting rationale and documented approvals from the requirement owner.

2.2.1.5 The appropriate requirement owner, as described in the compliance matrices (Appendix H) will have responsibility to approve or disapprove any SE NPR requirement that is tailored.

2.2.2 Customization of SE Practices

2.2.2.1 Customization is the modification of recommended SE practices that are used to accomplish the SE requirements. Examples of these practices are in Appendix C or in NASA/SP-2007-6105.

2.2.2.2 Technical teams are encouraged to customize their non-requirement SE practices. The results of this customization do not require waivers or deviations, but significant customization should be documented in the SEMP.

2.2.3 Considerations for Tailoring or Customization

2.2.3.1 Considerations for tailoring or customization should include: scope and visibility (e.g., organizations and partnerships involved, international agreements); risk tolerance and failure consequences; system size; system complexity (e.g., human spaceflight vs. flagship science vs. subscale technology demonstration, number of stages and interfaces, technology readiness level); impact on other systems; longevity; serviceability (including on-orbit); constraints (including cost, schedule, degree of insight/oversight permitted with partnerships or international agreements, etc.); safety; technology base; and industrial base.

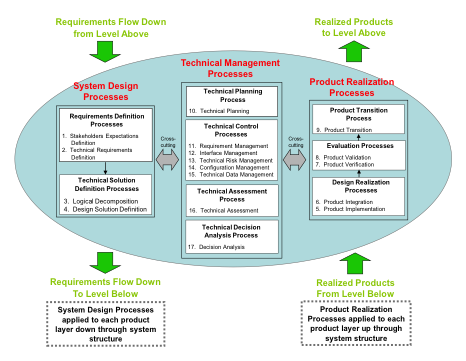

3.1.1 This chapter establishes the core set of common technical processes and requirements to be used by NASA projects in engineering system products during all life-cycle phases to meet phase exit criteria and project objectives. The 17 common technical processes are enumerated according to their description in this chapter and their interactions shown in Figure 3-1. This SE common technical processes model illustrates the use of: (1) the system design processes for "top-down" design of each product in the system structure; (2) the product realization processes for "bottom-up" realization of each product in the system structure; and (3) the cross-cutting technical management processes for planning, assessing, and controlling the implementation of the system design and product realization processes and to guide technical decision making (decision analysis). The SE common technical processes model is referred to as an "SE engine" in this SE NPR to stress that these common technical processes are used to drive the development of the system products and associated work products required by management to satisfy the applicable product life-cycle phase exit criteria while meeting stakeholder expectations within cost, schedule, and risk constraints.

3.1.2 This chapter identifies the following for each of the 17 common technical processes:

a. The specific requirement for Center Directors or designees to establish and maintain the process;

b. A brief description of how the process is used as an element of the Systems Engineering Engine; and

c. A reference to typical practices for the process as identified in Appendix C.

3.1.3 It should be emphasized that the Practices for Common Technical Processes documented in Appendix C do not represent additional requirements that must be implemented by the technical team. Appendix C is provided as a summary of typical best practices associated with the 17 common technical processes. As such, it should be considered in conjunction with other sources of systems engineering guidance such as NASA/SP-2007-6105, NASA Systems Engineering Handbook, as the technical team develops a customized approach for the application of these processes consistent with requirements implemented by Center documentation.

Figure 3-1 - Systems Engineering (SE) Engine

3.1.4 The context in which the common technical processes are used is provided below:

3.1.4.1 The common technical processes are applied to each product layer to concurrently develop the products that will satisfy the operational or mission functions of the system (end products) and that will satisfy the life-cycle support functions of the system (enabling products). In this document, product layer is defined as the product breakdown hierarchy that includes both the end product and enabling product hierarchy. The enabling products facilitate the activities of system design, product realization, operations and mission support, sustainment, and end-of-product-life disposal or recycling, by having the products and services available when needed.

3.1.4.2 The common technical processes are applied to design a system solution definition for each product layer down and across each level of the system structure and to realize the product layer end products up and across the system structure. Figure 3-2 illustrates how the three major sets of processes of the Systems Engineering (SE) Engine (system design processes, product realization processes, and technical management processes) are applied to each product layer within a system structure.

Figure 3-2 - Application of SE Engine Common Technical Processes Within System Structure

3.1.4.3 The common technical processes are used to define the product layers of the system structure in each applicable phase of the relevant life cycle to generate work products and system products needed to satisfy the exit criteria of the applicable phase.

3.1.4.4 The common technical processes are applied by assigned technical teams and individuals trained in the requirements of this SE NPR.

3.1.4.5 The assigned technical teams and individuals are using the appropriate and available sets of tools and methods to accomplish required common technical process activities. This includes the use of modeling and simulation as applicable to the product phase, location of the product layer in the system structure, and the applicable phase exit criteria.
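The "top-down" design and "bottom-up" realization described in Paragraphs 3.1.1 and 3.1.4.2 can be pictured as two traversals of the same product hierarchy. The sketch below is illustrative only and assumes a simple tree of products. The class `Product`, the functions `design_order` and `realization_order`, and the product names are hypothetical. The sketch leaves out enabling products, the cross-cutting technical management processes, and the iteration between processes that the SE engine involves.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Product:
    """One product in a hypothetical system structure."""
    name: str
    children: list[Product] = field(default_factory=list)


def design_order(product: Product) -> list[str]:
    """Top-down: a product is designed before its lower-level products."""
    order = [product.name]
    for child in product.children:
        order.extend(design_order(child))
    return order


def realization_order(product: Product) -> list[str]:
    """Bottom-up: lower-level products are realized before their parent."""
    order = []
    for child in product.children:
        order.extend(realization_order(child))
    order.append(product.name)
    return order


# Hypothetical three-level system structure.
system = Product("system", [
    Product("subsystem A", [Product("component A1")]),
    Product("subsystem B"),
])

print(design_order(system))
# ['system', 'subsystem A', 'component A1', 'subsystem B']
print(realization_order(system))
# ['component A1', 'subsystem A', 'subsystem B', 'system']
```

The two orderings visit the same products. Only the direction differs, which is the point the SE engine figures make graphically.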

3.2.1 For this section, "establish" means developing policy, work instructions, or procedures to implement process activities. "Maintain" includes planning the process, providing resources, assigning responsibilities, training people, managing configurations, identifying and involving stakeholders, and monitoring and controlling the process. The technical team is responsible for the execution of these 17 required processes per Paragraph 2.1.5.

3.2.2 Stakeholder Expectations Definition Process

3.2.2.1 Center Directors or designees shall establish and maintain a Stakeholder Expectations Definition process to include activities, requirements, guidelines, and documentation for the definition of stakeholder expectations for the applicable product layer [SE-07].

3.2.2.2 The stakeholder expectations definition process is used to elicit and define use cases, scenarios, concept of operations, and stakeholder expectations for the applicable product life-cycle phases and product layer. This includes expectations such as: (a) operational end products and life-cycle-enabling products of the product layer; (b) affordability; (c) operator or user interfaces; (d) expected skills and capabilities of operators or users; (e) expected number of simultaneous users; (f) system and human performance criteria; (g) technical authority, standards, regulations, and laws; (h) factors such as health and safety, planetary protection, orbital debris, quality, security, context of use by humans, reliability, availability, maintainability, electromagnetic compatibility, interoperability, testability, transportability, supportability, usability, and disposability; and (i) local management constraints on how work will be done (e.g., operating procedures). The baselined stakeholder expectations are used for validation of the product layer end product during product realization. At this point, Measures of Effectiveness (MOEs) are defined.

3.2.2.3 Typical practices of this process are defined in Appendix C.1.1.

3.2.3 Technical Requirements Definition Process

3.2.3.1 Center Directors or designees shall establish and maintain a Technical Requirements Definition process to include activities, requirements, guidelines, and documentation for the definition of technical requirements from the set of agreed upon stakeholder expectations for the applicable product layer [SE-08].

3.2.3.2 The technical requirements definition process is used to transform the baselined stakeholder expectations into unique, quantitative, and measurable technical requirements expressed as "shall" statements that can be used for defining a design solution for the product layer end product and related enabling products. This process also includes validation of the requirements to ensure that the requirements are well-formed (clear and unambiguous), complete (agrees with customer and stakeholder needs and expectations), consistent (conflict free), and individually verifiable and traceable to a higher level requirement or goal. As part of this process, Measures of Performance (MOPs) and Technical Performance Measures (TPMs) are defined.
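As an illustration only, a few of the validation checks named above lend themselves to simple automation. The sketch below assumes a hypothetical `Requirement` record (the class, its field names, and `check_requirement` are assumptions, not part of this NPR) and checks only that a statement uses "shall," traces to a parent, and has a verification method identified. Judging clarity, completeness, and consistency remains an engineering review activity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Requirement:
    """Hypothetical requirement record; field names are illustrative assumptions."""
    req_id: str
    text: str
    parent_id: Optional[str] = None            # higher level requirement or goal
    verification_method: Optional[str] = None  # e.g., "test" or "analysis"


def check_requirement(req: Requirement) -> List[str]:
    """Return findings; an empty list means these mechanical checks passed."""
    findings = []
    # Pad with spaces so "shall" is matched as a whole word.
    if " shall " not in f" {req.text.lower()} ":
        findings.append("not expressed as a 'shall' statement")
    if req.parent_id is None:
        findings.append("not traceable to a higher level requirement or goal")
    if req.verification_method is None:
        findings.append("no verification method identified")
    return findings
```

A check like this can flag candidates for review but cannot by itself establish that a requirement is valid.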

3.2.3.3 Typical practices of this process are defined in Appendix C.1.2.

3.2.4 Logical Decomposition Process

3.2.4.1 Center Directors or designees shall establish and maintain a Logical Decomposition process to include activities, requirements, guidelines, and documentation for logical decomposition of the validated technical requirements of the applicable product layer [SE-09].

3.2.4.2 The logical decomposition process is used to improve understanding of the defined technical requirements and the relationships among the requirements (e.g., functional, behavioral, performance, and temporal) and to transform the defined set of technical requirements into a set of logical decomposition models and their associated set of derived technical requirements for lower levels of the system and for input to the design solution definition process.

3.2.4.3 Typical practices of this process are defined in Appendix C.1.3.

3.2.5 Design Solution Definition Process

3.2.5.1 Center Directors or designees shall establish and maintain a Design Solution Definition process to include activities, requirements, guidelines, and documentation for designing product solution definitions within the applicable product layer that satisfy the derived technical requirements [SE-10].

3.2.5.2 The design solution definition process is used to translate the outputs of the logical decomposition process into a design solution definition that is in a form consistent with the product life-cycle phase and product layer location in the system structure and that will satisfy phase exit criteria. This includes transforming the defined logical decomposition models and their associated sets of derived technical requirements into alternative solutions, then analyzing each alternative to be able to select a preferred alternative, and fully defining that alternative into a final design solution definition that will satisfy the requirements.

3.2.5.3 These design solution definitions will be used for generating end products either by using the product implementation process or product integration process as a function of the position of the product layer in the system structure and whether there are additional subsystems of the end product that need to be defined. The output definitions from the design solution (end product specifications) will be used for conducting product verification.

3.2.5.4 Typical practices of this process are defined in Appendix C.1.4.

3.2.6 Product Implementation Process

3.2.6.1 Center Directors or designees shall establish and maintain a Product Implementation process to include activities, requirements, guidelines, and documentation for implementation of a design solution definition by making, buying, or reusing an end product of the applicable product layer [SE-11].

3.2.6.2 The product implementation process is used to generate a specified product of a product layer through buying, making, or reusing in a form consistent with the product life-cycle phase exit criteria and that satisfies the design solution definition (e.g., drawings, specifications).

3.2.6.3 Typical practices of this process are defined in Appendix C.2.1.

3.2.7 Product Integration Process

3.2.7.1 Center Directors or designees shall establish and maintain a Product Integration process to include activities, requirements, guidelines, and documentation for the integration of lower level products into an end product of the applicable product layer in accordance with its design solution definition [SE-12].

3.2.7.2 The product integration process is used to transform lower level, validated end products into the desired end product of the higher level product layer through assembly and integration.

3.2.7.3 Typical practices of this process are defined in Appendix C.2.2.

3.2.8 Product Verification Process

3.2.8.1 Center Directors or designees shall establish and maintain a Product Verification process to include activities, requirements/specifications, guidelines, and documentation for verification of end products generated by the product implementation process or product integration process against their design solution definitions [SE-13].

3.2.8.2 The product verification process is used to demonstrate that an end product generated from product implementation or product integration conforms to its design solution definition requirements as a function of the product life-cycle phase and the location of the product layer end product in the system structure. Special attention is given to demonstrating satisfaction of the MOPs defined for each MOE during conduct of the technical requirements definition process.

3.2.8.3 Typical practices of this process are defined in Appendix C.2.3.

3.2.9 Product Validation Process

3.2.9.1 Center Directors or designees shall establish and maintain a Product Validation process to include activities, requirements, guidelines, and documentation for validation of end products generated by the product implementation process or product integration process against their stakeholder expectations [SE-14].

3.2.9.2 The product validation process is used to confirm that a verified end product generated by product implementation or product integration fulfills (satisfies) its intended use when placed in its intended environment and to ensure that any anomalies discovered during validation are appropriately resolved prior to delivery of the product (if validation is done by the supplier of the product) or prior to integration with other products into a higher level assembled product (if validation is done by the receiver of the product). The validation is done against the set of baselined stakeholder expectations. Special attention should be given to demonstrating satisfaction of the MOEs identified during conduct of the stakeholder expectations definition process. The type of product validation is a function of the form of the product and product life-cycle phase and in accordance with an applicable customer agreement.

3.2.9.3 Typical practices of this process are defined in Appendix C.2.4.

3.2.10 Product Transition Process

3.2.10.1 Center Directors or designees shall establish and maintain a Product Transition process to include activities, requirements, guidelines, and documentation for transitioning end products to the next higher level product layer customer or user [SE-15].

3.2.10.2 The product transition process is used to transition a verified and validated end product that has been generated by product implementation or product integration to the customer at the next level in the system structure for integration into an end product or, for the top level end product, transitioned to the intended end user. The form of the product transitioned will be a function of the product life-cycle phase and the location within the system structure of the product layer in which the end product exists.

3.2.10.3 Typical practices of this process are defined in Appendix C.2.5.

3.2.11 Technical Planning Process

3.2.11.1 Center Directors or designees shall establish and maintain a Technical Planning process to include activities, requirements, guidelines, and documentation for planning the technical effort [SE-16].

3.2.11.2 The technical planning process is used to plan for the application and management of each common technical process. It is also used to identify, define, and plan the technical effort applicable to the product life-cycle phase for product layer location within the system structure and to meet project objectives and product life-cycle phase exit criteria. A key document generated by this process is the SEMP (See Chapter 6).

3.2.11.3 Typical practices of this process are defined in Appendix C.3.1.

3.2.12 Requirements Management Process

3.2.12.1 Center Directors or designees shall establish and maintain a Requirements Management process to include activities, requirements, guidelines, and documentation for management of requirements throughout the system life cycle [SE-17].

3.2.12.2 The requirements management process is used to: (a) manage the product requirements identified, baselined, and used in the definition of the product layer products during system design; (b) provide bidirectional traceability back to the top product layer requirements; and (c) manage the changes to established requirement baselines over the life cycle of the system products.
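Bidirectional traceability in item (b) can be pictured as upward links (child to parent) paired with derived downward links (parent to children). The following is a minimal sketch under assumed conventions: requirement IDs are plain strings, `None` marks a top product layer requirement, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def build_trace(parents: Dict[str, Optional[str]]) -> Tuple[Dict[str, List[str]], List[str]]:
    """Derive downward links from upward (child -> parent) links.

    Returns (children, orphans), where orphans are requirements whose
    stated parent is not in the requirement set.
    """
    children: Dict[str, List[str]] = defaultdict(list)
    orphans: List[str] = []
    for req_id, parent_id in parents.items():
        if parent_id is None:
            continue  # top product layer requirement
        if parent_id in parents:
            children[parent_id].append(req_id)
        else:
            orphans.append(req_id)
    return dict(children), orphans


def trace_to_top(req_id: str, parents: Dict[str, Optional[str]]) -> List[str]:
    """Follow upward links from req_id toward the top product layer."""
    path = [req_id]
    while parents.get(path[-1]) is not None:
        nxt = parents[path[-1]]
        if nxt in path:
            raise ValueError(f"circular trace detected at {nxt}")
        path.append(nxt)
    return path
```

Orphaned requirements and circular traces are the kinds of defects a requirements management process would be expected to surface before a baseline is established.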

3.2.12.3 Typical practices of this process are defined in Appendix C.3.2.

3.2.13 Interface Management Process

3.2.13.1 Center Directors or designees shall establish and maintain an Interface Management process to include activities, requirements, guidelines, and documentation for management of the interfaces defined and generated during the application of the system design processes [SE-18].

3.2.13.2 The interface management process is used to: (a) establish and use formal interface management to assist in controlling system product development efforts when the efforts are divided between Government programs, contractors, and/or geographically diverse technical teams within the same program or project; and (b) maintain interface definition and compliance among the end products and enabling products that compose the system, as well as with other systems with which the end products and enabling products must interoperate.

3.2.13.3 Typical practices of this process are defined in Appendix C.3.3.

3.2.14 Technical Risk Management Process

3.2.14.1 Center Directors or designees shall establish and maintain a Technical Risk Management process to include activities, requirements, guidelines, and documentation for management of the risk identified during the technical effort [SE-19].

3.2.14.2 The technical risk management process is used to make risk-informed decisions and examine, on a continuing basis, the potential for deviations from the project plan and the consequences that could result should they occur. This enables risk-handling activities to be planned and invoked as needed across the life of the product or project to mitigate impacts on achieving product life-cycle phase exit criteria and meeting technical objectives. The technical team supports the development of potential health and safety, cost, and schedule impacts for identified technical risks and any associated mitigation strategies. NPR 8000.4, Agency Risk Management Procedural Requirements, is to be used as a source document for defining this process, and NPR 8705.5, Technical Probabilistic Risk Assessment (PRA) Procedures for Safety and Mission Success for NASA Programs and Projects, provides one means of identifying and assessing technical risk. While the focus of this requirement is the management of technical risk, the process applies to the management of programmatic risks as well. The highly interdependent nature of health and safety, technical, cost, and schedule risks requires the broader program/project team to consistently address risk management with an integrated approach. NASA/SP-2011-3422, NASA Risk Management Handbook, provides guidance for managing risk in an integrated fashion.

3.2.14.3 Typical practices of this process are defined in Appendix C.3.4.

3.2.15 Configuration Management Process

3.2.15.1 Center Directors or designees shall establish and maintain a Configuration Management process to include activities, requirements, guidelines, and documentation for configuration management [SE-20].

3.2.15.2 The configuration management process for end products, enabling products, and other work products placed under configuration control is used to: (a) identify the configuration of the product or work product at various points in time; (b) systematically control changes to the configuration of the product or work product; (c) maintain the integrity and traceability of the configuration of the product or work product throughout its life; and (d) preserve the records of the product or end product configuration throughout its life cycle, dispositioning them in accordance with NPR 1441.1, NASA Records Retention Schedules.

3.2.15.3 Typical practices of this process are defined in Appendix C.3.5.

3.2.16 Technical Data Management Process

3.2.16.1 Center Directors or designees shall establish and maintain a Technical Data Management process to include activities, requirements, guidelines, and documentation for management of the technical data generated and used in the technical effort [SE-21].

3.2.16.2 The technical data management process is used to plan for, acquire, access, manage, protect, and use data of a technical nature to support the total life cycle of a system. This process is used to capture trade studies, cost estimates, technical analyses, reports, and other important information.

3.2.16.3 Typical practices of the technical data management process are defined in Appendix C.3.6.

3.2.17 Technical Assessment Process

3.2.17.1 Center Directors or designees shall establish and maintain a Technical Assessment process to include activities, requirements, guidelines, and documentation for making assessments of the progress of planned technical effort and progress toward requirements satisfaction [SE-22].

3.2.17.2 The technical assessment process is used to help monitor progress of the technical effort and provide status information for support of the system design, product realization, and technical management processes. A key aspect of the technical assessment process is the conduct of life-cycle and technical reviews throughout the system life cycle in accordance with Chapter 5.

3.2.17.3 Typical practices of this process are defined in Appendix C.3.7.

3.2.18 Decision Analysis Process

3.2.18.1 Center Directors or designees shall establish and maintain a Decision Analysis process to include activities, requirements, guidelines, and documentation for making technical decisions [SE-23].

3.2.18.2 The decision analysis process, including processes for identification of decision criteria, identification of alternatives, analysis of alternatives, and alternative selection, is applied to technical issues to support their resolution. It considers relevant data (e.g., engineering performance, quality, and reliability) and associated uncertainties. Decision analysis is used throughout the system life cycle to formulate candidate decision alternatives and evaluate their impacts on health and safety, technical, cost, and schedule performance. NASA/SP-2010-576, NASA Risk-informed Decision Making Handbook, provides guidance for analyzing decision alternatives in a risk-informed fashion.
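The steps named above (decision criteria, alternatives, analysis, selection) can be illustrated with a weighted-sum scoring sketch. This is one common technique, chosen here for illustration; it is not prescribed by this NPR, it does not model the associated uncertainties, and the function names, criteria, and scores are hypothetical.

```python
from typing import Dict


def score_alternatives(weights: Dict[str, float],
                       scores: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Return the normalized weighted score of each alternative.

    weights: decision criterion -> relative weight
    scores:  alternative -> {criterion -> score}, higher is better
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("criterion weights must sum to a positive value")
    return {
        alt: sum(weights[c] * crit_scores[c] for c in weights) / total
        for alt, crit_scores in scores.items()
    }


def select_preferred(weighted: Dict[str, float]) -> str:
    """Return the alternative with the highest weighted score."""
    return max(weighted, key=weighted.get)
```

In practice the weights and scores are themselves products of analysis and stakeholder agreement, and a risk-informed approach would also examine how sensitive the selection is to them.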

3.2.18.3 Typical practices of this process are defined in Appendix C.3.8.

4.1.1 Oversight/insight of projects where prime or other external contractors do the majority of the development effort has always been an important part of NASA programs and projects. "Insight" is a monitoring activity, whereas "oversight" is an exercise of authority by the Government. The Federal Acquisition Regulation and the NASA Supplement to the Federal Acquisition Regulation govern the acquisition planning, contract formation, and contract administration process. Authority to interface with the contractor can only be delegated by the contracting officer. The activities listed in Paragraph 4.2 will be coordinated with the cognizant contracting officer. Detailed definitions for insight and oversight are provided in the NASA Federal Acquisition Regulation Supplement, subpart 1846.4, Government Contract Quality Assurance.

4.1.2 This chapter defines a minimum set of technical activities and requirements for a NASA project technical team to perform before contract award, during contract performance, and upon completion of the contract on projects where prime or external contractors do the majority of the development effort. These activities and requirements are intended to supplement the common technical process activities and requirements of Chapter 3 and thus enhance the outcome of the contracted effort.

4.2.1 The NASA technical team shall define the engineering activities for the periods before contract award, during contract performance, and upon contract completion in the SEMP [SE-24]. The content of Appendix D should be used as a guide.

4.2.2 The NASA technical team shall use common technical processes, as implemented by the Center's documentation, to establish the technical inputs to the Request for Proposal (RFP) appropriate for the product to be developed, including product requirements and Statement of Work tasks [SE-25].

4.2.3 The NASA technical team shall determine the technical work products to be delivered by the offeror or contractor, to include a contractor SEMP that specifies the contractor's systems engineering approach for requirements development; technical solution definition; design realization; product evaluation; product transition; and technical planning, control, assessment, and decision analysis [SE-26].

4.2.4 The NASA technical team shall provide the requirements for technical insight and oversight activities planned in the NASA SEMP to the contracting officer for inclusion in the RFP [SE-27].

Note: Care should be taken that no requirements or solicitation information is divulged prior to the release of the solicitation by the contracting officer.

4.2.5 The NASA technical team shall have representation in the evaluation of offeror proposals in accordance with applicable NASA and Center source selection procedures [SE-28].

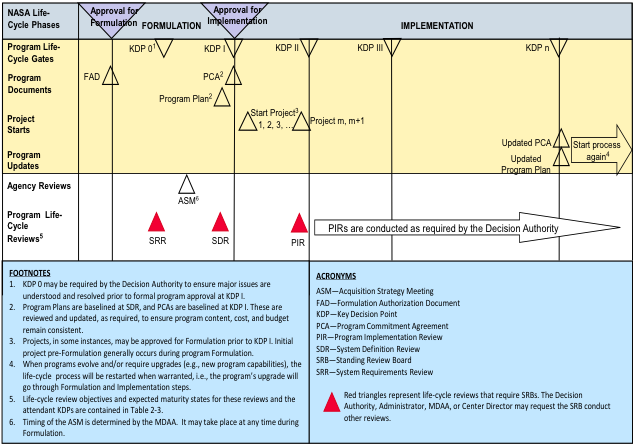

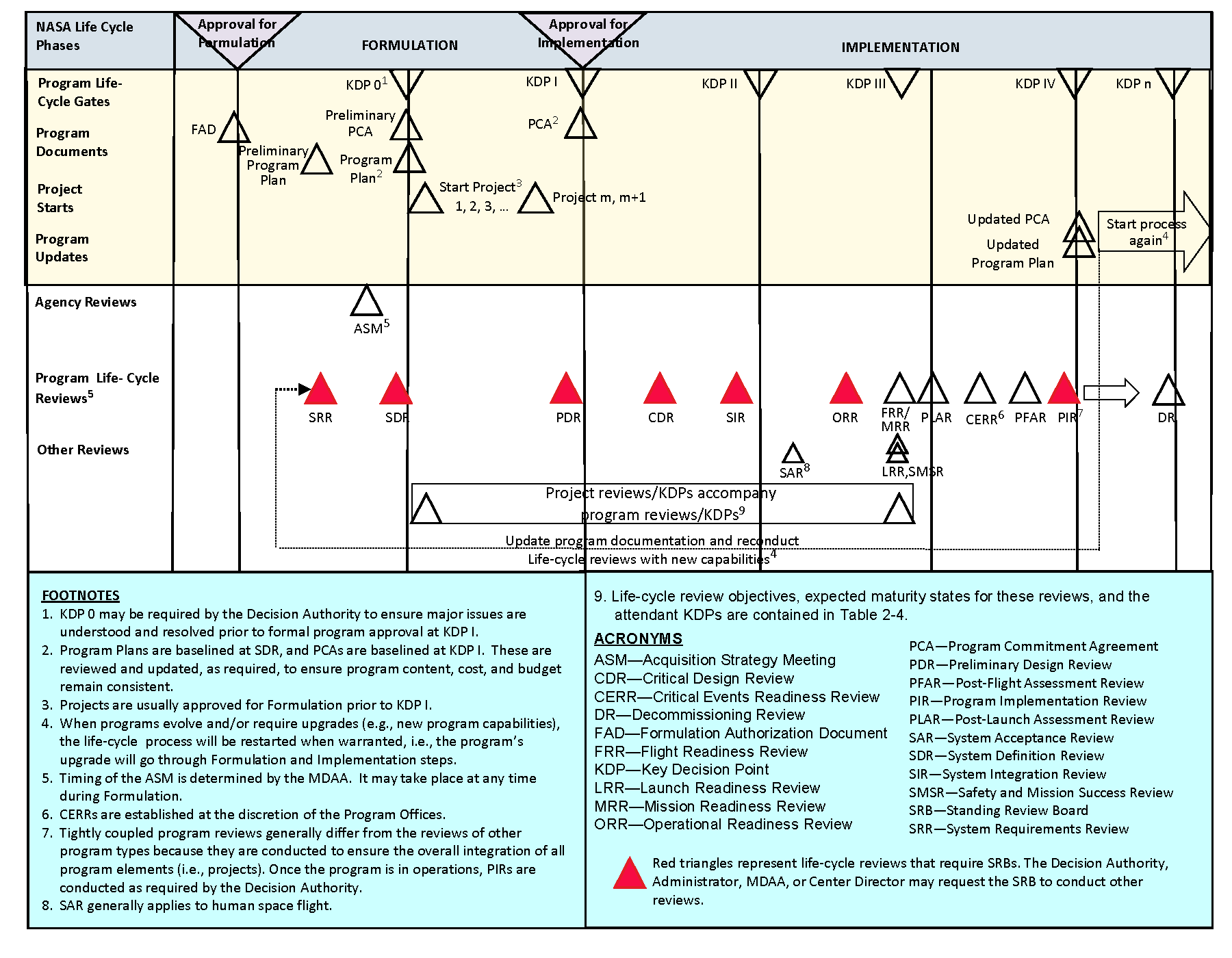

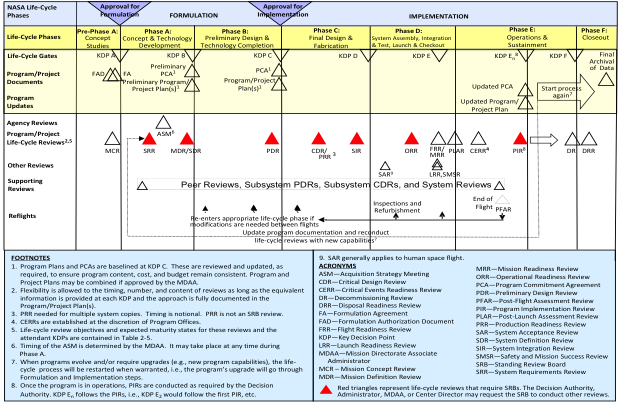

5.1.1 NASA defines four types of programs that may contain projects: (1) uncoupled programs; (2) loosely coupled programs; (3) tightly coupled programs; and (4) single-project programs. Which life cycle a program/project uses will be dependent on what type of program/project it is and whether the program/project is producing products for space flight, advanced development, information technology, construction of facilities, or other applications. A specific life cycle may be required by associated project management NPRs. For example, NPR 7120.5 defines the life cycles for space flight programs and projects. NPR 7120.7 defines life cycles for IT and institutional program/projects. For Announcement of Opportunity (AO) driven projects, refer to NPR 7120.5, Paragraph 2.2.7.1. For purposes of illustration, life cycles from NPR 7120.5 are repeated here in Figures 5-1 through 5-4.

5.1.2 The application of the common technical processes within each life-cycle phase produces technical results that provide inputs to life-cycle and technical reviews and support informed management decisions for progressing to the next life-cycle phase.

5.1.3 Each program and project will perform the life-cycle reviews as required by their governing project management NPR, applicable Center practices, and the requirements of this document. These reviews provide a periodic assessment of the program's or project's technical and programmatic status and health at key points in the life cycle. The technical team provides the technical inputs to be incorporated into the overall program/project review package. Appendix G provides guidelines for the entrance and exit criteria for each of these reviews with a focus on the technical products. Additional programmatic products may also be required by the governing program/project NPR. Programs/projects are expected to customize the entrance/exit criteria as appropriate to the size/complexity and unique needs of their activities.

5.1.4 The progress between life-cycle phases is marked by key decision points (KDPs). At each KDP, management examines the maturity of the technical aspects of the project. For example, management examines whether the resources (staffing and funding) are sufficient for the planned technical effort, whether the technical maturity has evolved, what the technical and nontechnical internal issues and risks are, and whether the stakeholder expectations have changed. If the technical and management aspects of the project are satisfactory, including the implementation of corrective actions, then the project can be approved to proceed to the next phase. Program and Project Management NPRs (NPR 7120.5, NPR 7120.7, and NPR 7120.8) contain further details relating to life-cycle progress.

Note: For example only. Refer to NPR 7120.5 for the official life cycle. Table 2-3 in the above references is in NPR 7120.5.

Figure 5-1 - NASA Uncoupled and Loosely Coupled Program Life Cycle

Note: For example only. Refer to NPR 7120.5 for the official life cycle. Table 2-3 in the above references is in NPR 7120.5.

Figure 5-2 - NASA Tightly Coupled Program Life Cycle

Note: For example only. Refer to NPR 7120.5 for the official life cycle. Table 2-3 in the above references is in NPR 7120.5.

Figure 5-3 - NASA Single-Project Program Life Cycle

Note: For example only. Refer to NPR 7120.5 for the official life cycle. Table 2-3 in the above references is in NPR 7120.5.

Figure 5-4 - The NASA Project Life Cycle

5.1.5 Life-cycle reviews are event based and occur when the entrance criteria for the applicable review are satisfied. (Appendix G provides guidance.) They occur based on the maturity of the relevant technical baseline as opposed to calendar milestones (e.g., the quarterly progress review, the yearly summary).

5.1.6 Accurate assessment of technology maturity is critical to technology advancement and its subsequent incorporation into operational products. The program/project ensures that Technology Readiness Levels (TRLs) and/or other measures of technology maturity are used to assess maturity throughout the life cycle of the project. When other measures of technology maturity are used, they should be mapped back to TRLs. The TRLs for hardware and software are defined in Appendix E. Moving to higher levels of maturity requires an assessment of a range of capabilities for design, analysis, manufacture, and test. Measures for assessing technology maturity are described in NASA/SP-2007-6105, NASA Systems Engineering Handbook. The initial maturity assessment is done in the Formulation phase and updated at project status reviews.

5.2.1 Planning and Conduct

5.2.1.1 The technical team shall develop and document plans for life-cycle and technical reviews for use in the project planning process [SE-32]. The life-cycle and technical review schedule, as documented in the SEMP, will be reflected in the overall project plan. The results of each life-cycle and technical review will be used to update the technical review plan as part of the SEMP update process. The review plans, data, and results should be maintained and dispositioned as Federal records.

5.2.1.2 The technical team ensures that system aspects represented or implemented in software are included in all life-cycle and technical reviews to demonstrate that project technical goals and progress are being achieved and that all software review requirements are implemented. Software review requirements are provided in NPR 7150.2, with guidance provided in NASA-HDBK-2203, NASA Software Engineering Handbook.

5.2.1.3 The technical team shall conduct the life-cycle and technical reviews as indicated in the governing project management NPR [SE-33]. Additional description of technical reviews is provided in NASA/SP-2007-6105, NASA Systems Engineering Handbook. (For requirements on program and project life cycles and management reviews, see the appropriate NPR, e.g., NPR 7120.5.)

5.2.1.4 The technical team shall participate in the development of entrance and success criteria for each of the respective reviews [SE-34]. The technical team should utilize the best practices defined in Appendix G as well as Center best practices for defining entrance and success criteria.

5.2.1.5 The technical team shall provide the following minimum products at the associated milestone review at the indicated maturity level:

a. Mission Concept Review (MCR):

(1) Baselined stakeholder identification and expectation definitions [SE-35].

(2) Baselined concept definition [SE-36].

(3) Approved MOE definition [SE-37].

b. System Requirements Review (SRR):

(1) Baselined SEMP for projects, single-project programs, and one-step AO programs [SE-38].

(2) Baselined requirements [SE-39].

c. Mission Definition Review/System Definition Review (MDR/SDR):

(1) Approved TPM definitions [SE-40].

(2) Baselined architecture definition [SE-41].

(3) Baselined allocation of requirements to next lower level [SE-42].

(4) Initial trend of required leading indicators [SE-43].

(5) Baseline SEMP for uncoupled, loosely coupled, tightly coupled, and two-step AO programs [SE-44].

d. Preliminary Design Review (PDR):

(1) Preliminary design solution definition [SE-45].

e. Critical Design Review (CDR):

(1) Baseline detailed design [SE-46].

f. System Integration Review (SIR):

(1) Updated integration plan [SE-47].

(2) Preliminary verification and validation (V&V) results [SE-48].

g. Operational Readiness Review (ORR):

(1) Updated operational plans [SE-49].

(2) Updated operational procedures [SE-50].

(3) Preliminary decommissioning plans [SE-51].

h. Flight Readiness Review (FRR):

(1) Baseline disposal plans [SE-52].

(2) Baseline V&V results [SE-53].

(3) Final certification for flight/use [SE-54].

i. Decommissioning Review (DR):

(1) Baseline decommissioning plans [SE-55].

j. Disposal Readiness Review (DRR):

(1) Updated disposal plans [SE-56].
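The minimum products listed in Paragraph 5.2.1.5 can be captured as a lookup table for tracking purposes. The sketch below is illustrative only: the dictionary layout and the `missing_products` helper are assumptions, and the check ignores applicability by program type (for example, SE-38 and SE-44 each apply only to certain program/project types).

```python
from typing import Dict, Iterable, List

# Review -> {requirement ID: minimum product}, transcribed from Paragraph 5.2.1.5.
REQUIRED_PRODUCTS: Dict[str, Dict[str, str]] = {
    "MCR": {
        "SE-35": "Baselined stakeholder identification and expectation definitions",
        "SE-36": "Baselined concept definition",
        "SE-37": "Approved MOE definition",
    },
    "SRR": {
        "SE-38": "Baselined SEMP (projects, single-project, one-step AO programs)",
        "SE-39": "Baselined requirements",
    },
    "MDR/SDR": {
        "SE-40": "Approved TPM definitions",
        "SE-41": "Baselined architecture definition",
        "SE-42": "Baselined allocation of requirements to next lower level",
        "SE-43": "Initial trend of required leading indicators",
        "SE-44": "Baseline SEMP (uncoupled, loosely/tightly coupled, two-step AO)",
    },
    "PDR": {"SE-45": "Preliminary design solution definition"},
    "CDR": {"SE-46": "Baseline detailed design"},
    "SIR": {
        "SE-47": "Updated integration plan",
        "SE-48": "Preliminary V&V results",
    },
    "ORR": {
        "SE-49": "Updated operational plans",
        "SE-50": "Updated operational procedures",
        "SE-51": "Preliminary decommissioning plans",
    },
    "FRR": {
        "SE-52": "Baseline disposal plans",
        "SE-53": "Baseline V&V results",
        "SE-54": "Final certification for flight/use",
    },
    "DR": {"SE-55": "Baseline decommissioning plans"},
    "DRR": {"SE-56": "Updated disposal plans"},
}


def missing_products(review: str, delivered: Iterable[str]) -> List[str]:
    """Return requirement IDs for the review that are not in `delivered`."""
    delivered_ids = set(delivered)
    return [rid for rid in REQUIRED_PRODUCTS[review] if rid not in delivered_ids]
```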

5.2.1.6 Table 5-1 shows the maturity of primary SE products at the associated milestone reviews for all types and sizes of programs/projects. The required SE products identified above are highlighted in blue in the table. For further description of the primary SE products, refer to Appendix G. For additional guidance on software product maturity for project life-cycle reviews, refer to NASA-HDBK-2203, NASA Software Engineering Handbook. Additional programmatic products are required by the governing program/project management NPRs, but not listed herein.

5.2.1.7 The expectation for products identified as "baselined" in Paragraph 5.2.1.5 and Table 5-1 is that they will be at least final drafts going into the designated life-cycle review. Subsequent to the review, the final draft will be updated in accordance with approved review comments, Review Item Discrepancies, or Requests for Action and formally baselined.

5.2.1.8 Terms for maturity levels of technical products identified in this section are addressed in detail in Appendix F.

5.2.1.9 The technical team ensures that each program or project hosting equipment, experiments, or payloads with radio frequency (RF) requirements includes success criteria in all life-cycle and technical reviews to receive approval from the responsible Center spectrum manager that program or project spectrum goals and progress are being achieved and satisfy all spectrum regulatory requirements. Spectrum certification requirements are provided in NPD 2570.5 and NPR 2570.1. NPR 2570.1 takes precedence over this document regarding spectrum-related procedures and processes.

Table 5-1 - SE Product Maturity

** Item is a required product for that review.

1 For projects, single-project programs, and one-step AO programs.

2 For uncoupled, tightly coupled, and loosely coupled programs, and two-step AO programs.

5.2.2 Review Process and Practices

5.2.2.1 For each type of program/project, technical efforts are monitored throughout the life cycle to ensure that the technical goals of the project are being achieved and that the technical direction of the project is appropriate.

5.2.2.2 Technical teams shall monitor technical effort through periodic technical status reviews [SE-57].

5.2.2.3 A technical status review is an evaluation of the project, or element thereof, by the technical team and other knowledgeable participants for the purposes of:

a. Assessing the status of and progress toward accomplishing the planned activities.

b. Validating the technical tradeoffs explored and design solutions proposed.

c. Identifying technical weaknesses or marginal design and potential problems (risks) and recommending improvements and corrective actions.

d. Making judgments on the activities' readiness for the follow-on events, including additional future evaluation milestones to improve the likelihood of a successful outcome.

e. Making assessments and recommendations to the project team, Center, and Agency management.

f. Providing a historical record of decisions that were made during these formal reviews which can be referenced at a later date.

g. Assessing the technical risk status and current risk profile.

5.3.1 Reviews are considered complete when the following are accomplished:

a. Agreement exists for the disposition of all Review Item Discrepancies (RIDs) and Requests for Action (RFAs).

b. The review board report and minutes are complete and distributed.

c. Agreement exists on a plan to address the issues and concerns in the review board's report.

d. Agreement exists on a plan for addressing the actions identified out of the review.

e. Liens against the review results are closed, or an adequate and timely plan exists for their closure.

f. Differences of opinion between the project under review and the review board(s) have been resolved, or a timely plan exists to resolve the issues.

g. A report is given by the review board chairperson to the appropriate management and governing program management committees (PMCs) charged with oversight of the project.

h. Appropriate procedures and controls are instituted to ensure that all actions from reviews are followed and verified through implementation to closure.

i. The Program/Project Decision Authority signs a decision memo documenting successful completion of the review.

6.1.1 A Systems Engineering Management Plan (SEMP) is used to establish the technical content of the engineering work early in the Formulation phase for each project and is updated as needed throughout the project life cycle. The SEMP provides the specifics of the technical effort and describes what technical processes will be used, how the processes will be applied using appropriate activities, how the project will be organized to accomplish the activities, and the resources required for accomplishing the activities. The process activities are driven by the critical events during any phase of a life cycle (including operations) that set the objectives and work product outputs of the processes and how the processes are integrated. (See Appendix D for an annotated outline for the SEMP.) The SEMP provides the communication bridge between the project management team and the technical implementation teams. It also facilitates effective communication within the technical teams. The SEMP provides the framework to realize the appropriate work products that meet the entry and exit criteria of the applicable project life-cycle phases to provide management with necessary information for assessing technical progress.

6.1.2 The primary function of the SEMP is to provide the basis for implementing the technical effort and for communicating what will be done, by whom, when, where, and why, along with the cost drivers. In addition, the SEMP identifies the roles and responsibility interfaces of the technical effort and how those interfaces will be managed.

6.1.3 The SEMP is the vehicle that documents and communicates the technical approach, including the application of the common technical processes; resources to be used; and key technical tasks, activities, and events along with their metrics and success criteria. The SEMP communicates the technical effort that will be performed by the assigned technical team to the team itself, managers, customers, and other stakeholders. Whereas the primary focus is on the applicable phase in which the technical effort will be done, the planning extends to a summary of the technical efforts that are planned for future applicable phases.

6.1.4 The SEMP is a tailorable document that captures a project's current and evolving systems engineering strategy and its relationship with the overall project management effort throughout the life cycle of the system. The SEMP's purpose is to guide all technical aspects of the project.

6.1.5 The SEMP is consistent with higher level SEMPs and the project plan.

6.1.6 The content of a SEMP for an in-house technical effort may differ from that of a SEMP for an external technical effort. For an external technical effort, the SEMP should include details on developing requirements for source selection, monitoring performance, and transferring and integrating externally produced products to NASA. (See Appendix D for further details.)

6.1.7 The SEMP provides the basis for generating the contractor engineering plan.

6.2.1 Working with the program/project manager, the technical team determines the appropriate level within the system structure at which SEMPs are to be developed, taking into account factors such as number and complexity of interfaces, operating environments, and risk factors.

6.2.2 The technical team establishes the initial SEMP early in the Formulation phase and updates it as necessary to reflect changes in scope or improved technical development.

6.2.3 The technical teams shall define in the project SEMP how the required 17 common technical processes, as implemented by Center documentation, including tailoring, will be recursively applied to the various levels of project product layer system structure during each applicable life-cycle phase [SE-58]. The technical teams will have their approaches approved by the Designated Governing Authority (DGA). (See the SE Handbook.)

6.2.4 The technical team baselines the SEMP per the Center's procedures and policies at SRR for projects and single-project programs and System Definition Review (SDR) for loosely coupled programs, tightly coupled programs, and uncoupled programs. The content of Appendix D should be used as a guide. At the discretion of the project manager and the DGA, for a small project the material in the SEMP can be placed in the project plan's technical summary and the annotated outline in Appendix D used as a topic guide.

6.2.5 As changes occur, the SEMP will be updated by the technical team, reviewed and reapproved by both the DGA and the program/project manager, and presented at subsequent milestone reviews or their equivalent. The SEMP is updated at major milestone reviews through the SIR.

6.2.6 The technical team shall ensure that any technical plans and discipline plans are consistent with the SEMP and are accomplished as fully integrated parts of the technical effort [SE-59].

6.2.7 The technical team shall establish Technical Performance Measures (TPMs) for the project that track/describe the current state versus plan [SE-60]. These measures are described in the SEMP per Appendix D.

6.2.8 The technical team shall report the TPMs to the program/project manager on an agreed-to reporting interval [SE-61].

6.2.9 A technical leading indicator is a subset of the TPMs that provides insight into potential future states. The technical team shall ensure that the set of TPMs includes the following leading indicators:

a. Mass margins for projects involving hardware [SE-62].

b. Power margins for projects that are powered [SE-63].
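As an illustration only, the following sketch shows one way a technical team might track mass and power margins as leading indicators. The margin convention used here (remaining allocation divided by allocation), the class and function names, the 10 percent threshold, and the sample values are all assumptions made for this example; they are not defined by this NPR, and a project would use the definitions recorded in its own SEMP.

```python
from dataclasses import dataclass


@dataclass
class MarginIndicator:
    """One leading indicator: a resource tracked against its allocation.

    All field names are illustrative, not taken from the NPR.
    """
    name: str                 # e.g. "mass" or "power"
    unit: str                 # e.g. "kg" or "W"
    allocation: float         # not-to-exceed value assigned to the project
    current_estimate: float   # current best estimate of the resource used

    def margin(self) -> float:
        """Remaining margin as a fraction of the allocation (assumed convention)."""
        if self.allocation <= 0:
            raise ValueError("allocation must be positive")
        return (self.allocation - self.current_estimate) / self.allocation


def margin_report(indicators, threshold=0.10):
    """Return (name, margin, below_threshold) for each indicator.

    The default threshold is a placeholder, not a NASA-specified value.
    """
    return [(i.name, i.margin(), i.margin() < threshold) for i in indicators]


# Sample values are invented for the example.
report = margin_report([
    MarginIndicator("mass", "kg", allocation=1000.0, current_estimate=850.0),
    MarginIndicator("power", "W", allocation=400.0, current_estimate=380.0),
])
for name, margin, flagged in report:
    suffix = " (below threshold)" if flagged else ""
    print(f"{name}: margin {margin:.1%}{suffix}")
```

Reporting the margin as a trend over successive reporting intervals, rather than as a single value, is what would make it a leading indicator in the sense described above.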

6.2.10 The technical team shall ensure that the set of Review Trends includes closure of review action documentation (Request for Action, Review Item Discrepancies, and/or Action Items as established by the project) for all software and hardware projects [SE-64].

Acceptable Risk: The risk that is understood and agreed to by the program/project, governing PMC, Mission Directorate, and other customer(s) such that no further specific mitigating action is required. (Some mitigating actions might have already occurred.)

Activity: A set of tasks that describe the technical effort to accomplish a process and help generate expected outcomes.

Affordability: The practice of balancing system performance and risk with cost and schedule constraints over the system life, satisfying system operational needs in concert with strategic investment and evolving stakeholder value.

Approve (with respect to Technology Maturation Products from Appendix F): Used for a product, such as Concept Documentation, that is not expected to be put under classic configuration control but still requires that changes from the "approved" version are documented at each subsequent "update."

Baseline: An agreed-to set of requirements, designs, or documents that will have changes controlled through a formal approval and monitoring process.

Baseline (with respect to Technology Maturation Products from Appendix F): Indicates putting the product under configuration control so that changes can be tracked, approved, and communicated to the team and any relevant stakeholders. The expectation on products labeled "baseline" is that they will be at least final drafts going into the designated review and baselined coming out of the review. Baselining a product does not necessarily imply that it is fully mature at that point in the life cycle. Updates to baselined documents require the same formal approval process as the original baseline.

Bidirectional Traceability: The ability to trace any given requirement/expectation to its parent requirement/expectation and to its allocated children requirements/expectations.
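As an illustration only, bidirectional traceability can be pictured as a pair of lookups, one from each item to its parent and one from each item to its allocated children. The sketch below is a minimal data structure under two assumptions: each item has at most one parent, and the identifiers (`SYS-1`, `SUB-1.1`, `SUB-1.2`) and the `TraceMatrix` name are hypothetical. It does not represent any particular NASA requirements tool.

```python
from collections import defaultdict


class TraceMatrix:
    """Parent/child links between requirement (or expectation) identifiers."""

    def __init__(self):
        self._parent = {}                    # child id -> parent id
        self._children = defaultdict(list)   # parent id -> list of child ids

    def allocate(self, parent_id, child_id):
        """Record that child_id was allocated from parent_id."""
        if child_id in self._parent:
            raise ValueError(f"{child_id} already has a parent")
        self._parent[child_id] = parent_id
        self._children[parent_id].append(child_id)

    def parent_of(self, req_id):
        """Trace upward; returns None for a top-level item."""
        return self._parent.get(req_id)

    def children_of(self, req_id):
        """Trace downward to the allocated children (empty list if none)."""
        return list(self._children.get(req_id, []))


# Hypothetical identifiers used only to exercise both directions.
trace = TraceMatrix()
trace.allocate("SYS-1", "SUB-1.1")
trace.allocate("SYS-1", "SUB-1.2")
print(trace.parent_of("SUB-1.1"))
print(trace.children_of("SYS-1"))
```

Keeping both maps updated in a single `allocate` call is the design choice that keeps the upward and downward traces consistent with each other.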

Certification Package: The body of evidence that results from the verification activities and other activities such as reports, special forms, models, waivers, or other supporting documentation that is evaluated to indicate the design is certified for flight/use.

Component Facilities: Complexes that are geographically separated from the NASA Center or institution to which they are assigned but are still part of the Agency.

Concept of Operations (ConOps): Developed early in Pre-Phase A, describes the overall high-level concept of how the system will be used to meet stakeholder expectations, usually in a time-sequenced manner. It describes the system from an operational perspective and helps facilitate an understanding of the system goals. It stimulates the development of the requirements and architecture related to the user elements of the system. It serves as the basis for subsequent definition documents and provides the foundation for the long-range operational planning activities.

Contractor: For the purposes of this NPR, an individual, partnership, company, corporation, association, or other service having a contract with the Agency for the design, development, manufacture, maintenance, modification, operation, or supply of items or services under the terms of a contract to a program or project within the scope of this NPR. Research grantees, research contractors, and research subcontractors are excluded from this definition.

Corrective Action: Action taken on a product to correct and preclude recurrence of a failure or anomaly, e.g., design change, procedure change, personnel training.

Critical Event: An event in the operations phase of the mission that is time sensitive and is required to be accomplished successfully in order to achieve mission success. These events must be considered early in the life cycle as drivers for system design.

Customer: The organization or individual that has requested a product and will receive the product to be delivered. The customer may be an end user of the product, the acquiring agent for the end user, or the requestor of the work products from a technical effort. Each product within the system hierarchy has a customer.

Customization: The modification of recommended SE practices that are used to accomplish the SE requirements. Examples of these practices are in Appendix C or in the NASA Systems Engineering Handbook, NASA/SP-2007-6105.

Decision Authority: The individual authorized by the Agency to make important decisions for programs and projects under their authority.

Derived Requirements: Requirements arising from constraints, consideration of issues implied but not explicitly stated in the high-level direction provided by Agency and Center institutional requirements, or factors introduced by the selected architecture and design.

Designated Governing Authority: The Center Director or the person who has been designated by the Center Director to ensure the appropriate level of technical management oversight. For large programs/projects, this will usually be the identified Engineering Technical Authority. For small activities/projects, the DGA may be delegated to a line manager or other appropriate technical expert.

Deviation: A documented authorization releasing a program or project from meeting a requirement before the requirement is put under configuration control at the level the requirement will be implemented.

Documentation: Captured information and its support medium that is suitable to be placed under configuration control. Note that the medium may be paper, photograph, electronic storage (digital documents and models), or a combination.

Enabling Products: The life-cycle support products and services (e.g., production, test, deployment, training, maintenance, and disposal) that facilitate the progression and use of the operational end product through its life cycle. Since the end product and its enabling products are interdependent, they are viewed as a system. Project responsibility thus extends to responsibility for acquiring services from the relevant enabling products in each life-cycle phase. When a suitable enabling product does not already exist, the project that is responsible for the end product can also be responsible for creating and using the enabling product. An example is below in Figure A-1.

Figure A-1 - Enabling Product Relationship to End Products

Entrance Criteria: Guidance for minimum accomplishments each program or project fulfills prior to a life-cycle review.

Establish (with respect to each process in Chapter 3): Develop policy, work instructions, or procedures to implement process activities.

Expectation: A statement of needs, desires, capabilities, and wants that are not expressed as a requirement (not expressed as a "shall" statement). Once the set of expectations from applicable stakeholders is collected, analyzed, and converted into a "shall" statement, the "expectation" becomes a "requirement." Expectations can be stated in either qualitative (nonmeasurable) or quantitative (measurable) terms. Requirements are always stated in quantitative terms. Expectations can be stated in terms of functions, behaviors, or constraints with respect to the product being engineered or the process used to engineer the product.

Federal Records: All books, papers, maps, photographs, machine-readable materials, or other documentary materials, regardless of physical form or characteristics, made or received by an agency of the U.S. Government under Federal law or in connection with the transaction of public business and preserved or appropriate for preservation by that agency or its legitimate successor as evidence of the organization, functions, policies, decisions, procedures, operations, or other activities of the Government or because of the informational value of the data in them.

Final (with respect to Technology Maturation Products from Appendix F): Applied to products that are expected to exist in a specified form, e.g., minutes and final reports.

Formulation Phase: The first part of the NASA management life cycle defined in NPR 7120.5, where system requirements are baselined, feasible concepts are determined, a system definition is baselined for the selected concept(s), and preparation is made for progressing to the Implementation phase.

Human Systems Integration: An interdisciplinary and comprehensive management and technical process that focuses on the integration of human considerations into the system acquisition and development processes to enhance human system design, reduce life-cycle ownership cost, and optimize total system performance. Human system domain design activities associated with manpower, personnel, training, human factors engineering, safety, health, habitability, and survivability are considered concurrently and integrated with all other systems engineering design activities.