NASA Procedural Requirements

NPR 8831.2F

Effective Date: October 07, 2015

Expiration Date: October 06, 2025


12.1.1 Historically, NASA has contracted for support of its maintenance activities. Typically, contracts would specify a level of effort to be provided, rather than specifying the results to be achieved. However, the following are some of the problems associated with that approach:

a. It provides no incentive for contractors to be innovative or efficient.

b. It is uneconomical for the Government because it hires a "marching army" of contractor employees for a term of employment, instead of contracting for a job to be completed.

c. It may foster a personal services environment wherein NASA is perceived as the "employer" who supervises the efforts of contractor "employees."

d. It can contribute to a breakdown of project discipline (e.g., when the project office becomes concerned with how to keep the contractor busy, unplanned and often unnecessary "extras" may be added to the contractor's tasking).

e. It creates the opportunity for unnecessary enrichment of the labor skill mix, thereby driving up labor costs.

f. It requires the Government to perform extensive surveillance because, absent clearly stated contract objectives, the contractor requires continual clarification from Government technical representatives.

12.1.2 NASA's policy is to "Utilize performance-based contracts and best-value principles to the maximum extent feasible and practical to shift cost risk to contractors and maximize competitive pricing." It is also NASA's policy to include risk management as an essential element of the entire procurement process, including contract surveillance. In following these policies, NASA has committed to converting its method of procuring facilities maintenance services from a cost-reimbursement approach to a fixed-price, performance-based contracting approach.

12.2.1 Under the PBC concept, the Government contracts for specific services and outcomes, not resources. Contractor flexibility is increased, Government oversight is decreased, and attention is devoted to managing performance, results, and ultimate outcomes.

a. The SOW contains explicit, measurable performance requirements ("what"), eliminates process-oriented requirements ("how"), and includes only minimally essential reporting requirements that are based on risk. The Government employs a measurement method (e.g., project surveillance plan) that is clearly communicated to the contractor and under which the contractor is held fully accountable. Incentives can be used, but should be relevant to performance and center on the areas of value to NASA and those of high risk that are within the control of the contractor. The SOW should encourage the use of contractor best practices and also require the contractor to use cutting-edge maintenance practices, as utilized in the private sector, to give NASA the best product.

b. NASA's policy is to maximize the use of firm-fixed-price contracts, combined with IDIQ unit-price provisions where necessary. In implementing this policy, as much "core" work as possible should be included in the firm-fixed-price portion of the contract. IDIQ work should be held to a minimum because of its cost.

(1) Fixed-Price Work. To shift cost risk to the contractor, fixed pricing and fixed-unit pricing are used to the maximum extent feasible and practical. Because the contract requirements (time, location, frequency, and quantity) are known or adequate historical data is available to allow a reasonable estimate to be made, the contractor can agree to perform for a total price similar to a single work order. The contractor does not get paid for work that is unsatisfactorily performed or not performed at all, and deductions are made in accordance with the Schedule of Deductions (Section E of the contract).

(2) IDIQ Unit-Price Work. Not every item of work can be adequately quantified at contract inception to allow it to be firm-fixed priced. For example, few can predict the frequency and quantity of environmental spill cleanup actions that may be required over a given year or the exact number of chairs and other preparations required for VIP visits and special occasions two years away. Often, historical data is inadequate to enable fixed pricing of certain services. Indefinite-quantity contract requirements are performed on an "as ordered" basis. A fixed-unit price is bid to perform one occurrence, or a given quantity, of each type of work. Payment is based on the unit price bid per unit (Section B of the contract) times the number of units performed or on an agreed-to price. Because each instance of IDIQ work is ordered and paid for separately, each delivery order shall be inspected and accepted as being satisfactorily completed before payment is made, as if each were a separate mini-contract. Contract prices for unit-priced tasks include all labor, materials, and equipment for performing that specific work. The unit prices offered are multiplied by the quantity of units estimated to be ordered during the contract term, but only for purposes of proposal evaluation. Work will only be paid for as ordered and completed.

c. The contract should be a completion type (something is accomplished) as opposed to a term/level-of-effort type of contract (effort is expended). If level of effort, staffing levels, or a skill mix of workers is specified, the contract is not performance based.

d. Contractor-Government partnering is highly recommended to achieve mutually supportive goals (see section 12.4, Partnering).

e. The Center Procurement Office should be contacted for assistance. The contracting officer will determine the appropriate contract type.
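The IDIQ unit-price arithmetic described in 12.2.1.b(2) can be illustrated with a minimal sketch. It is an illustration only, not a NASA tool: the work items, unit prices, and quantities are hypothetical, and the names `DeliveryOrder`, `evaluated_price`, and `amount_payable` are assumptions introduced for this example. It shows the two distinct uses of the unit prices: estimated quantities are used only for proposal evaluation, while payment covers only delivery orders that were ordered, completed, inspected, and accepted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveryOrder:
    """One IDIQ delivery order (hypothetical structure for illustration)."""
    item: str             # type of unit-priced work
    units_completed: int  # units actually performed
    accepted: bool        # inspected and accepted by the Government


def evaluated_price(unit_prices, estimated_quantities):
    """Proposal-evaluation total: unit price times estimated quantity."""
    return sum(unit_prices[item] * qty
               for item, qty in estimated_quantities.items())


def amount_payable(unit_prices, orders):
    """Payment total: only accepted delivery orders are paid."""
    return sum(unit_prices[o.item] * o.units_completed
               for o in orders if o.accepted)


# Hypothetical Section B unit prices and estimated annual quantities.
unit_prices = {"spill_cleanup": 1200.0, "vip_event_setup": 450.0}
estimated = {"spill_cleanup": 10, "vip_event_setup": 6}

# Orders actually placed; the last one has not yet been accepted.
orders = [
    DeliveryOrder("spill_cleanup", 2, True),
    DeliveryOrder("vip_event_setup", 1, True),
    DeliveryOrder("spill_cleanup", 1, False),
]

evaluation_total = evaluated_price(unit_prices, estimated)
payment_total = amount_payable(unit_prices, orders)
```

In this sketch the evaluation total is used only to compare offers; the payment total depends solely on accepted orders, which mirrors the "separate mini-contract" treatment of each delivery order.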

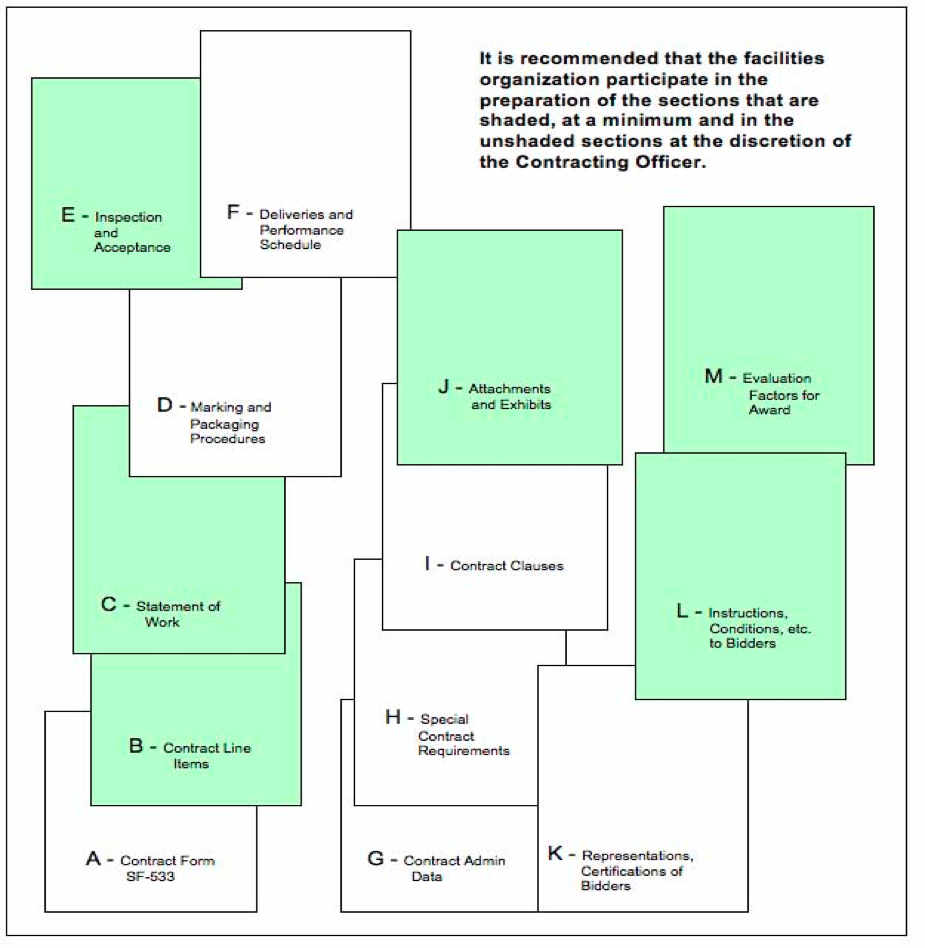

12.2.2 Facility Organization's Responsibilities. The Center's facilities organizations shall work together with the users. It is recommended that the facilities organization participate in the preparation of the activities noted in the sections B, C, E, J, L, and M shown in Figure 12-1, at a minimum, and in the activities noted in the sections A, D, F, G, H, I, and K at the discretion of the contracting officer. This includes identifying all functions and services to be included in the contract, developing the functional tree diagram (which shows the relationships of the functions in the contract), and preparing a WBS for the technical section (Section C) and the Performance Requirements Summary (PRS), which is precisely coordinated with the tree diagram.

Figure 12-1 Contract Sections

12.2.3 The maintenance organization shall ensure the contract states that maintenance data entered in a CMMS is Government property and, as such, is to be available for Government use and retention for historical purposes, regardless of which party (Government or contractor) is responsible for populating and maintaining the database.

12.2.3.1 Where the contractor operates the CMMS, it shall be made clear in the contract that the CMMS maintenance data is Government property and will be turned over to the Government at the end of the contract.

12.2.3.2 The WBS shall include all contract requirements to be purchased.

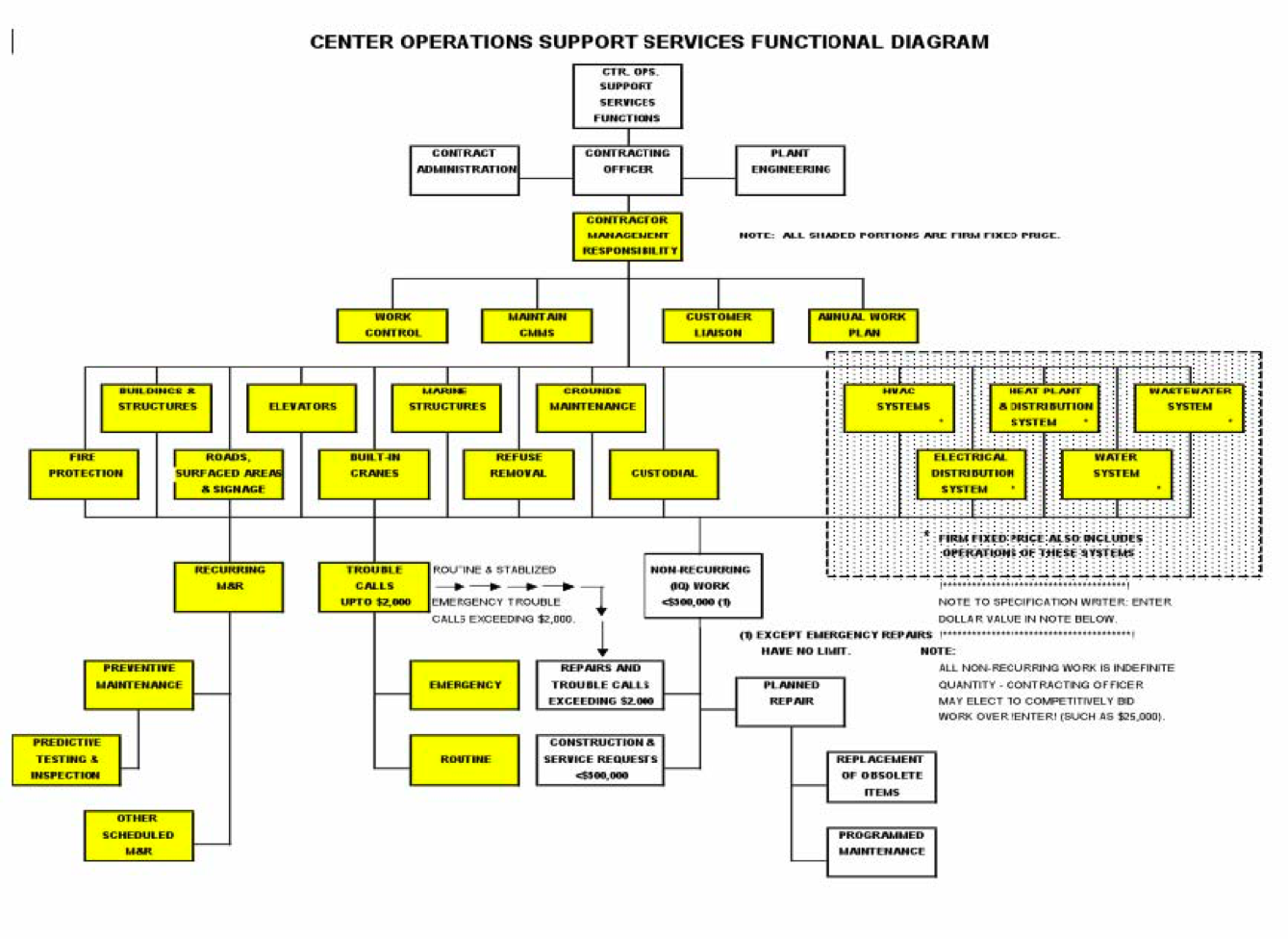

12.2.4 Functional Diagram. Figure 12-2 is an example of a functional diagram at one NASA Center. It represents graphically the highest level of the WBS and should be the starting point in preparing the PBC documentation. It identifies each function that is included in the PBC. Each of these functions will be individually addressed and will have a counterpart subsection in Section C of the contract where the requirements, performance indicators, and other supplemental information are discussed. In this specific example, each shaded box represents a function discussed in the technical sections of the contract (Subsections C.8 through C.27). The large hashed-shaded area indicates that the five functions within it include operations support as well as maintenance. The white box functions are not in the contract, but are shown to indicate relationships. Functional diagrams will vary by Center, depending on the functions being contracted. However, their preparation and use are important and are the basis of the WBS and the contract documentation.

12.3.1 Performance-based specifications can be stated in terms of outputs or outcomes. For example, a typical performance-based contract will have numerous output requirements for maintaining facilities, such as performing PM, testing and treating circulating water in HVAC cooling towers, and performing certain operational checks. An outcome requirement, however, simply might be that "buildings are available and fully functional to the user when needed" and integrates all services necessary to produce that result. Contractor flexibility is increased by allowing the contractor to decide what work tasks are needed and to propose cutting-edge technologies and techniques that may be more effective than traditional approaches. Government oversight is decreased, and attention is devoted to managing final results. Transferring additional responsibility to the contractor introduces a certain amount of risk for NASA; outcome requirements are, therefore, not appropriate for all functions. The use of output (versus outcome) requirements is suggested for the following circumstances:

a. The Center determines that the criticality of the function is too important to allow a contractor to deviate from proven work methods.

b. There is a mandated regulation or operational procedure that requires a specific work method to be followed.

c. A procedural requirement is mandatory for safety considerations.

d. The function has very high visibility and a proven methodology has provided excellent results in the past.

Figure 12-2 Functional Diagram

12.3.2 Use of Metrics. Performance specifications require the identification of a standard of performance for the contractor's work. For example, an appropriate outcome specification may require the contractor to achieve a certain equipment availability. That is an outcome requirement. The metric or indicator associated with that requirement is percent availability. The percentage number that the contractor will achieve is the standard (benchmark), set by the Center based on the current baseline performance that is acceptable to and being achieved by contractor or civil service forces at the Center. Unless the baseline values of these metrics are known, there is no rational basis on which to set a standard, and the use of the outcome specification may not be justified.
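As an illustration of the requirement, metric, and standard relationship in 12.3.2, the following sketch computes percent availability and compares it with a Center-set standard. The availability formula (scheduled hours minus downtime, divided by scheduled hours) is a common definition assumed here, not one stated in this document, and the function names and figures are hypothetical.

```python
def percent_availability(scheduled_hours, downtime_hours):
    """Percent of scheduled hours the equipment was available.

    Assumed definition: (scheduled - downtime) / scheduled * 100.
    """
    if scheduled_hours <= 0:
        raise ValueError("scheduled_hours must be positive")
    if not 0 <= downtime_hours <= scheduled_hours:
        raise ValueError("downtime_hours must be between 0 and scheduled_hours")
    return 100.0 * (scheduled_hours - downtime_hours) / scheduled_hours


def meets_standard(achieved_percent, standard_percent):
    """True when the achieved metric is at or above the standard."""
    return achieved_percent >= standard_percent


# Hypothetical month: 720 scheduled hours with 18 hours of downtime.
achieved = percent_availability(720, 18)

# Hypothetical standard derived from the Center's baseline performance.
standard = 97.0
result = meets_standard(achieved, standard)
```

The standard passed to `meets_standard` is meaningful only if it comes from a known baseline, which is the point the paragraph above makes about justifying an outcome specification.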

12.3.3 Refer to the NASA Guide Performance Work Statement for Center Operations Service Support Addendum (July 1999) for additional, detailed information on outcome specifications.

12.4.1 Partnering describes how well the customer and the contractor work together, i.e., how well they communicate, how they resolve disputes, and how they execute the contract to fulfill each other's needs. It is a commitment by both parties to cooperate, to be fair, and to understand each other's expectations and values. It is an agreement between NASA and the contractor to work cooperatively as a team, to identify and resolve problems, and to achieve mutually beneficial performance and result goals.

12.4.2 Partnering is a relationship between organizations where the following occurs:

a. All parties seek win-win solutions to problems rather than solutions that favor one side.

b. Value is placed on the relationship. There is an interdependence wherein if one party succeeds, all parties will benefit.

c. Trust and openness are a normal part of the relationship. The sharing of ideas and problems without fear of reprisal or exploitation promotes fair and rapid solutions to problems.

d. An environment of cost savings and profitability exists.

e. All understand that no one benefits from the exploitation of the other party.

f. Innovation is encouraged.

g. Each party is aware of the needs and concerns of the other. No actions are taken without first considering the effect they have on each party.

h. Each individual has unique talents and values that add value to the group.

i. Overall performance is improved. Gains for one party help the whole group and are not at the expense of another party.

12.4.3 NASA Centers should seek to partner with their support contractors. Benefits that are usually achieved by participating organizations include improvements in contractor-customer relationships, reduction in claims, reduction in time growth, reduction in cost growth, and fair and mutual interpretations of the specifications.

12.5.1 In contracting for support, it is assumed that the contractor will perform as specified in the contract. Experience has shown that contractors can meet contract requirements with performance ranging from minimally acceptable to excellent. Incentives in Government service contracts are generally more negative than positive, with emphasis on invoice deductions for poor work or work not performed. Rather than just deductions, incentives can be used to encourage the contractor to expend effort and resources and employ cutting-edge, breakthrough maintenance practices as used in industry to attain top performance. Following are examples of incentives that could be used.

12.5.2 Incentive Fee. An incentive-fee provision can be included in a contract to encourage the contractor, through a suitable monetary incentive, to provide the management, equipment, materials, labor, and supervision necessary for performance improvement. Most often when positive fee-type incentives are used, the fee starts at 100 percent and then is reduced for subjective opinions of areas of dissatisfaction. A better incentive is the reverse, i.e., starting at zero and increasing for areas or instances of greater, objectively measurable performance.

12.5.3 Award Term. The award term is an innovative incentive approach, similar to ones used in private industry. This incentive approach potentially allows continued performance of the contracted effort for an additional period of time, not to exceed some specific potential total contract period, based on overall contractor performance. A provision for a reduction in the contract term for poor contractor performance, such as up to 18 months, could also be included. As an example of an award-term contract, a contract base period could be two or three years with the first year being a startup period wherein the evaluation results would not be included in any award-term decision. Each subsequent year, the contractor's technical performance would be evaluated and the results would be used to reduce, maintain, or increase the contract term depending on the contractor's performance. The performance requirements could also increase over a period of time. For example, consider a contract with a three-year core term. If performance is rated as very good for the second and third years, then years four and five are added. If the fourth-year rating is excellent, a sixth year is added. If the fifth year is rated excellent, a seventh year is added, and so on for a maximum contract term of 10 years. An award-term evaluation plan will be used in this process.
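The three-year core-term example in 12.5.3 can be traced with a short sketch. It is one possible reading of the example, not an award-term evaluation plan: the rating scale and the name `award_term_years` are hypothetical, "very good" is assumed to mean "very good or better," each excellent rating from year four onward is assumed to add exactly one year, and term reductions for poor performance are not modeled.

```python
# Hypothetical rating scale, ordered from worst to best.
RANK = {"unsatisfactory": 0, "satisfactory": 1, "good": 2,
        "very good": 3, "excellent": 4}

CORE_YEARS = 3   # core term in the example
MAX_YEARS = 10   # maximum contract term in the example


def award_term_years(ratings):
    """Return the total contract term in years.

    `ratings` maps contract year -> rating string. Year 1 is the
    startup period and is ignored, as in the example.
    """
    term = CORE_YEARS
    # Years 2 and 3 must both be rated very good (or better, by assumption).
    earned = all(
        RANK[ratings.get(year, "satisfactory")] >= RANK["very good"]
        for year in (2, 3)
    )
    if not earned:
        return term
    term = 5  # years four and five are added

    # From year 4 on, each excellent rating adds one year, up to the cap.
    for year in range(4, MAX_YEARS):
        if year > term:
            break  # this year was never awarded, so it is never performed
        if ratings.get(year) == "excellent":
            term = min(term + 1, MAX_YEARS)
    return term


example = {1: "good", 2: "very good", 3: "very good",
           4: "excellent", 5: "excellent"}
total_term = award_term_years(example)
```

With the ratings in `example`, the term grows from three years to five, then to six and seven, matching the progression described in the paragraph above.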

12.6.1 QA is a program undertaken by NASA to provide some measure of the quality of goods and services purchased from a contractor. How much QA is necessary depends on the quality of the contractor, criticality of the services, and the nature, amount, and assumption of risk involved. The QA plan should be developed concurrently with the Performance Work Statement, Section C, since the latter defines the work outputs and the quality standards, while the former defines how the work outputs will be observed and measured.

12.6.2 Risk Management. Risk management is an organized method of identifying and measuring risk and developing, selecting, and managing options for handling these risks. It is NASA's policy to include risk management as an essential element of the entire procurement process, including contract surveillance. It implies the control of future events is proactive rather than reactive and comprises four elements:

a. Risk Assessment. Identifies and assesses all aspects of the contract requirements and contractor performance where there is an uncertainty regarding future events that could have a detrimental effect on the contract outcome and on NASA programs and projects. As the contract progresses, previous uncertainties will become known and new uncertainties will arise.

b. Risk Analysis. Once risks are identified, each risk should be characterized as to the likelihood of its occurrence and the severity of its potential consequences. The analysis should identify early warning signs that a problem is going to arise.

c. Risk Treatment. After a risk has been assessed and analyzed, action is taken. Actions include the following:

(1) Transfer. Transfer the risk to the contractor. For example, modify the contract requirements so that the contractor has more or less direct control over the outcome.

(2) Avoidance. Determine that the risks are so great that the current method is removed from further consideration and an alternative solution is found. For example, delete a specific element of work from the contract to have it assumed by the on-site researchers.

(3) Reduction. Minimize the likelihood that an adverse event will occur or minimize the risk of the outcome to the NASA program or project. For example, increase the frequency of surveillance, change the type of surveillance or identify alarm situations, and promptly meet with the contractor to resolve this and future potential occurrences.

(4) Assumption. Assume the risk if it can be effectively controlled, if the probability of risk is small, or if the potential damage is either minimal or too great for the contractor to bear. For example, allow the contractor's own QC of certain custodial functions at a remote location to be the sole QA surveillance method for the Center for that work.

(5) Sharing. When the risk cannot be appropriately transferred, or when it is not in the best interest of the Center to assume the risk, the Center and contractor may share the risk.

d. Lessons Learned. After problems have been encountered, the Center should document any warning signs that, with hindsight, preceded the problem, what approach was taken, and what the outcome was. This will not only help future acquisitions but could help identify recurring problems in the existing contract.
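The risk analysis step in 12.6.2.b characterizes each risk by likelihood and severity. One common way to make that characterization comparable across risks is a simple likelihood-times-severity score; the sketch below uses that technique as an assumption, since this document does not prescribe a scoring scheme. The 1-to-5 scales, the `Risk` class, and the sample risks are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A contract risk with hypothetical 1-5 likelihood and severity scales."""
    description: str
    likelihood: int  # 1 = rare, 5 = almost certain (assumed scale)
    severity: int    # 1 = negligible, 5 = severe (assumed scale)

    @property
    def score(self):
        # Assumed scoring rule: likelihood multiplied by severity.
        return self.likelihood * self.severity


def rank_risks(risks):
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical risks for a facilities maintenance contract.
risks = [
    Risk("Missed PM on noncritical HVAC unit", likelihood=4, severity=1),
    Risk("Chiller outage in research facility", likelihood=2, severity=5),
    Risk("Late routine trouble-call response", likelihood=3, severity=2),
]

ranked = rank_risks(risks)
top_risk = ranked[0].description
```

A ranking like this could inform which treatment (transfer, avoidance, reduction, assumption, or sharing) deserves attention first, and where surveillance effort is best spent.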

12.6.3 As part of the cost-conscious emphasis practiced throughout NASA, it is undesirable to perform a 100-percent inspection on all work performed. Rather, considering risk as discussed in section 12.6.2, the Center should select the optimum combination of inspection methods, frequencies, and populations. The contract vehicle is a combination firm-fixed-price and IDIQ-negotiated procurement based on evaluating technical and cost proposals and past performance.

12.6.3.1 The Request for Technical Proposals' evaluation criteria heavily consider past performance and require the offerors (and their subcontractors) to address how they intend to meet the quality standards for the specific contract.

12.6.3.2 Award is based on a best-value consideration of price and technical merit and past performance.

12.6.3.3 A partnering concept and agreement are in force to reduce adversarial relationships and foster a team approach to providing the required services.

12.6.3.4 In general, this approach starts with minimal performance evaluation, recognizing the high expectations of good performance from a quality contractor. The follow-on degree and type of monitoring of the contractor's work depends on the overall performance and the perception of increased or decreased risk to the desired outcomes. Closer scrutiny may be in order if there is a downward trend in performance, if the degree of unacceptable risk increases, or if the performance is otherwise unacceptable. Less frequent inspections or a less stringent method may be selected if the contractor's performance is consistently superb, if there is a greater comfort level in risk to the desired outcome, and if there is a high degree of satisfaction. The key is flexibility in assigning available Quality Assurance Evaluator (QAE) assets where they are needed most. Consult the NASA GPWS for COSS for a more detailed discussion of the QA program.

12.6.4 Quality Assurance Methods of Surveillance. There are seven generally recognized QA surveillance methods. The successful QA plan, considering the number of QAEs, will use a combination of any or all of these, based on the population of items inspected, their characteristics and criticality, and the location of the service. Where sufficient Government QAEs are not available, a third party (contractor) could be used to perform the QA function for the Government. These seven methods are as follows:

a. 100-percent Inspection. Usually used for services that are considered critically important, have no redundancy, have relatively small monthly populations, or are included in the indefinite quantity portion of the contract.

b. Random Sampling. Uses statistical theory to estimate the performance of the whole population by evaluating only a sample that is indicative of the whole. The use of an ISO-9000-type QA program is predicated on a properly selected, statistical sample. Random sampling tables are used to determine the required sample sizes, and random number generators are used to determine the samples to be evaluated. Random sampling is useful when evaluating a large, homogeneous population.

c. Planned Sampling. Similar to random sampling (less the statistical accuracy) in that it is based on evaluating only a portion of the work for estimating the contractor's performance. Samples are selected based on subjective rationale, and the sample sizes are arbitrarily determined. Planned sampling is most useful when population sizes are not large or homogeneous enough to make random sampling practical.

d. Unscheduled Inspections. These types of inspections should not be used as the primary surveillance method but, rather, in a supportive role. This inspection method may be used where there has already been an indication of poor performance or excessive complaints. The additional, unscheduled inspection could confirm the situation.

e. Validated Customer Feedback. A valuable method of evaluating the contractor's performance with minimal QA assets expended. It is important that the QAE validates all feedback prior to addressing the situation with the contractor. This evaluation method is most valuable for routine, recurring, and noncritical work such as custodial services, grounds maintenance, and refuse collection.

f. RCM Metrics and Trends. Another surveillance method is the use of RCM-based metrics and reliability trending. The QAE can use metrics to assess the performance and effectiveness of maintenance actions as discussed in section 7.8.7, Performance-based Contract Monitoring. See Appendix G for some of the metrics that may be used for this QA method.

g. Contractor-Centered Quality Control. Obtaining self-assessment feedback from the contractor's program and validating it, as necessary, is the least labor-intensive method for NASA QAEs. It relies on the quality of the contractor's own QC program. It is best used when the contractor's performance is repeatedly excellent and reliable, the work is relatively noncritical, and it is in conjunction with other inspection methods. In addition to the contractor's QC program, the contractor may be required to perform QA of the QC program. In the contractor's QA program, the contractor would have a specific approach to monitoring end services to ensure that they have been performed in accordance with the specifications and that the QC program is performing satisfactorily. The contractor QA reports could be used by the QAE as one input in evaluating the contractor's performance.
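The random sampling method in 12.6.4.b relies on a random number generator to choose which work items are evaluated. A minimal sketch using Python's standard `random` module follows. The sample size is treated as an input taken from a random sampling table, which is not reproduced here; the work-order identifiers, the seed, and the name `select_random_sample` are hypothetical.

```python
import random


def select_random_sample(work_items, sample_size, seed=None):
    """Select `sample_size` distinct items without replacement.

    `sample_size` is assumed to come from a random sampling table.
    A fixed `seed` makes the selection reproducible for audit purposes.
    """
    items = list(work_items)
    if not 0 <= sample_size <= len(items):
        raise ValueError("sample_size must be between 0 and the population size")
    rng = random.Random(seed)
    return rng.sample(items, sample_size)


# Hypothetical monthly population of 200 completed work orders.
population = [f"WO-{n:04d}" for n in range(1, 201)]

# Hypothetical sample size of 20 read from a sampling table.
sample = select_random_sample(population, 20, seed=2015)
```

Every item in the population has the same chance of selection, which is what distinguishes this method from planned sampling, where samples are chosen on subjective rationale.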

12.6.5 Performance Requirements Summary

12.6.5.1 The Performance Requirements Summary summarizes the work requirements, standards of performance, and Maximum Allowable Defect Rates (MADRs) for each contract requirement. It is used by the QAEs in the QA program and by the Contracting Officer in making payment deductions for unsatisfactory performance or nonperformance of the contract requirements.

12.6.5.2 Maximum Allowable Defect Rates. MADRs are defect rates, or a specific number of defects, above which the contractor's quality control is considered unsatisfactory for any particular work requirement. The MADR value selected for any particular work requirement should reflect that requirement's importance. For example, the MADR for timely emergency TC response should be less than that for routine TC response. It is important to understand that in fixed-price contracts, the contractor does not get paid for work not performed or that is unacceptable relative to the performance requirements summary, regardless of the MADR. However, the MADR is that point where the contractor should receive a formal notice of deficiency or where more serious administrative action is warranted. There is no need for the Government to advise the contractor of how much leeway is authorized for nonperformance and, therefore, no requirement to advise the contractor of the value of the MADR.
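The MADR comparison in 12.6.5.2 can be sketched as a defect-rate check. The MADR values, inspection counts, and function names below are hypothetical; the sketch covers only the case where the MADR is expressed as a percentage rather than as a specific number of defects.

```python
def observed_defect_rate(defects, inspected):
    """Observed defect rate, in percent, for one work requirement."""
    if inspected <= 0:
        raise ValueError("inspected must be positive")
    if not 0 <= defects <= inspected:
        raise ValueError("defects must be between 0 and inspected")
    return 100.0 * defects / inspected


def exceeds_madr(defects, inspected, madr_percent):
    """True when the observed rate is above the MADR.

    Exceeding the MADR is the point at which a formal notice of
    deficiency or stronger administrative action is considered.
    Deductions for unsatisfactory work apply regardless of this result.
    """
    return observed_defect_rate(defects, inspected) > madr_percent


# Hypothetical MADRs: the stricter value applies to emergency response.
MADR_EMERGENCY_TC = 2.0  # percent
MADR_ROUTINE_TC = 5.0    # percent

# Hypothetical month: 3 late responses out of 100 trouble calls inspected.
rate = observed_defect_rate(3, 100)
emergency_flag = exceeds_madr(3, 100, MADR_EMERGENCY_TC)
routine_flag = exceeds_madr(3, 100, MADR_ROUTINE_TC)
```

The same observed rate triggers action under the stricter emergency MADR but not under the routine one, which reflects the guidance that a MADR should track the importance of the requirement.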

12.6.6 Quality Assurance Plans. QA plans are systematic procedures that, in a planned and uniform manner, provide guidance for the QAEs in their methods and degree of scrutiny to be used in surveillance of contract-performance requirements. Each QA plan may have one or more surveillance guides for inspecting subtasks. Items to be addressed include the following:

a. Identification of the contract requirements.

b. Work requirements and standards of performance.

c. Primary methods of surveillance to be employed.

d. Maximum allowable defect rate.

e. Quantity of work to be performed.

f. Level of surveillance to be employed.

g. Size of the sample to be evaluated.

h. Evaluation procedures.

i. How the results will be analyzed.

12.6.6.1 Each QA plan is a self-contained document written in sufficient detail to preclude extensive reference to other documents or manuals. The use of QA plans ensures conformity, consistency, and standardization in how QA inspections and evaluations will be made over time and between different QAEs monitoring like functions.

12.6.6.2 QA plans can be modified and should be maintained up to date as necessary. The QA plan supplements, but is not part of, the contract and, as such, the contractor should be advised of the existence and use of a formal QA plan but not provided access to it.

12.6.7 Quality Assurance Evaluator Staffing. The QAE assists in evaluating the adequacy of the contractor's performance under each work requirement in the Schedule of Prices (Section B of the contract). The following are specific QAE responsibilities:

a. Accomplishing surveillance required by the contract surveillance plan.

b. Completing and submitting to the COR inspection reports as required in the contract surveillance plans.

c. Recommending to the COR the verification of satisfactorily completed work, payment deductions, liquidated damages, and other administrative actions for poor or nonperformed work.

d. Assisting the COR in identifying necessary changes to the contract, preparing Government estimates, and maintaining work files.

e. Making recommendations to the COR regarding changes or revisions to the PWS and contract surveillance plan.

f. Maintaining accurate and up-to-date documentation records of inspection results and follow-on actions by the contractor.

12.6.7.1 Minimization. Ideally, QAE staffing should be based on a predetermined number of contract inspections and related work requirements rather than on the availability of QAEs. Realistically, personnel constraints dictate that flexibility be used and that the number of QAEs be determined by adjusting the degree of QA performed, in terms of population and degree of scrutiny, from month to month, depending on the contractor's performance for the previous period and the criticality of the work being performed. QA evaluations based solely on customer feedback and documentation for relatively routine, noncritical work require very few, if any, QAEs. One-hundred-percent inspections of critical, research-related processes, on the other hand, would likely require an extraordinary amount of QAE support. Where adequate staffing is not available, all or part of the QA function may be contracted to a third party as a solution.

12.6.7.2 QAE Qualifications. Personnel tasked with monitoring the contractor's performance shall be experienced in the technical area being evaluated and adequately trained in QA methods and procedures. Skills required include QA plan development, inspection techniques, PT&I techniques (if appropriate), and contract administration skills such as documentation, making deductions, and calculating recommended payments.

12.7.1 As a means of reducing contract administration, small IDIQ purchases are successfully being procured by credit cards at several NASA Centers. NASA management issues Government credit cards to various authorized Government employees for use in obtaining materials, equipment, and work or services for the Center. When the contractor is contacted by the authorized cardholder requesting work or services, the contractor and requestor define and mutually agree on the task to be provided. Once agreement is reached concerning the scope, schedule, and fixed price to accomplish the task, a credit card is presented by the requestor and accepted by the authorized contractor representative to consummate and document the understanding. All transactions and historical information shall be recorded in the CMMS.


This document does not bind the public, except as authorized by law or as incorporated into a contract. This document is uncontrolled when printed. Check the NASA Online Directives Information System (NODIS) Library to verify that this is the correct version before use: https://nodis3.gsfc.nasa.gov.